Clean and efficient SQL code is the backbone of effective data analysis. Yet, without proper standards, even the most advanced queries can become unwieldy, slow, and hard to maintain. GoogleSQL, BigQuery’s powerful SQL dialect, empowers teams to harness modern data analysis capabilities -but only if they adhere to best practices.

This guide dives into proven coding standards to enhance readability, collaboration, and performance, ensuring your BigQuery workflows remain streamlined and impactful.

SQL code standards define conventions for writing structured and consistent queries. These include rules for formatting, naming, and commenting to create clear and readable code. In the context of BigQuery, these standards align with modern SQL practices and emphasize the specific capabilities of GoogleSQL.

GoogleSQL is an ANSI-compliant Structured Query Language (SQL) that supports a wide range of statements for working with BigQuery. These include:

These capabilities make GoogleSQL a robust tool for managing and analyzing data at scale while adhering to modern SQL standards.

Understanding the significance of SQL code standards in BigQuery is the first step toward achieving scalable and efficient data management. These standards provide a framework that ensures uniformity and clarity in query writing, fostering better collaboration and simplifying maintenance.

Whether you’re a data analyst, engineer, or manager, consistent code practices help streamline workflows and deliver higher-quality results.

Readable SQL code is critical for effective communication within data teams. Consistent formatting – such as proper indentation, keyword capitalization, and aligning clauses – ensures that even complex queries are easy to interpret. Clear readability minimizes errors during code reviews and accelerates troubleshooting.

By following agreed-upon formatting conventions, teams can make BigQuery queries more accessible to both technical and non-technical stakeholders.

Collaboration thrives when all team members adhere to the same code standards. BigQuery projects often involve cross-functional teams, including data engineers, analysts, and business users.

Unified coding practices eliminate confusion caused by inconsistent styles, enabling smoother code-sharing and review processes. Moreover, well-commented queries foster better understanding, allowing teams to onboard new members quickly and work cohesively on shared projects.

High-quality queries are the backbone of reliable data analysis. Code standards enforce practices like avoiding SELECT *, optimizing joins, and leveraging BigQuery’s partitioning and clustering features.

These guidelines improve performance and reduce the likelihood of introducing costly errors. Adopting consistent standards ensures that queries remain efficient and robust, even as data scales or schema changes.

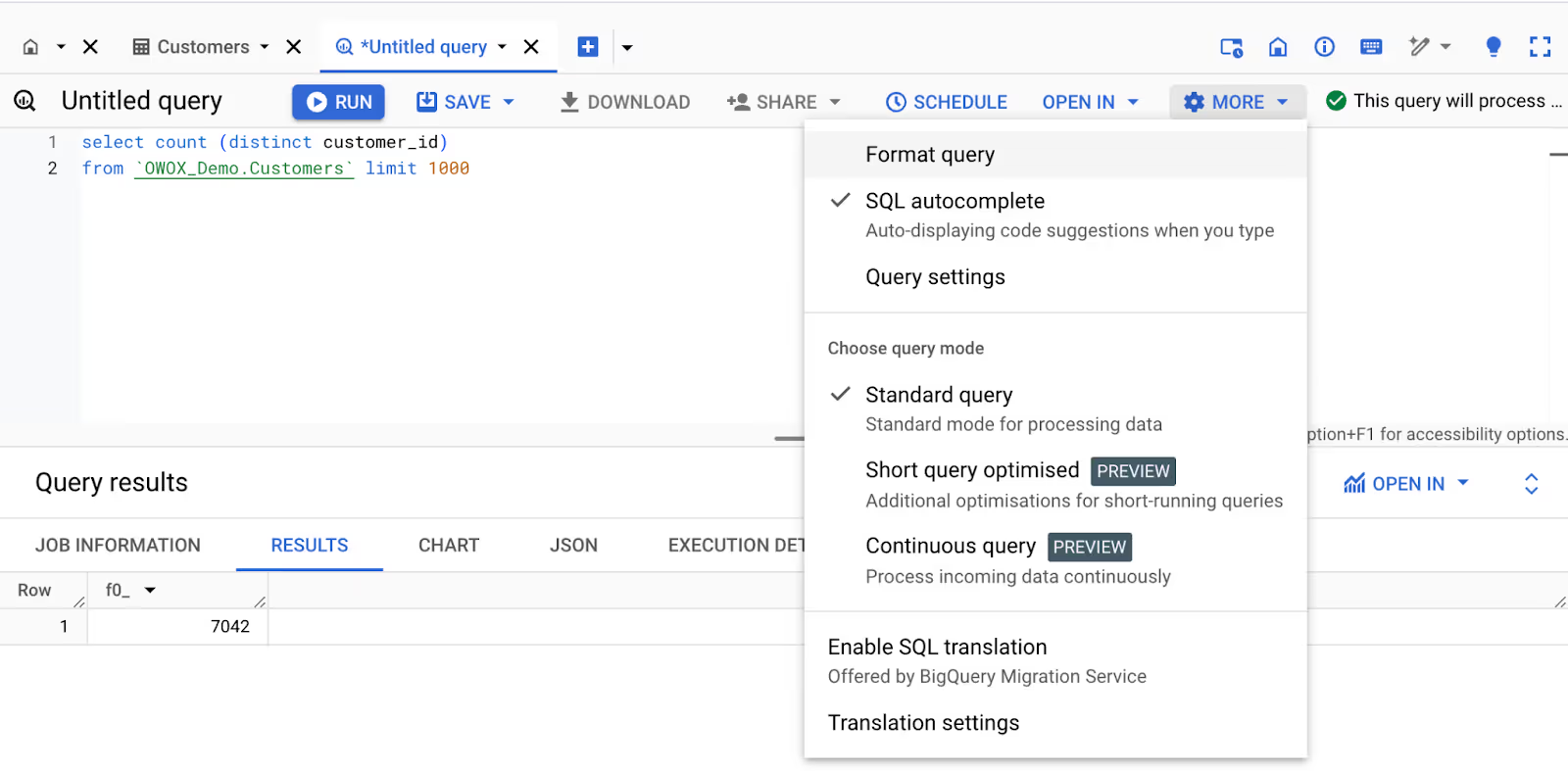

Automation tools streamline the enforcement of code standards, saving time and reducing manual effort. In BigQuery’s SQL workspace, the Format Query feature (Ctrl + Shift + F) automatically aligns your queries with best practices.

External tools like linters can detect errors, highlight areas for improvement, and ensure adherence to predefined guidelines. Leveraging automation promotes consistency and allows teams to focus on query logic instead of formatting.

Code standards play a key role in building sustainable BigQuery projects. Structured and modular queries are easier to adapt when business needs evolve or new data sources are added.

Practices such as using meaningful variable names, modular CTEs, and detailed comments make it easier for teams to revisit and extend old queries.

By investing in strong standards today, organizations can ensure that their BigQuery implementations remain relevant and maintainable for years to come.

In BigQuery projects, adopting clear and consistent SQL code standards is essential for seamless collaboration across the Integration, Consulting, and Retention departments. These guidelines simplify cross-team workflows, enhance code readability, and ensure maintainability over time.

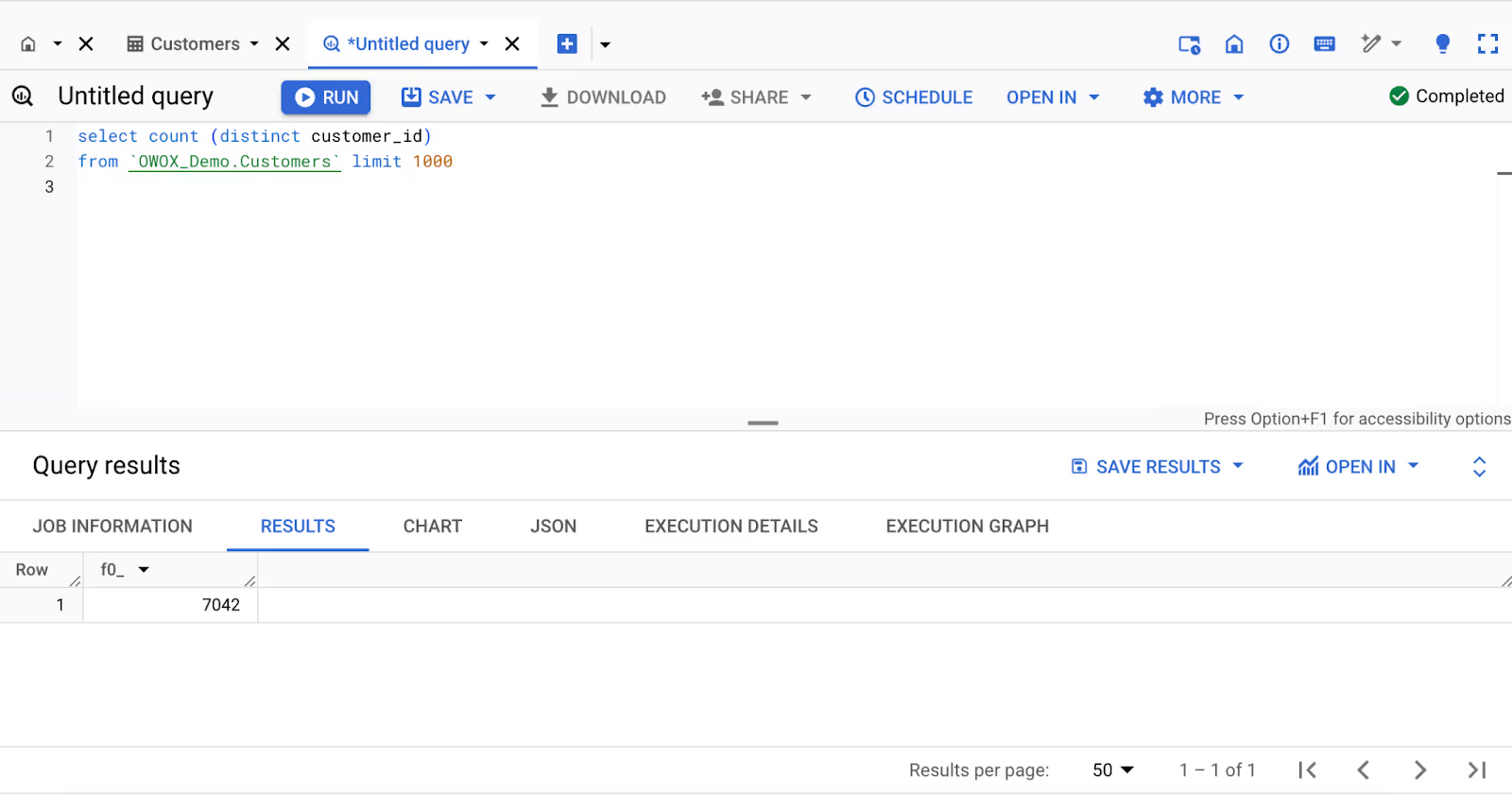

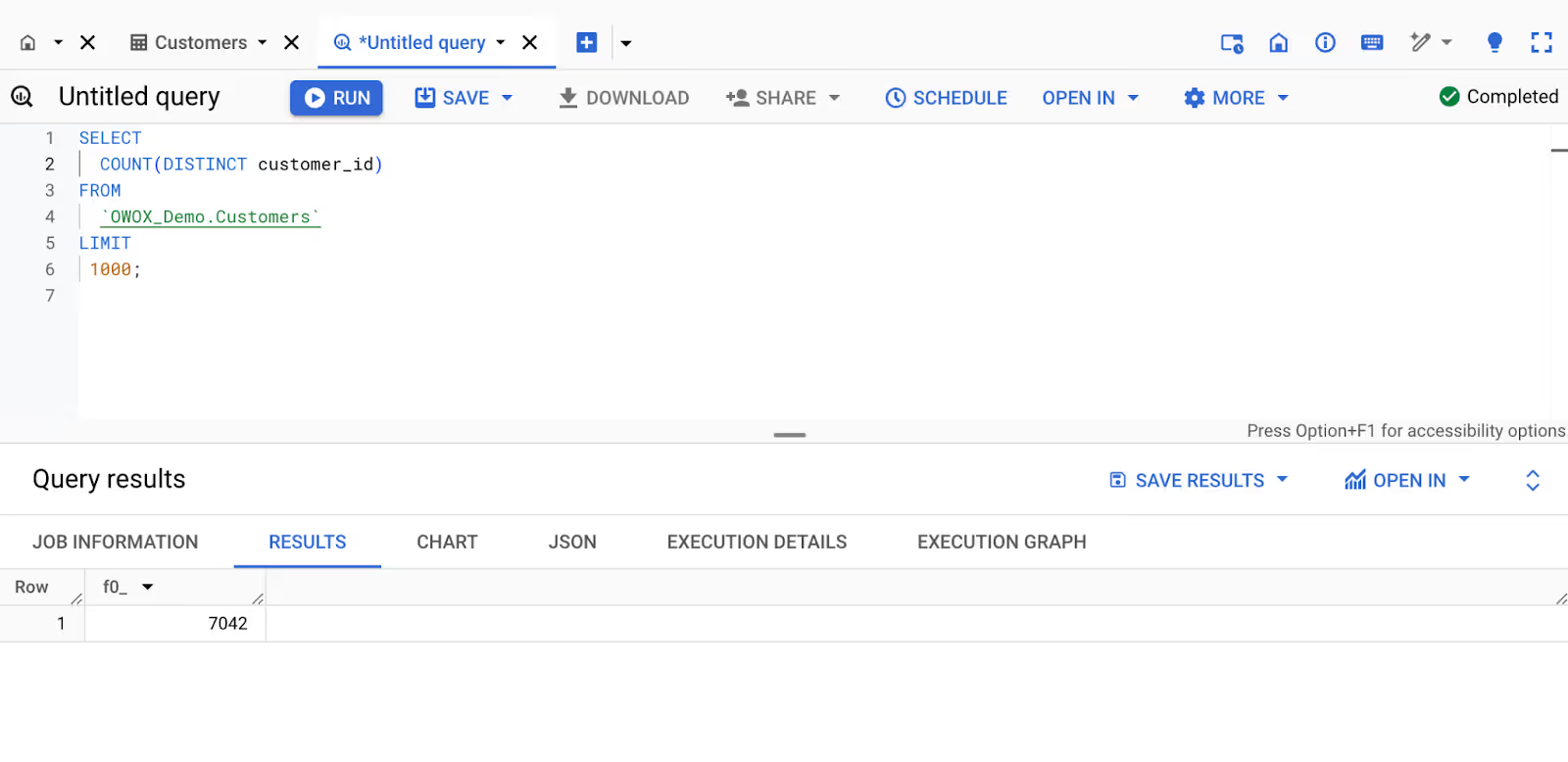

Before formatting:

1select count (distinct customer_id)

2from `OWOX_Demo.Customers` limit 1000

After formatting:

1SELECT

2 COUNT(DISTINCT customer_id)

3FROM

4 `OWOX_Demo.Customers`

5LIMIT

6 1000;

Apply formatting with:

Breaking your queries into logical stages can improve readability and maintainability:

Use indents under comments to clearly define each stage and its sub-steps, making it easier for others to follow the logic.

Comments provide a critical context for your code. Every major operation or transformation should include a comment that explains its purpose.

For example:

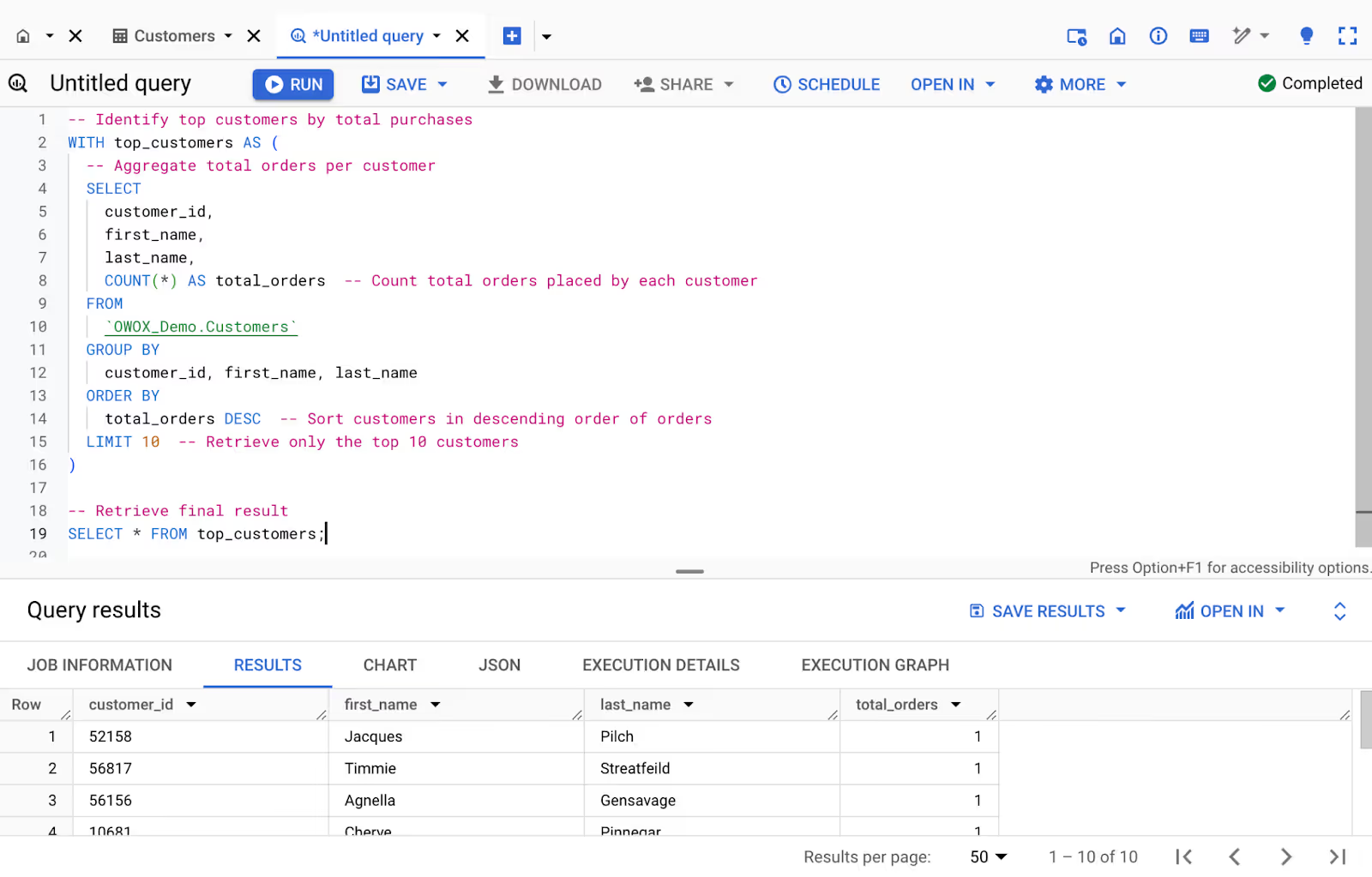

1-- Identify top customers by total purchases

2WITH top_customers AS (

3 -- Aggregate total orders per customer

4 SELECT

5 customer_id,

6 first_name,

7 last_name,

8 COUNT(*) AS total_orders -- Count total orders placed by each customer

9 FROM

10 `OWOX_Demo.Customers`

11 GROUP BY

12 customer_id, first_name, last_name

13 ORDER BY

14 total_orders DESC -- Sort customers in descending order of orders

15 LIMIT 10 -- Retrieve only the top 10 customers

16)

17

18-- Retrieve final result

19SELECT * FROM top_customers;

This practice helps team members and future collaborators quickly grasp the intent behind your code.

Common Table Expressions (CTEs) are an essential tool for structuring SQL queries in a clear and modular way. By breaking down complex queries into smaller, more manageable parts, CTEs enhance readability and make debugging significantly easier. A CTE acts like a temporary table that exists only for the duration of the query, allowing you to reuse the logic across different parts of the same query.

For example, instead of writing repetitive subqueries, you can define a CTE to simplify the workflow:

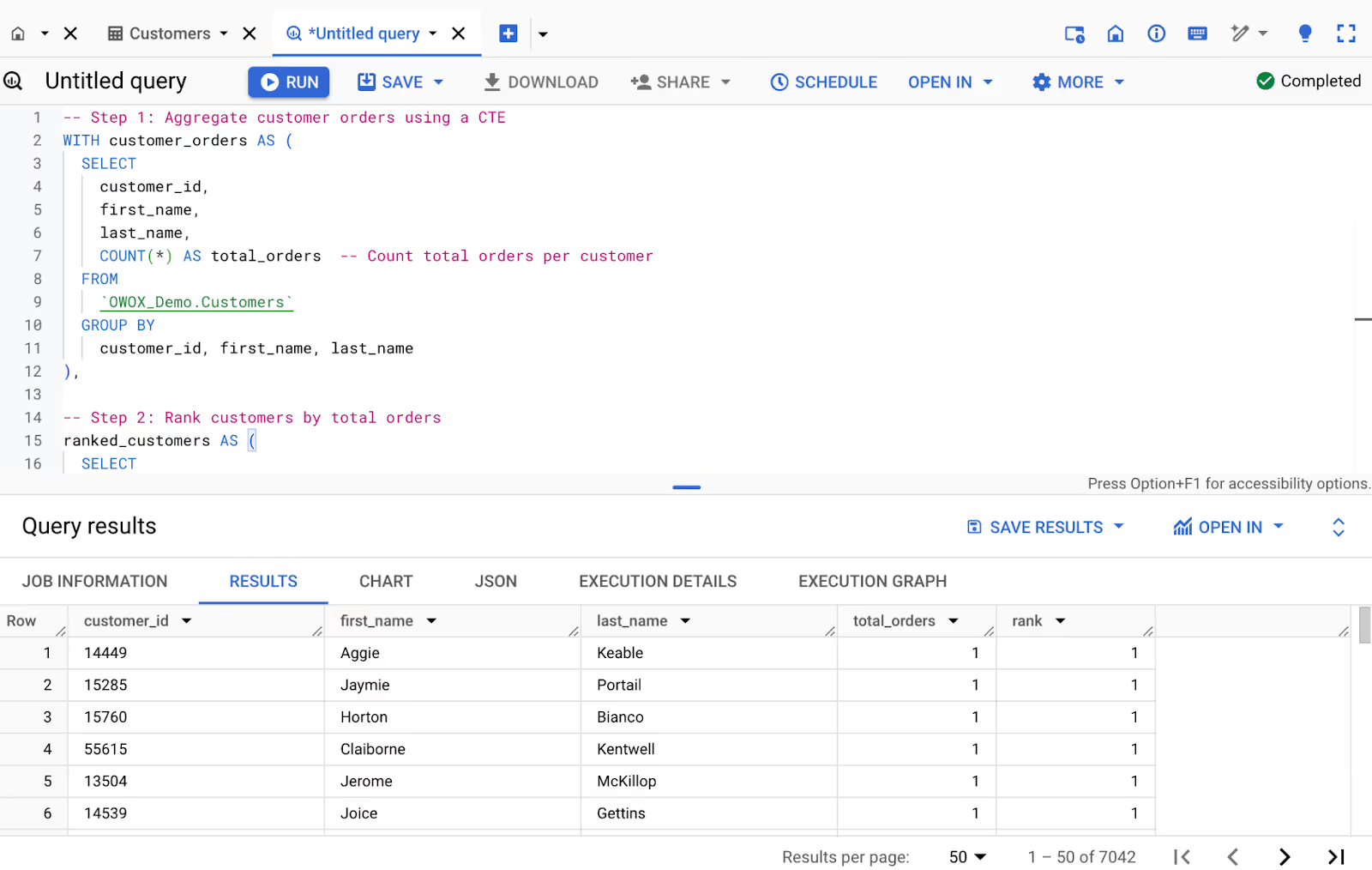

1-- Step 1: Aggregate customer orders using a CTE

2WITH customer_orders AS (

3 SELECT

4 customer_id,

5 first_name,

6 last_name,

7 COUNT(*) AS total_orders -- Count total orders per customer

8 FROM

9 `OWOX_Demo.Customers`

10 GROUP BY

11 customer_id, first_name, last_name

12),

13

14-- Step 2: Rank customers by total orders

15ranked_customers AS (

16 SELECT

17 customer_id,

18 first_name,

19 last_name,

20 total_orders,

21 RANK() OVER (ORDER BY total_orders DESC) AS rank -- Assign rank based on order count

22 FROM

23 customer_orders

24)

25

26-- Step 3: Retrieve the top 10 customers

27SELECT

28 customer_id,

29 first_name,

30 last_name,

31 total_orders,

32 rank

33FROM

34 ranked_customers

35WHERE

36 rank <= 10; -- Filter to show only the top 10 customers

This query uses CTEs for clarity and efficiency. customer_orders groups data to calculate total orders per customer, while ranked_customers applies RANK() to sort them by order count. The final query retrieves the top 10 customers based on their ranking.

CTEs also support recursive queries, enabling advanced use cases like hierarchical data exploration. Using CTEs effectively can significantly enhance your SQL development process, ensuring cleaner and more maintainable code.

Regularly running and testing your SQL queries is critical for maintaining accuracy and ensuring robust data analysis. By executing code incrementally, you can validate each step of your logic and quickly identify potential errors or inefficiencies. For example, start by running smaller sections of your query, such as filtering data or joining tables, to confirm intermediate results are as expected.

This approach reduces the risk of later cascading errors and streamlines debugging, as issues can be isolated to specific query segments. Frequent execution is critical in dynamic environments like BigQuery, where queries often involve complex data transformations or external data sources.

Implement best practices to enhance performance:

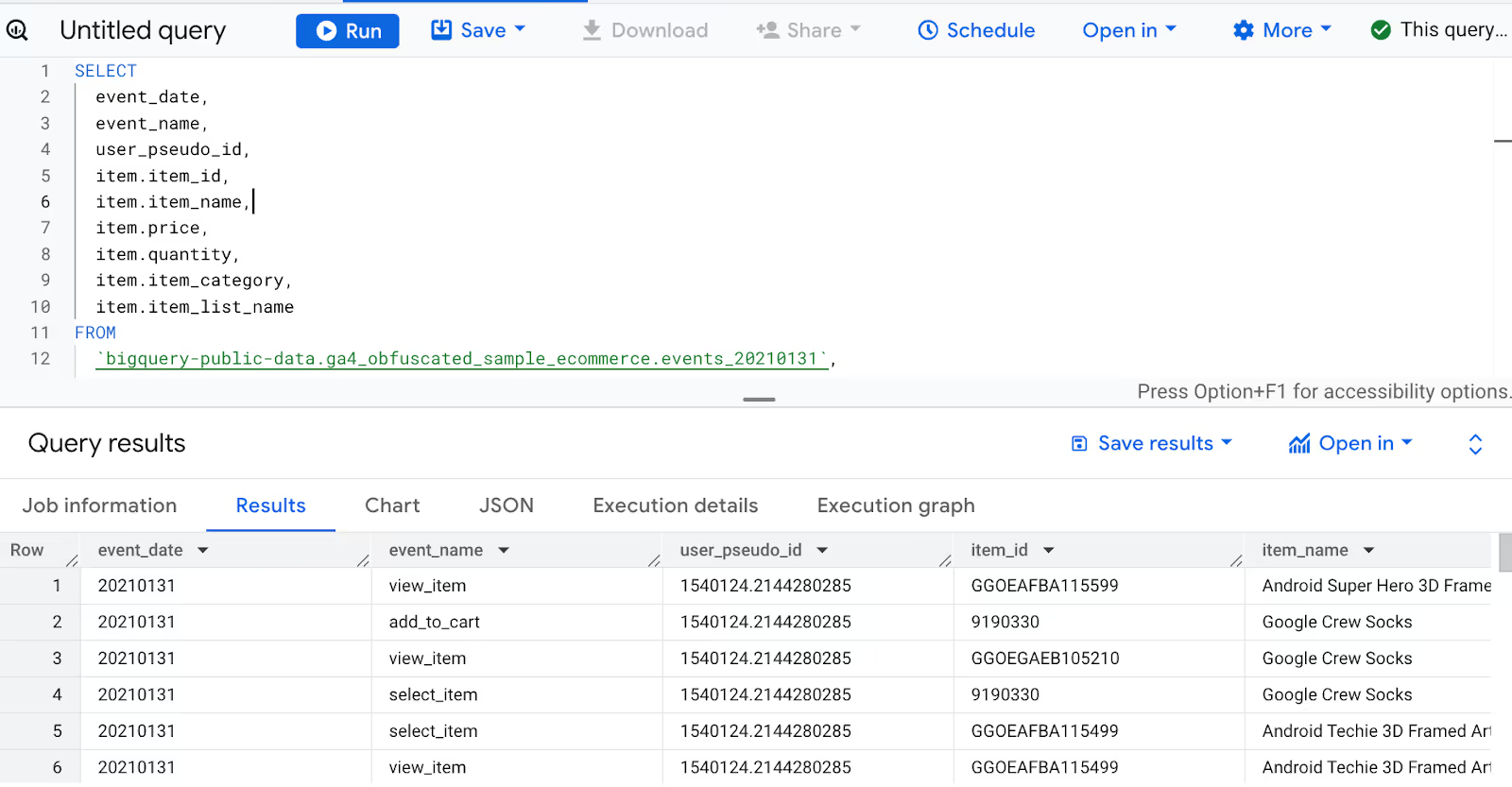

Take advantage of BigQuery’s UNNEST and STRUCT features to manage hierarchical data effectively. Nested data, while powerful, can complicate queries if not handled correctly.

Using the UNNEST function allows you to break down arrays into manageable rows for easier processing. Similarly, STRUCT helps maintain logical groupings of related fields, enabling cleaner and more efficient data handling.

For example:

1 SELECT

2 event_date,

3 event_name,

4 user_pseudo_id,

5 item.item_id,

6 item.item_name,

7 item.price,

8 item.quantity,

9 item.item_category,

10 item.item_list_name

11FROM

12 `bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_20210131`,

13 UNNEST(items) AS item

14WHERE

15 ARRAY_LENGTH(items) > 0

16 AND item.item_id IS NOT NULL

17LIMIT 50;

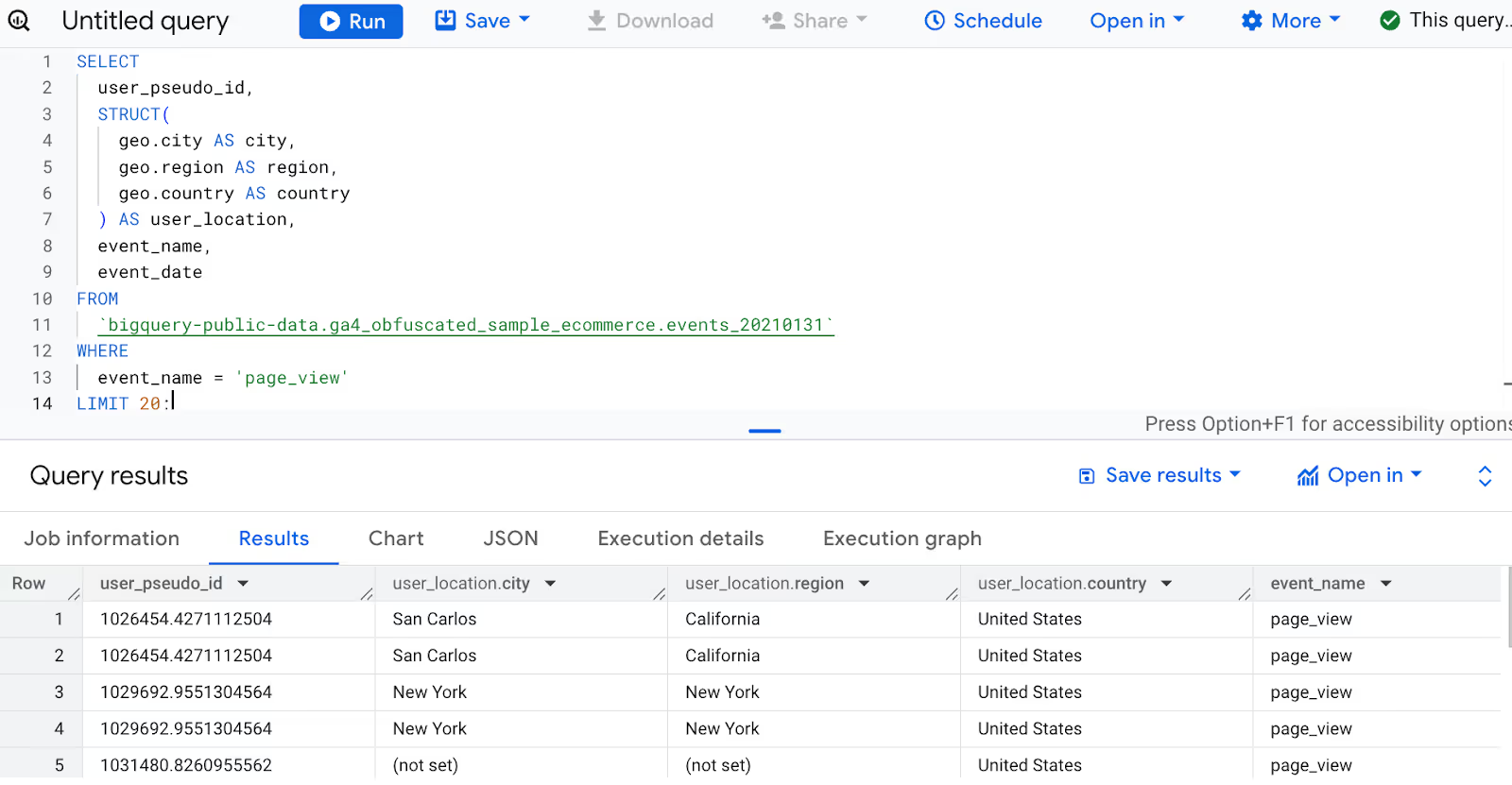

Example:

1SELECT

2 user_pseudo_id,

3 STRUCT(

4 geo.city AS city,

5 geo.region AS region,

6 geo.country AS country

7 ) AS user_location,

8 event_name,

9 event_date

10FROM

11 `bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_20210131`

12WHERE

13 event_name = 'page_view'

14LIMIT 20;

Ensuring proper handling of these fields simplifies downstream analysis, reduces redundancy, and maintains data integrity. This approach not only improves the immediate quality of your SQL code but also ensures long-term maintainability and collaboration success.

Clear and accurate queries require consistent application of syntax rules to improve readability and reduce errors. Proper formatting makes complex queries easier to debug and collaborate on, especially in team environments.

Use consistent indentation for each query level to separate logical sections visually. For instance, subqueries should have their brackets placed on separate lines, and the contents inside should be indented to distinguish them clearly.

Moreover, collapsing semantic blocks into groups enhances clarity. By structuring your SQL code into well-defined sections, such as SELECT statements, JOIN operations, and WHERE clauses, you make the intent and flow of the query more apparent to anyone reviewing it. This approach minimizes misinterpretation and ensures long-term maintainability.

Organizing code effectively is crucial for maintaining clarity and efficiency, especially when working on large and complex SQL queries.

Regular code reviews are an essential practice for maintaining high-quality SQL code. By scheduling periodic reviews, teams can ensure that all queries adhere to established coding standards, enhancing performance and readability.

During these reviews, focus on identifying redundant code, ensuring proper use of functions, and verifying compliance with naming conventions and formatting rules. Reviews also allow collaborative learning, as team members can share insights and propose optimizations for complex queries.

A unified SQL style guide ensures consistency, readability, and efficiency across all queries within your team or organization. Such a guide should cover key aspects of SQL development, including formatting rules, naming conventions, and query optimization techniques.

Begin by using descriptive and concise names for tables, columns, and aliases to make the purpose of each component immediately understandable. Consistent naming conventions, such as snake_case or camelCase, should be applied uniformly throughout your query to ensure readability.

By establishing clear standards, teams can reduce ambiguity and improve collaboration, primarily when multiple contributors work on the same project.

GoogleSQL is a powerful tool for advanced data analysis in BigQuery, designed to simplify working with complex datasets. By using its features effectively, you can improve query performance and make data processing tasks easier.

These features help manage nested fields, handle arrays, and perform dynamic calculations. Learning to apply these functionalities ensures your SQL code remains clear, efficient, and adaptable for future needs.

Window functions, or analytic functions, calculate values over a defined set of rows and return a single result for each row within that set. Unlike aggregate functions, which return a single result for an entire group of rows, window functions retain the individual row context while computing results. This makes them highly useful for computing rankings, running totals, moving averages, and cumulative sums.

At the core of a window function is the OVER clause, which defines the "window" of rows for each calculation. The OVER clause allows you to specify the partitioning and ordering rules determining how the function operates on the data.

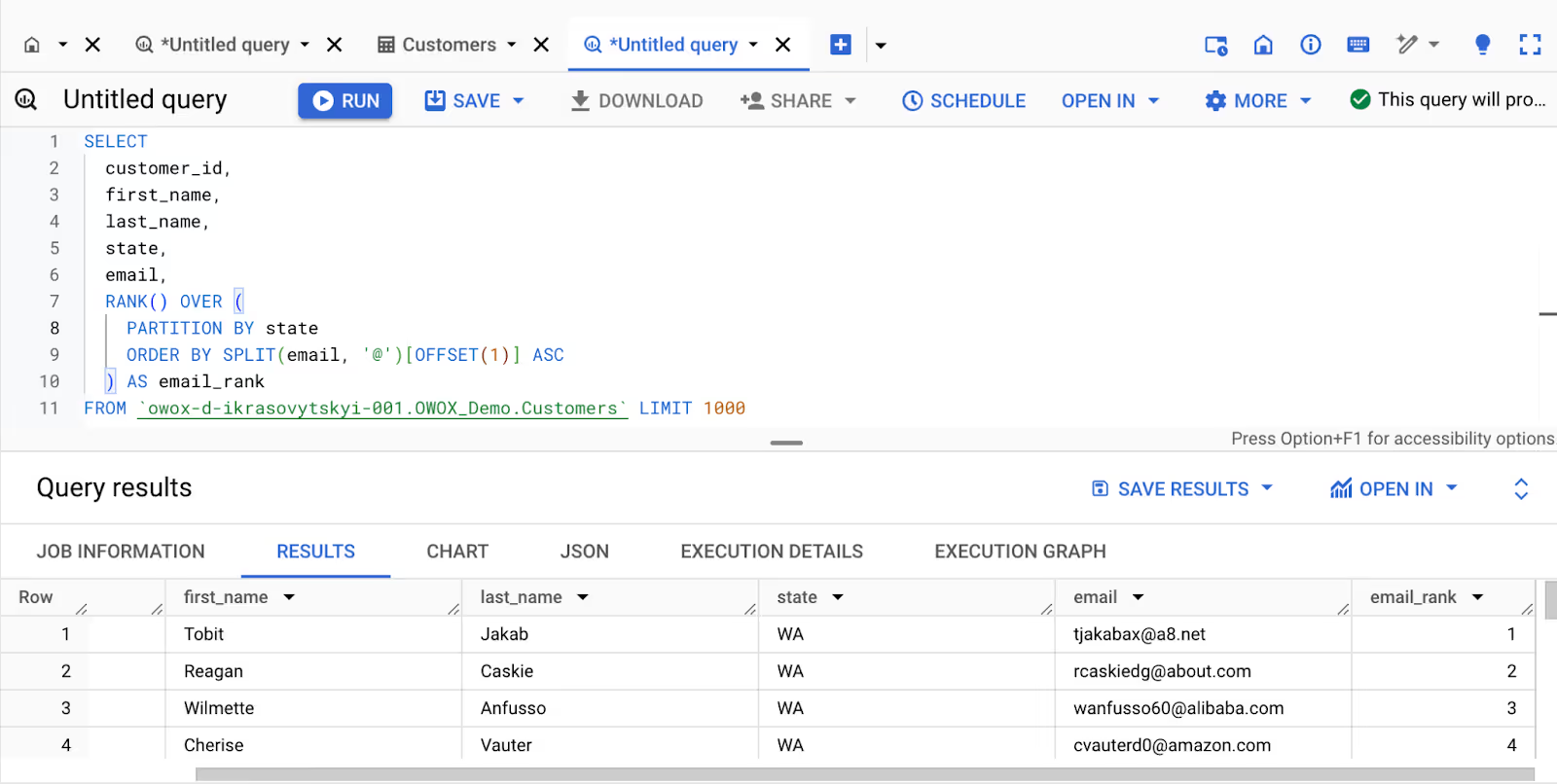

Imagine you want to analyze customers based on their email domains within each state. For example, in California (CA), customers with "gmail.com" emails should appear before those with "yahoo.com" emails. The query first groups customers by state, so rankings reset for each state.

Then, it orders customers alphabetically by their email domain. Finally, the RANK() function assigns a number to each customer, indicating their position within that state’s email domain ranking.

1SELECT

2 customer_id,

3 first_name,

4 last_name,

5 state,

6 email,

7 RANK() OVER (

8 PARTITION BY state

9 ORDER BY SPLIT(email, '@')[OFFSET(1)] ASC

10 ) AS email_rank

11FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Customers` LIMIT 1000

By using window functions, you can perform complex calculations directly within your queries, reducing the need for multiple intermediate steps or post-query data processing. These functions enable dynamic data analysis and are especially powerful when working with large datasets in BigQuery.

In GoogleSQL for BigQuery, an array is an ordered list of zero or more values of the same data type. Arrays can be constructed from simple data types, such as INT64, or complex data types, such as STRUCTs. However, arrays of arrays are not supported.

To work with arrays effectively, you can create array literals, build arrays from subqueries using the ARRAY function, or aggregate values into an array using the ARRAY_AGG function.

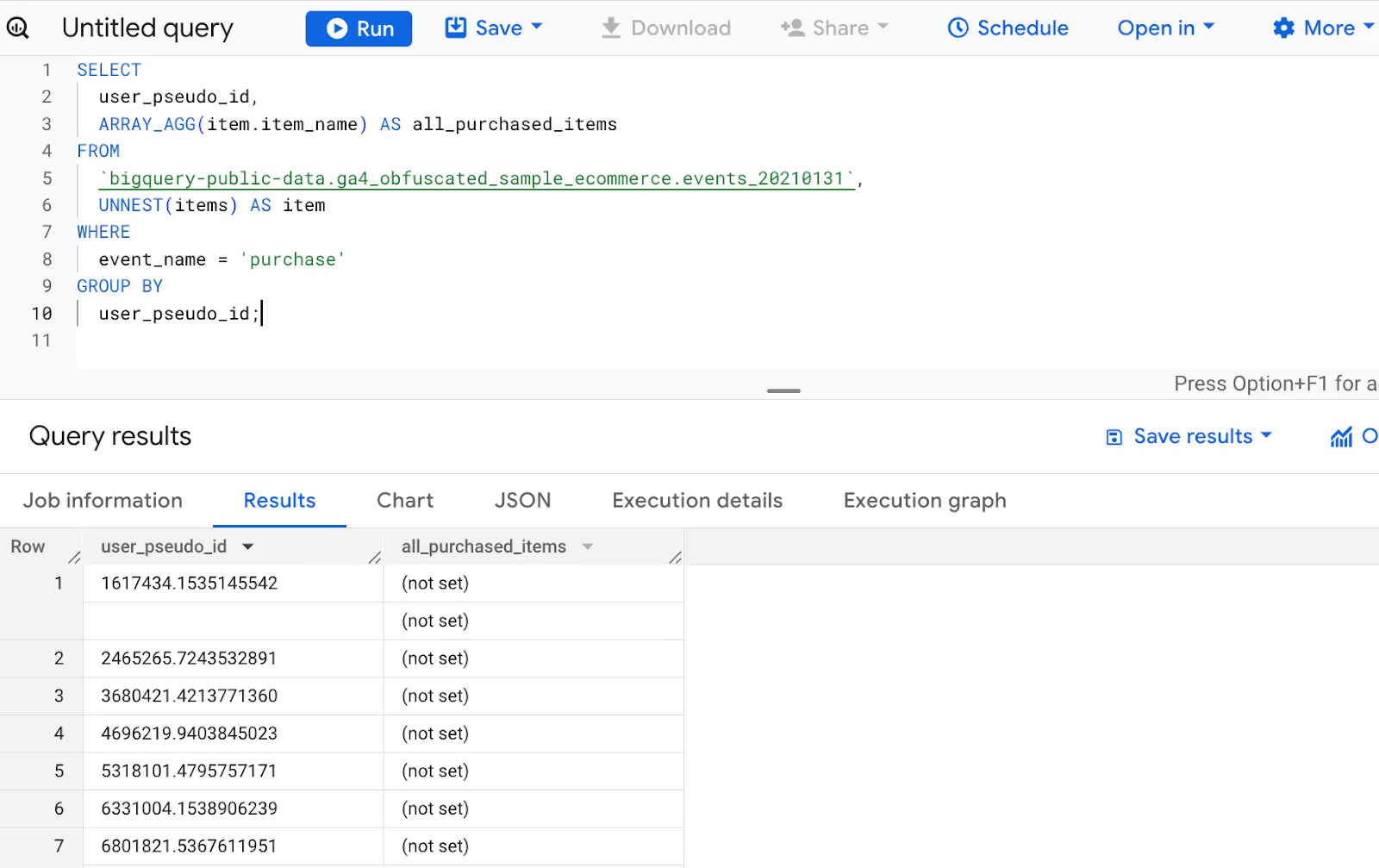

Suppose you're analyzing GA4 ecommerce events and want to group all purchased item names per user into a single array. ARRAY_AGG() helps collect item-level data for user-level insights.

1SELECT

2 user_pseudo_id,

3 ARRAY_AGG(item.item_name) AS all_purchased_items

4FROM

5 `bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_20210131`,

6 UNNEST(items) AS item

7WHERE

8 event_name = 'purchase'

9GROUP BY

10 user_pseudo_id;

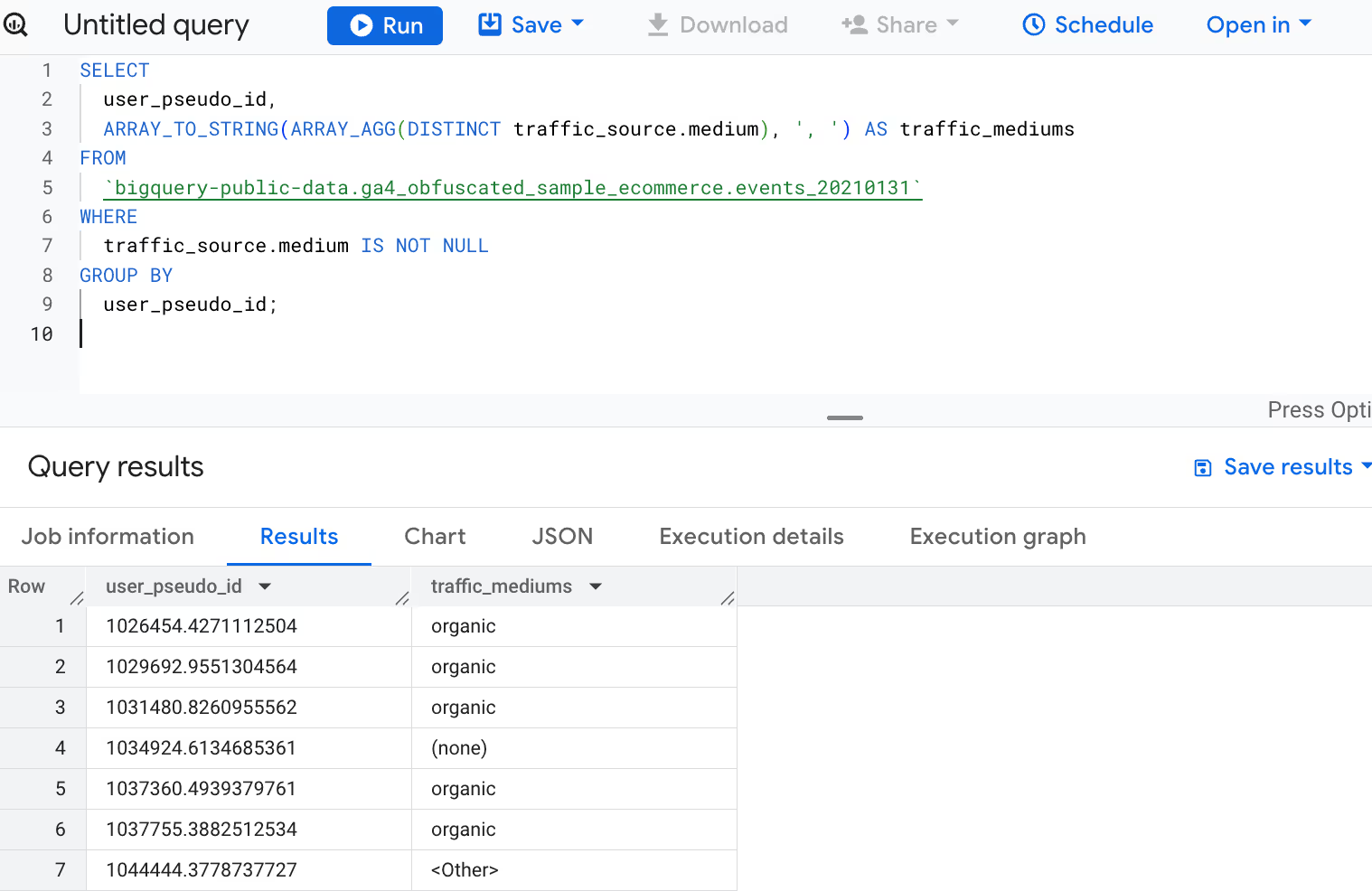

Additionally, arrays can be combined using functions like ARRAY_CONCAT(), and they can be converted to strings using ARRAY_TO_STRING(). For example, you want to list all traffic mediums used by each user across multiple GA4 events. ARRAY_AGG() and ARRAY_TO_STRING() let you condense these into a readable string per user.

1SELECT

2 user_pseudo_id,

3 ARRAY_TO_STRING(ARRAY_AGG(DISTINCT traffic_source.medium), ', ') AS traffic_mediums

4FROM

5 `bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_20210131`

6WHERE

7 traffic_source.medium IS NOT NULL

8GROUP BY

9 user_pseudo_id;

GoogleSQL for BigQuery supports a range of timestamp functions that precisely handle time-based data. It’s essential to understand how timestamps are stored and displayed, and how time zones impact conversions between these formats. For more details, refer to the documentation on how time zones work with timestamp functions.

These functions are designed to work within the defined DATE and TIMESTAMP range. Note that exceeding these ranges results in a runtime error. Properly using these functions ensures accurate time-based computations and prevents potential issues with data overflow.

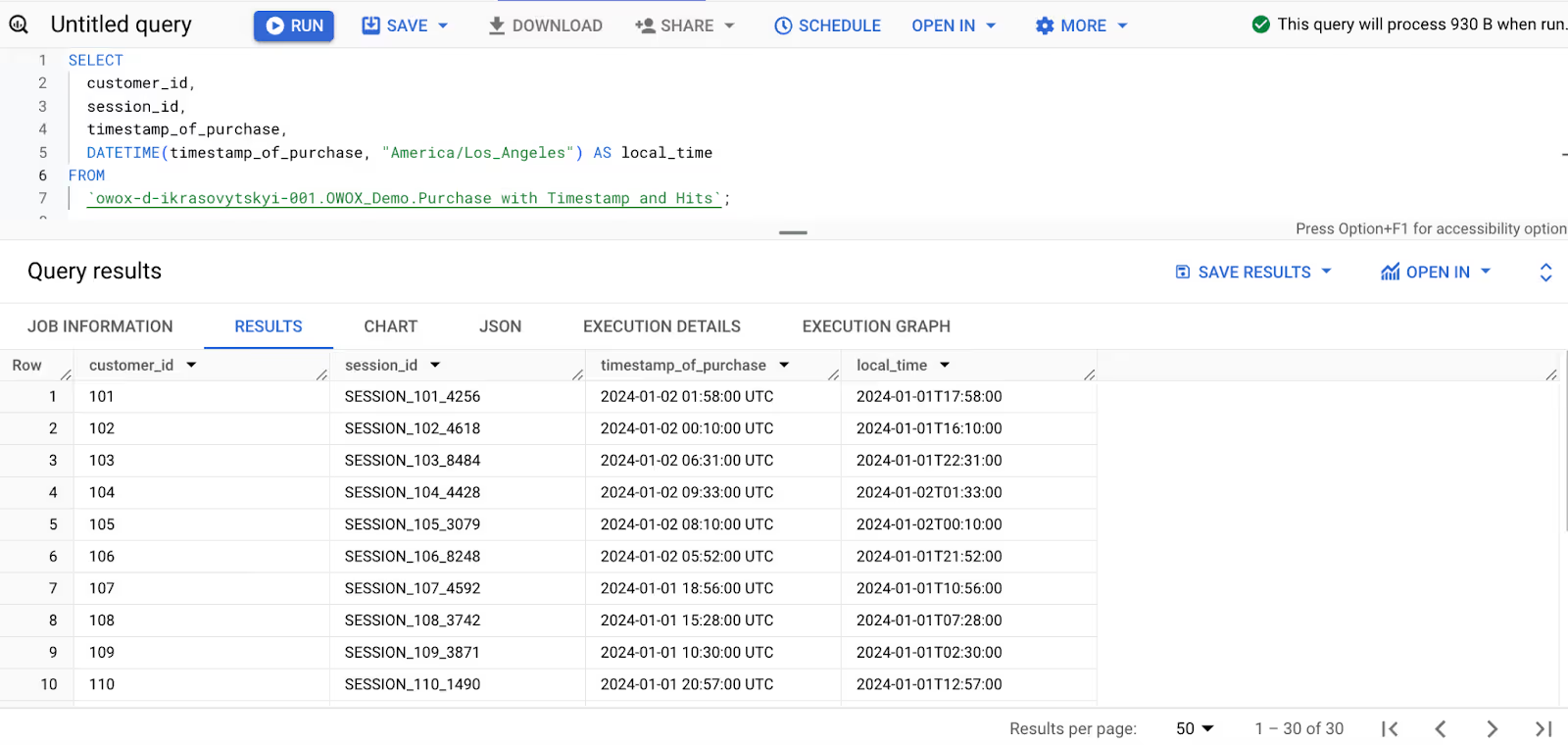

For example, to convert a specific datetime to a given time zone, you can use:

1SELECT

2 customer_id,

3 session_id,

4 timestamp_of_purchase,

5 DATETIME(timestamp_of_purchase, "America/Los_Angeles") AS local_time

6FROM

7 `owox-d-ikrasovytskyi-001.OWOX_Demo.Purchase with Timestamp and Hits`;

This query ensures the timestamp is localized correctly for the specified time zone, allowing precise and meaningful data analysis.

In GoogleSQL for BigQuery, the UNNEST operator is essential for working with nested fields. It takes an array as input and returns a table with one row for each element in the array.

However, it’s important to note that when the UNNEST operator is applied, records at the level where the array is contained are repeated for every row generated from the array. Additionally, if the array is empty, no records will appear in the query results, including the parent record.

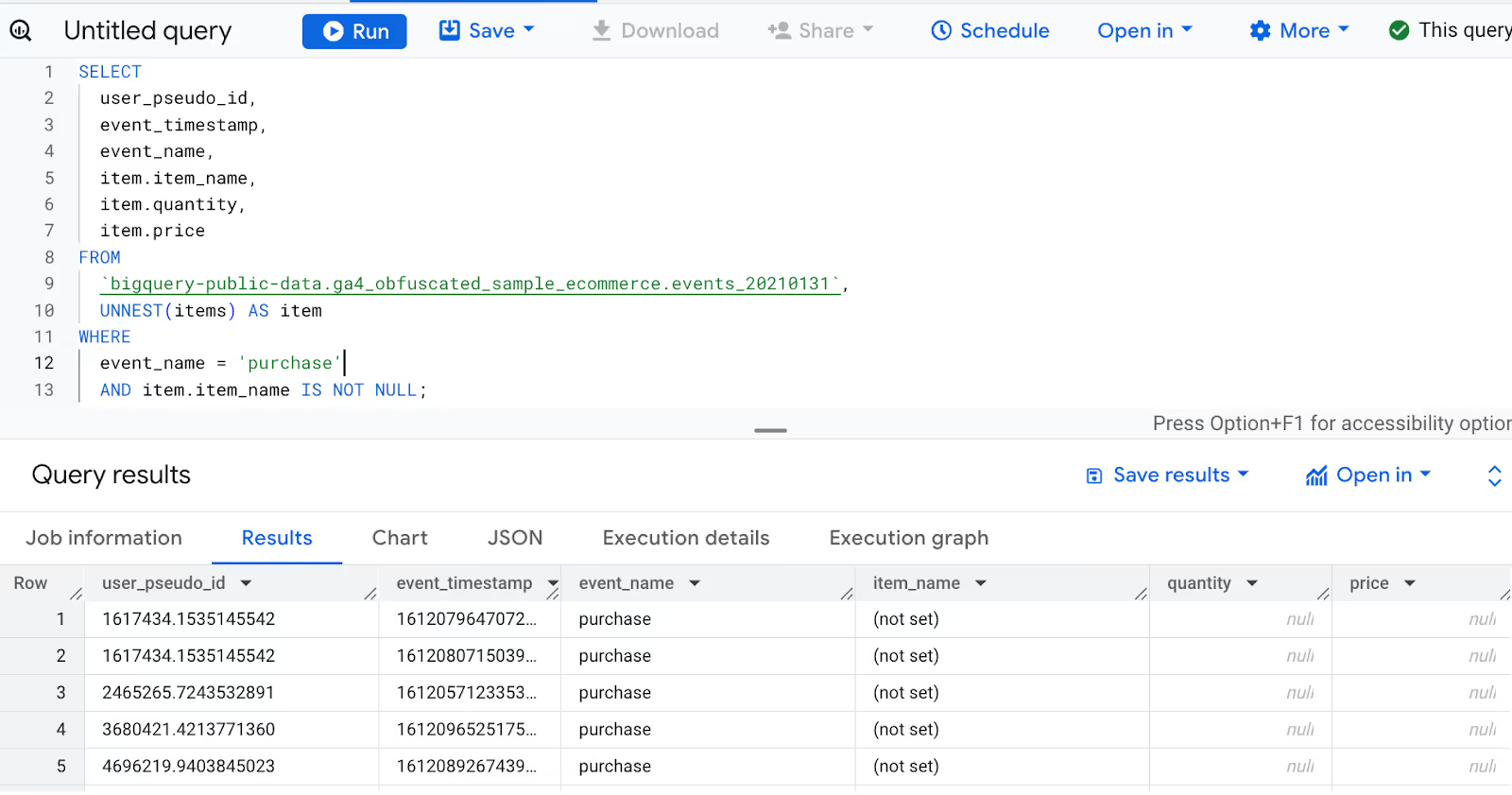

Suppose you are working with session-level data where each session contains multiple nested hits (like page views, events, and transactions). To analyze each hit individually, you use UNNEST() to flatten the nested structure.

For example:

1SELECT

2 user_pseudo_id,

3 event_timestamp,

4 event_name,

5 item.item_name,

6 item.quantity,

7 item.price

8FROM

9 `bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_20210131`,

10 UNNEST(items) AS item

11WHERE

12 event_name = 'purchase'

13 AND item.item_name IS NOT NULL;

Nested structures are ideal for representing hierarchical data in a more compact format. They allow for:

While nested structures are advantageous for storage and representation, they require expertise to handle effectively, particularly when creating arrays from flat tables. Analysts often start using the UNNEST command to convert nested data into a flat structure, but there are scenarios where retaining the nested format is more efficient and illustrative.

Understanding how and when to use nested structures and the UNNEST operator ensures optimal handling of hierarchical data in BigQuery.

The OWOX BigQuery Data Marts is a powerful tool that enhances your ability to analyze and report on business data directly from BigQuery.

Whether you’re analyzing campaign performance or building in-depth business data reports, the OWOX BigQuery Data Marts provides a comprehensive suite of tools to create a collection of SQL Queries - Data Marts that business users would be able to run themselves and make your work more efficient and impactful.

By using its pre-built templates and robust features, you can transform raw data into actionable insights with ease.

BigQuery code standards refer to best practices for writing SQL scripts that are efficient, readable, and maintainable. These include proper formatting, consistent naming conventions, and optimized query structures to enhance collaboration and performance.

Consistent naming is key. Use snake_case for datasets, tables, and columns. Names should be descriptive but concise, avoiding reserved keywords or special characters. Prefix tables with meaningful identifiers for better organization.

Tools like dbt and SQLFluff help enforce coding standards by linting and formatting SQL. Visual Studio Code and JetBrains DataGrip also offer extensions to assist in maintaining clean and consistent code.

Organize queries with proper indentation and meaningful aliases. Use Common Table Expressions (CTEs) for complex logic, write comments to explain steps, and break down long queries into smaller, logical blocks.

UDFs allow you to define reusable logic in SQL. To use them effectively, ensure they are modular, well-documented, and optimized. Test UDFs for performance, and avoid using them for operations better handled by native SQL functions.

Yes, BigQuery integrates seamlessly with tools like Looker and Data Studio for visualization, dbt for transformation workflows, and Cloud Functions or APIs for automation, enabling better analytics and collaboration.

Query caching improves efficiency by storing the results of previously executed queries for 24 hours. If the same query is rerun, cached results are used, reducing costs and speeding up execution without reprocessing data.

BigQuery’s features, such as nested and repeated fields, require clear structuring of queries and efficient joins. Following standards ensures data processing remains scalable while maintaining readability and performance.