Ever tried exporting or importing CSVs in BigQuery and hit unexpected limits or confusing errors? Whether you're a data analyst downloading results for reporting or an engineer automating exports with Python, handling CSVs in BigQuery isn’t always as simple as it looks.

.png)

In this guide, you’ll learn step-by-step how to export query results and full tables to CSV, upload or load CSV files into BigQuery, and even automate the entire workflow. We’ll also cover limitations you should know, common errors to avoid, and smarter ways to handle exports using tools like OWOX BigQuery Data Marts.

BigQuery provides several methods for exporting tables and query results to CSV format. Users can choose the approach that best fits their workflow, whether they need a quick manual export or are building automated pipelines using scripts or APIs.

The Google Cloud Console gives you a simple interface to export BigQuery table data to Cloud Storage in just a few clicks. You can choose the file format, set compression, and pick where to save the file.

Steps to Export a Table to CSV:

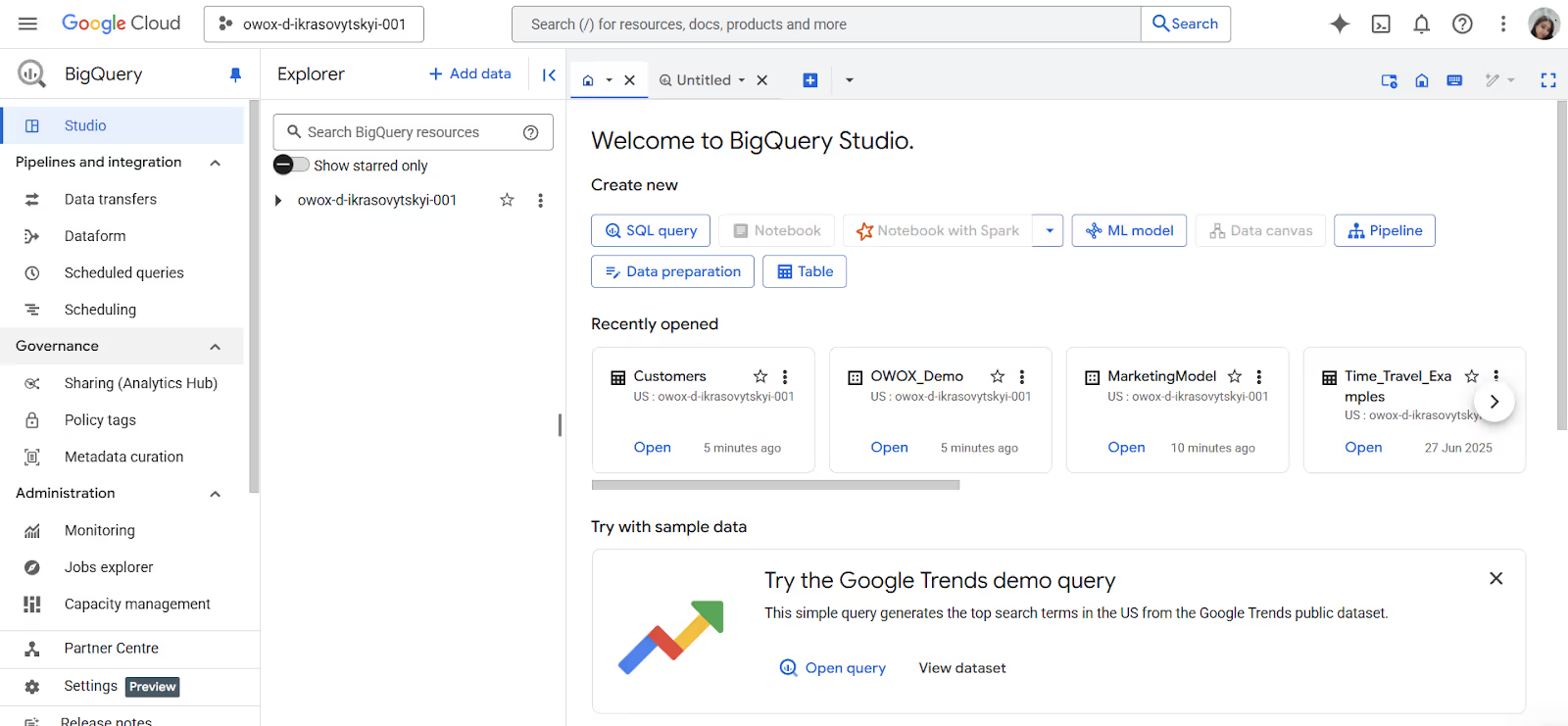

1. Open the BigQuery Console

Head to the BigQuery page in the Google Cloud Console.

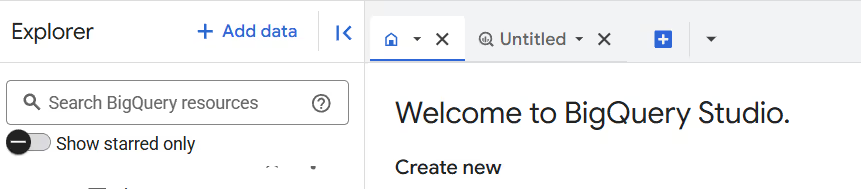

2. Select Your Table

In the Explorer panel, expand your project and dataset. Click on the table you want to export.

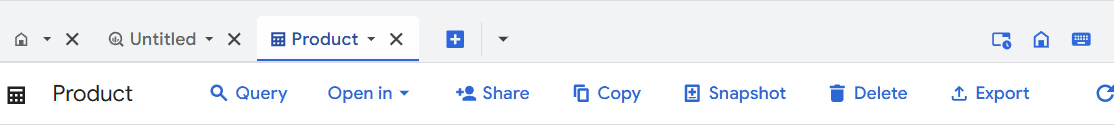

3. Click on export

In the Table Details panel, click the Export button, then select Export to GCS (Google Cloud Storage).

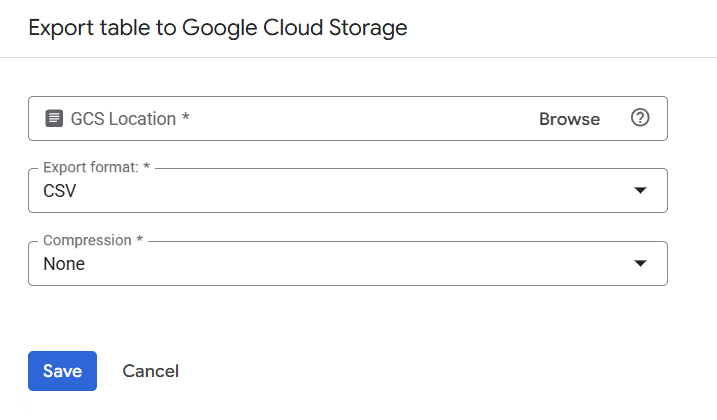

4. Set Destination, File Format, and Compression

5. Start the Export

Click Save to begin the process. Once completed, the CSV file will be available in your specified Cloud Storage path.

6. Download the File (Optional)

Navigate to the Cloud Storage bucket, locate your file, and click the download icon to obtain a local copy.

The bq extract command is part of the BigQuery CLI and is ideal for users who want more control over how data is exported. While not as visual as the Cloud Console, this method is highly efficient for scripting, automation, and recurring exports.

Steps to Export a Table Using bq extract:

Run the bq extract Command with Required Flags.

Here’s the full syntax of the command:

bq --location=LOCATION extract \

--destination_format=FORMAT \

--compression=COMPRESSION_TYPE \

--field_delimiter=DELIMITER \

--print_header=BOOLEAN \

PROJECT_ID:DATASET.TABLE \

gs://BUCKET/FILENAME.csv

Example Export Command:

Here’s a sample command that exports the mytable table in GZIP-compressed CSV format to a GCS bucket:

bq extract \

--compression=GZIP \

--destination_format=CSV \

'my-project:maindataset.mytable' \

gs://bucket-one/onefile.csv

gsutil cp gs://bucket-one/onefile.csv /your/local/folder/

If you're building automated data workflows, exporting BigQuery tables to CSV using client libraries is a powerful option. It supports languages such as Python, Java, Node.js, and others.

It works with languages like Python, Java, Node.js, and more, making it a great fit for teams that are already working with code. While it takes a bit more setup at first, it gives you full control over how and when exports run, especially if you're using scheduling tools or cloud functions as part of a larger workflow.

Steps to Export a Table Using the Python Client Library:

pip install google-cloud-bigquery

Define the project, dataset, table, and destination path:

from google.cloud import bigquery

client = bigquery.Client()

project = "project-one"

dataset_id = "one"

table_id = "onefile"

bucket_name = "bucket-one"

destination_uri = f"gs://{bucket_name}/onefile.csv"

dataset_ref = bigquery.DatasetReference(project, dataset_id)

table_ref = dataset_ref.table(table_id)

extract_job = client.extract_table(

table_ref,

destination_uri,

location="US" # Must match the table's location

)

extract_job.result() # Waits for job to finish

print(f"Exported {project}:{dataset_id}.{table_id} to {destination_uri}")

If you don’t want to load CSV data into BigQuery, you can create an external table that points to a file stored in Google Cloud Storage.

This lets you query the data directly, just like a native BigQuery table, without consuming additional storage. It's a useful option for working with large or static datasets, reducing duplication and improving flexibility.

Steps to Create an External Table from a CSV File in Cloud Storage:

Using external tables in BigQuery lets you query CSV files in Cloud Storage without loading them into BigQuery. It’s a cost-effective way to work with large datasets while keeping your storage lean and flexible.

While exporting CSVs from BigQuery is straightforward, there are key limitations that can affect file size, structure, and consistency. Understanding these constraints helps you avoid errors within your workflow.

BigQuery limits each exported CSV file to a maximum size of 1 GB. If your table exceeds this, you must use a wildcard in the destination URI (e.g., your_file_*.csv) to split the export into multiple files. This ensures large datasets are handled correctly without job failure due to size constraints.

When using wildcard URIs to split exports, BigQuery automatically shards the data, often producing files of uneven size. Some files may approach or exceed 1 GB in size, depending on the data distribution. Additionally, each export job is limited to a maximum of 500 wildcard URIs, which can restrict control when exporting very large datasets into precisely segmented files.

The CSV format does not support nested or repeated fields, which are common in complex datasets such as event logs or Google Analytics exports. If your table includes arrays or structs, you’ll need to flatten the data using functions like UNNEST before exporting. For preserving structure, consider exporting to formats like JSON, Avro, or Parquet instead.

BigQuery does not support exporting data from multiple tables in a single export job. Each export task must target only one table at a time. If you need to export several tables, you’ll have to run separate export jobs for each, either manually or through automation using scripts, scheduled queries, or orchestration tools.

By default, BigQuery does not guarantee the order of rows in exported CSV files. If row order is important, you must use the EXPORT DATA SQL statement along with an ORDER BY clause in your query. This ensures consistent output, especially for time-series data, paginated reports, or any downstream process that depends on row sequencing.

When exporting data using the Google Cloud Console, GZIP is the only available compression option. If you need alternative compression types, such as DEFLATE or NONE, you must use the bq command-line tool or client libraries. This limitation can impact workflows that rely on specific compression formats for downstream tools or integrations outside the Google Cloud environment.

BigQuery export jobs operate on a snapshot of the table taken at the moment the job starts. Any new data inserted or updated during the export process will not be included in the output. To capture the most recent changes, you must start a new export job after the additional data has been loaded.

While exporting CSVs from BigQuery, users may encounter errors that stop or corrupt the process. Here are common issues and practical fixes to keep your exports smooth and reliable.

⚠️ Error: This error happens when BigQuery doesn’t have the right to write to the specified Cloud Storage bucket. It’s usually due to missing IAM permissions for the service account running the export job.

✅ Fix: Grant the roles/storage.objectCreator role to the BigQuery service account or user. This allows BigQuery to write files to the bucket and complete the export successfully. Make sure the bucket exists and matches the dataset’s region.

⚠️Error: If you rely on default export settings without specifying --field_delimiter or --print_header, BigQuery may generate CSVs with incorrect column separators or missing headers. This can disrupt downstream processes that rely on a consistent structure.

✅ Fix: Explicitly define the --field_delimiter and use --print_header=true when exporting. These options ensure your CSV has the expected format and structure, especially when used in automated workflows or imported into spreadsheets and external systems.

⚠️ Error: BigQuery may throw an “Invalid value at job.configuration…” error when the CSV structure doesn’t align with the expected schema. This often results from data type mismatches, extra commas, special characters, or inconsistent column counts.

✅ Fix: Check your CSV for malformed rows, hidden characters, or misaligned columns. If needed, clean the data before export. When importing, manually define the schema to avoid relying on potentially inaccurate auto-detection.

⚠️ Error: If your export target path already exists and the Cloud Storage bucket has retention policies enabled, BigQuery cannot overwrite the file. This results in export failures or unexpected storage costs.

✅ Fix: Avoid overwriting locked files by using unique, timestamped filenames for each export. If overwriting is necessary, temporarily disable retention policies or versioning in the bucket settings before running the export job to allow successful file replacement.

For teams that want to automate reporting without giving up control, OWOX Data Marts (Community Edition) offers a forever-free solution. It’s ideal for businesses that value data autonomy and want to manage connectivity, transformation, and reporting on their own terms.

With support for platforms like Snowflake, Databricks, AWS Athena, and Azure Synapse, you can build a flexible, scalable reporting layer. Use semantic models, schedule refreshes, and surface data in Sheets, Looker Studio, or Excel, with no vendor lock-in.

Check out the GitHub repo to get started and explore all features.

No, BigQuery exports must go to Google Cloud Storage. You can then download files manually or use tools like gsutil to copy them locally.

BigQuery automatically splits exports into multiple files based on size and sharding logic. You can export up to 500 files per job using wildcard URIs.

CSV is a flat file format and doesn't support complex data types. You must first flatten or unnest nested/repeated fields before exporting to CSV.

Each exported CSV file is limited to 1 GB. If your table exceeds this limit, BigQuery automatically splits the export into multiple files using wildcards.

Upload the CSV to Google Cloud Storage and create a table using the “Create table” option in BigQuery, either with auto-detect or a defined schema.

Each export job supports up to 1 GB per file and 500 output files. Export limits may also depend on dataset size and storage bucket permissions.

BigQuery itself doesn't export on a schedule. However, you can automate exports using scheduled queries, workflows, or tools like Cloud Functions and OWOX BI Reports.