Ever needed to recover lost data in BigQuery, maybe from a mistaken delete or a pipeline error? BigQuery’s Time Travel feature lets you access earlier versions of your tables without relying on backups or complicated recovery processes. It’s a built-in safety net for tracking changes or restoring data when things go off track.

This article walks through real-world use cases of Time Travel in action. You’ll learn how data analysts, engineers, and compliance teams use it to restore data, debug issues, and meet audit needs, all with practical queries and step-by-step examples.

BigQuery Time Travel is a feature that enables you to access past versions of your data, dating back up to seven days, in case data is accidentally deleted or changed due to human error or process failures. Time Travel helps you recover it without needing a backup.

Instead of losing important information forever, you can write a simple SQL query to view or restore data as it existed at a specific point in time. This makes Time Travel extremely useful for recovery, audits, and troubleshooting.

BigQuery Time Travel isn't just for emergencies; it supports everyday use cases across analytics, compliance, and engineering. Below are real-world scenarios where teams rely on it to maintain data accuracy.

A major e-commerce company accidentally deleted a key BigQuery table that stored user session data, data that fed into dashboards and machine learning models. The loss put critical reporting and analytics at risk.

Thanks to BigQuery Time Travel, the data team was able to roll the table back to its previous state in under 10 minutes. This quick recovery kept operations running without major disruption.

Afterward, the team implemented safeguards, including process checks and team training, to minimize the likelihood of such an incident occurring again.

For high-frequency tables that receive constant updates or streaming inserts, analytics results can vary between runs. To ensure consistency, teams can use BigQuery Time Travel with a fixed timestamp using FOR SYSTEM_TIME AS OF.

This allows queries to return results from a stable snapshot, avoiding discrepancies. It's handy when building dashboards or reports that require consistent outputs during active data changes, without the need to set up complex caching systems.

A global financial services firm used BigQuery to store stock market tick data, which their analysts used to identify unusual patterns and test trading strategies. Before switching to Time Travel, they had to export historical data, a slow and error-prone process that was done manually.

Using BigQuery Time Travel, the team was able to query previous versions of their data right from SQL. It helped them verify changes, avoid rework, and adjust their strategy faster, leading to more reliable insights for their trading decisions.

At a retail analytics company, a table with a 30-day expiration rule was automatically deleted, but the team was still relying on it for a quarterly sales report. The issue wasn’t noticed until queries started failing.

The data engineering team used BigQuery Time Travel to quickly roll back a table to its state just before it expired. This helped avoid reporting delays and was a useful reminder to keep table expiration settings up to date.

A fintech company required a method to track changes in sensitive transaction data for audit purposes. With BigQuery Time Travel, the compliance team was able to review previous versions of the data and track changes over time. That meant they didn’t need to keep separate audit logs, which made reporting simpler and helped ensure everything was traceable for audits, both internal and external.

BigQuery Time Travel isn't just theoretical; it’s built for real use. Below are practical SQL examples that show how to query, recover, and restore data using a consistent table.

Time Travel in BigQuery lets you see a table exactly as it was at a specific point in time. It’s helpful for checking past data without having to restore or change anything.

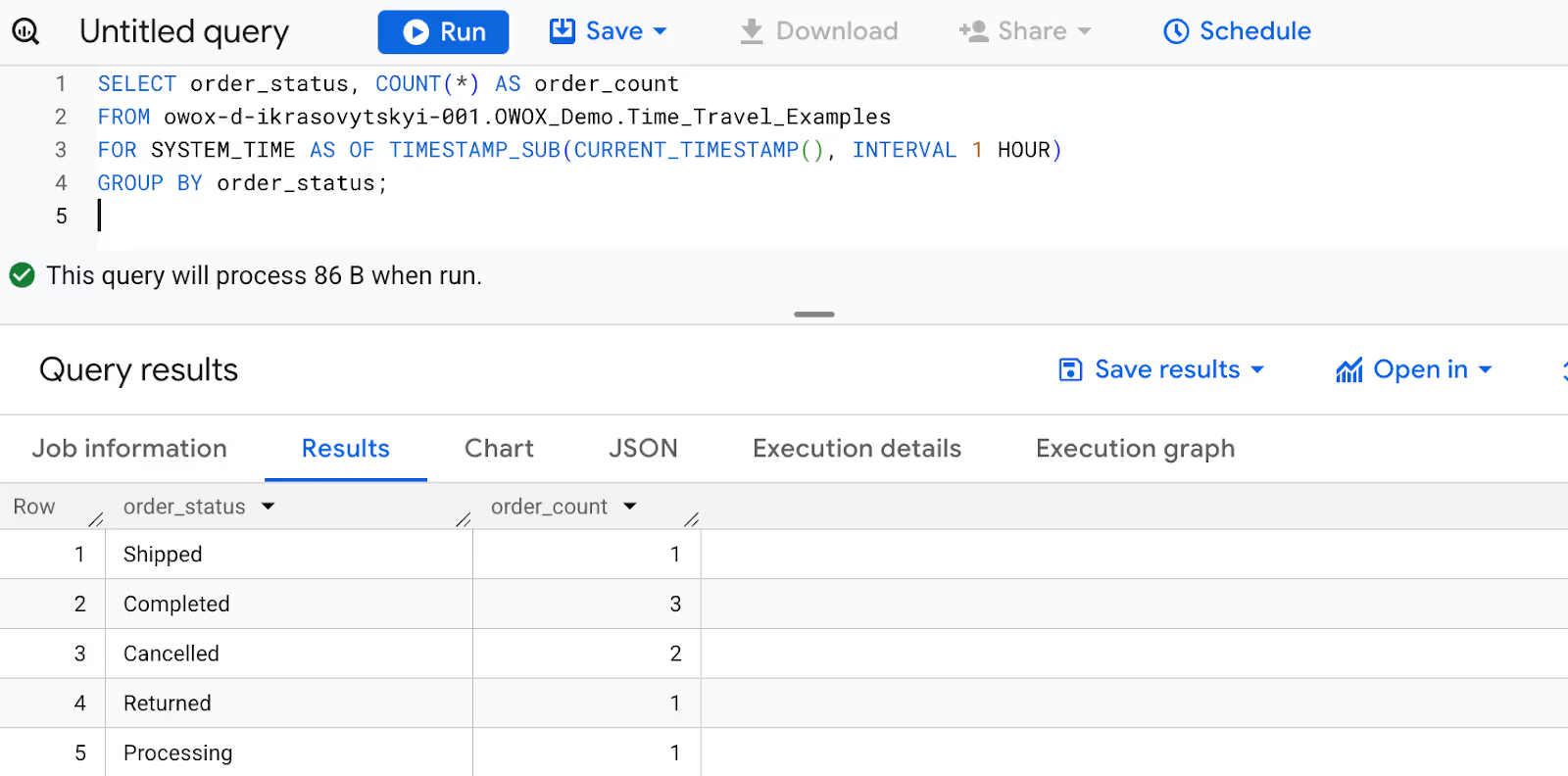

Example:

An analyst spots a sudden spike in cancelled orders on a sales dashboard. To dig into it, they check how the order data looked an hour ago using Time Travel. This helps them figure out if the spike was due to a real business event or if someone accidentally updated the order_status field.

1SELECT order_status, COUNT(*) AS order_count

2FROM owox-d-ikrasovytskyi-001.OWOX_Demo.Time_Travel_Examples

3FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 HOUR)

4GROUP BY order_status;

Here:

This approach is ideal when you want to audit recent changes or verify past data without altering your current table. It’s fast, SQL-native, and doesn’t require recovery workflows.

BigQuery Time Travel lets you view a table as it existed before changes were made. This is useful when someone accidentally updates or modifies data, like changing the order status or total price.

Instead of relying on a backup, you can query the table from a few minutes earlier and recover the correct values within the 7-day Time Travel window.

Example:

Let's assume a teammate mistakenly updated all order_status values to "Processing" in a live table used for fulfillment tracking. This created confusion in reports and operations dashboards.

The analyst needs to check the table’s state from two minutes earlier to identify what the original statuses were and recover the correct values before syncing with downstream tools.

1SELECT order_ID, order_status

2FROM owox-d-ikrasovytskyi-001.OWOX_Demo.Time_Travel_Examples

3FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 2 MINUTE);

Here:

Note: To restore data from an entirely deleted table, you must have the bigquery.admin role. Standard editor or owner roles are not sufficient.

Time Travel provides a quick way to recover from accidental updates without affecting current workflows or relying on backups.

BigQuery Time Travel can be used not only to view historical data, but also to restore an entire table to its earlier version. This is useful when bulk changes, overwrites, or deletes affect critical records, and you need to roll back to a known good state.

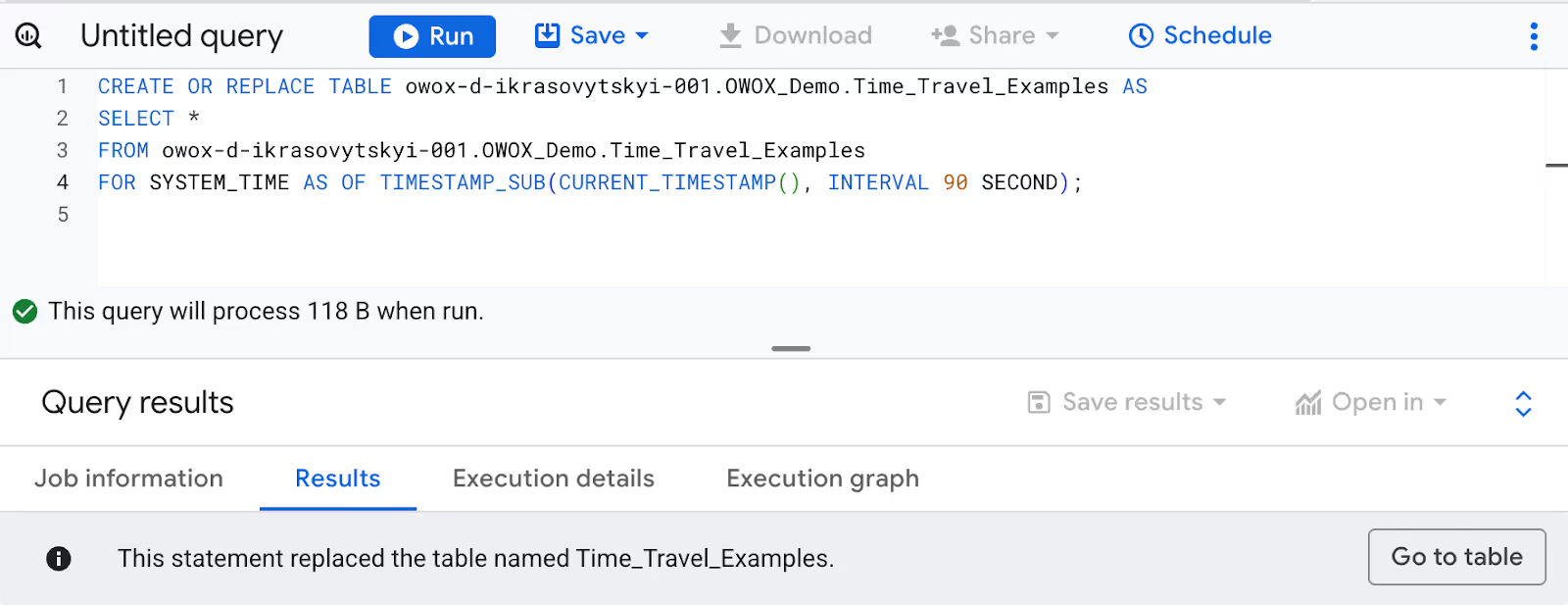

Example:

A table storing recent product orders was accidentally overwritten during a data import. To restore the correct data, the analyst identifies the error timing and reverts the table to its state from 90 seconds ago.

1CREATE OR REPLACE TABLE owox-d-ikrasovytskyi-001.OWOX_Demo.Time_Travel_Examples AS

2SELECT *

3FROM owox-d-ikrasovytskyi-001.OWOX_Demo.Time_Travel_Examples

4FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 90 SECOND);

Here:

Important Note: Restoring a table this way replaces any new data added since the chosen point in time. Always validate the recovery timestamp to avoid losing valid updates.

This approach is a fast, budget-friendly way to restore entire tables, perfect for situations where you need to fix something quickly or roll back large-scale changes.

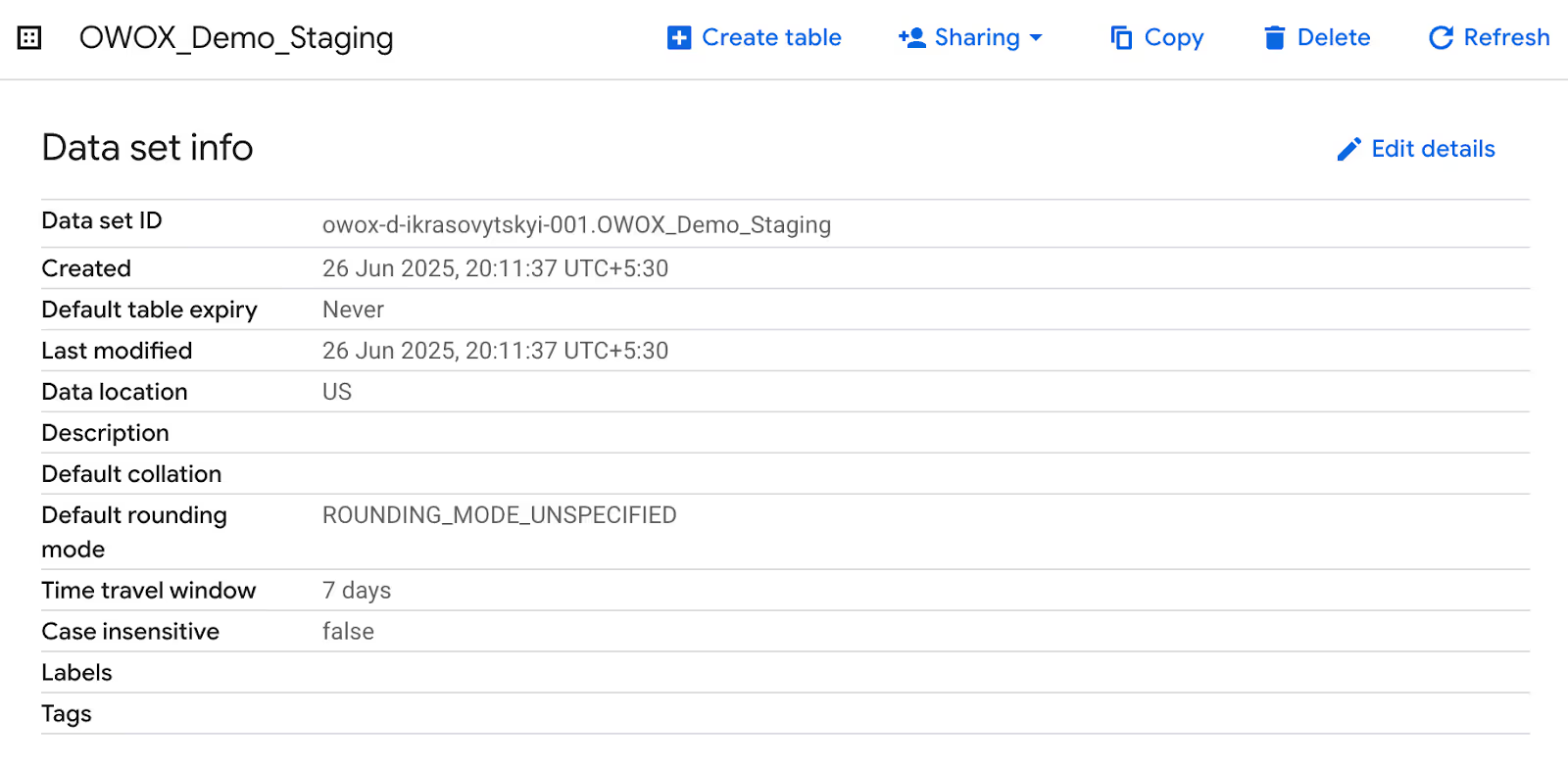

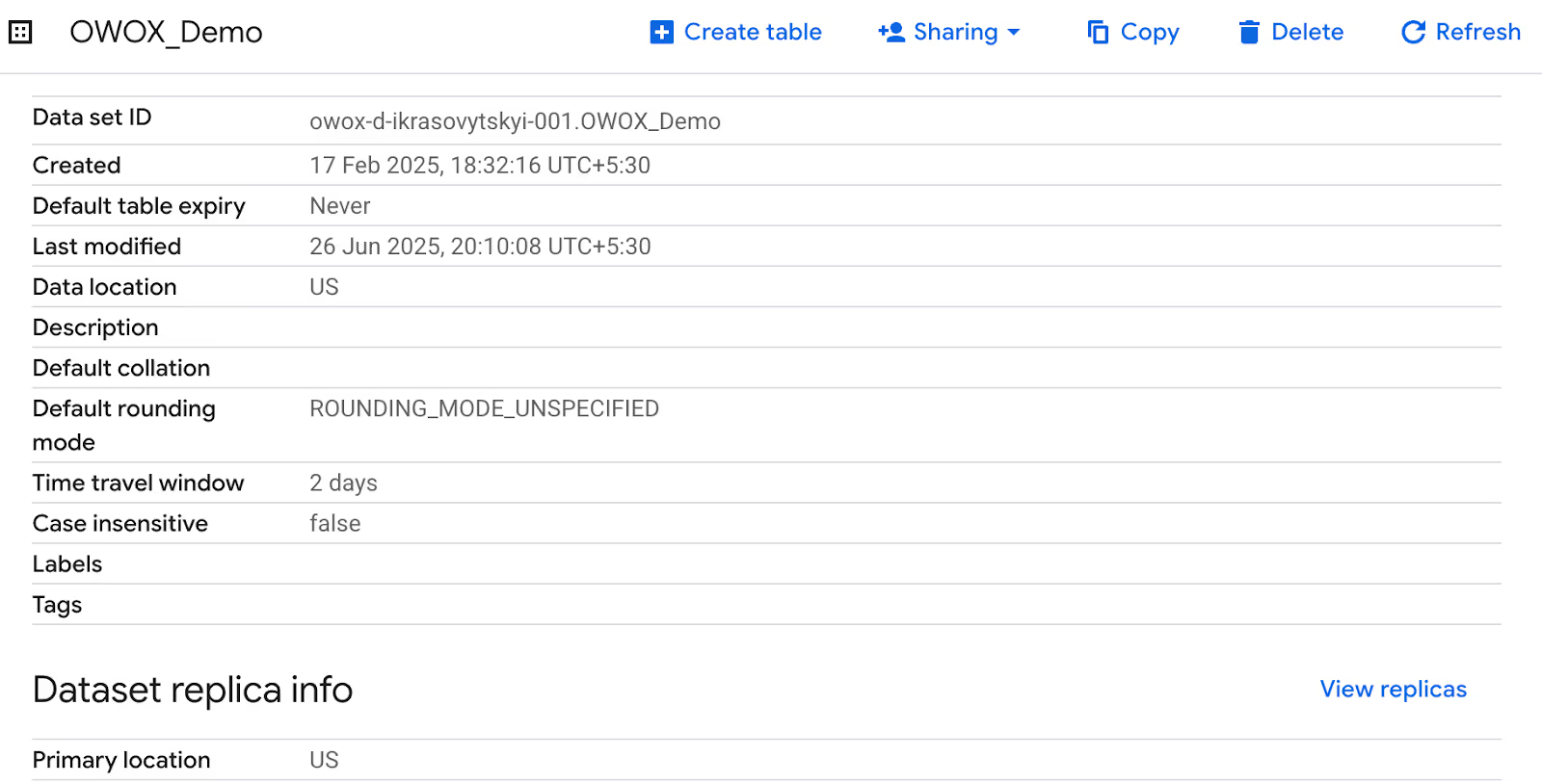

BigQuery allows you to customize the time travel window at the dataset level. By default, it retains historical table data for 7 days (168 hours). However, not all datasets need that full retention.

In cases where you're working with staging, testing, or frequently refreshed data, you can reduce the time travel window to as little as 1 hour.

Example:

The team managing the ProductOrders dataset wants to reduce the time travel window from 7 days to 24 hours. Since the dataset is used for staging transactional data during testing, keeping a shorter history helps minimize unnecessary storage usage.

To set the time travel window at the time of dataset creation:

1CREATE SCHEMA `owox-d-ikrasovytskyi-001.OWOX_Demo_Staging`

2OPTIONS (

3 max_time_travel_hours = 168

4);

To modify the time travel window for an existing dataset:

1ALTER SCHEMA `owox-d-ikrasovytskyi-001.OWOX_Demo`

2SET OPTIONS (

3 max_time_travel_hours = 48

4);

Here:

Shortening the time travel window is a practical step for managing storage in staging or temporary datasets, where long-term history isn’t needed.

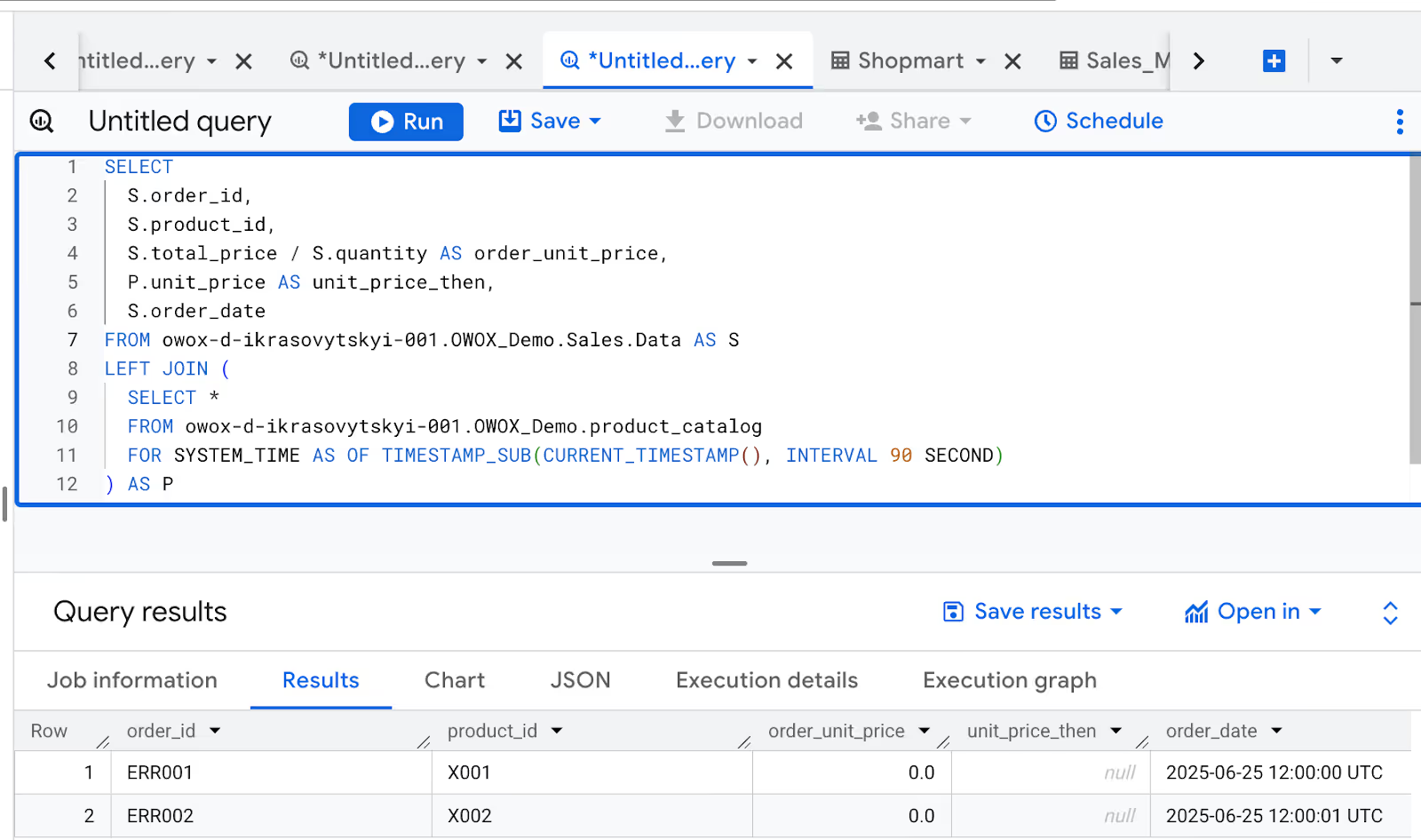

BigQuery lets you use Time Travel in JOINs, so you can query multiple tables as they looked at different points in time. This is especially helpful when you're comparing historical data across related datasets, like orders and product details, without needing to restore or copy anything.

Example:

An analyst wants to compare order records with historical product pricing data as it existed at different times. This helps validate whether changes to product data caused pricing discrepancies in past orders.

1SELECT

2 S.order_id,

3 S.product_id,

4 S.total_price / S.quantity AS order_unit_price,

5 P.unit_price AS unit_price_then,

6 S.order_date

7FROM owox-d-ikrasovytskyi-001.OWOX_Demo.Sales.Data AS S

8LEFT JOIN (

9 SELECT *

10 FROM owox-d-ikrasovytskyi-001.OWOX_Demo.product_catalog

11 FOR SYSTEM_TIME AS OF TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 90 SECOND)

12) AS P

13ON S.product_id = P.product_id;

Here:

This approach enables teams to track how changes in one table (such as product data) may have impacted records in another (like orders), without the need to restore or duplicate datasets.

For teams with strict recovery time objectives (RTO), manual restoration of deleted BigQuery tables might not be fast enough. Automating the process with Google Cloud Functions ensures faster, hands-free recovery within the Time Travel window.

Example Scenario

The team managing the Product Orders wants to automate restoration in case the table is accidentally deleted. They deploy a Cloud Function that listens for deletion events and instantly recovers the table with a timestamped name.

1from google.cloud import bigquery

2

3def restore_deleted_table(data, context):

4 client = bigquery.Client()

5

6 # Extract the deleted table's reference from the Pub/Sub message

7 deleted_table_ref = bigquery.TableReference.from_string(data['resource']['name'])

8

9 # Set restore time to when the deletion event was triggered

10 restore_job_config = bigquery.RestoreTableConfig(

11 source_table_ref=deleted_table_ref,

12 restore_timestamp_ms=context.timestamp

13 )

14

15 # Restore the table with a timestamp suffix

16 restored_table_id = f"{deleted_table_ref.table_id}_{context.timestamp}"

17 restored_table_ref = bigquery.TableReference(deleted_table_ref.dataset_ref, restored_table_id)

18

19 # Launch the restore job

20 restore_job = client.restore_table(restored_table_ref, restore_job_config)

21 restore_job.result()

22

23 print(f"Restored deleted table {deleted_table_ref.path} to {restored_table_ref.path}")

Here:

Automating table recovery reduces response time, eliminates manual steps, and ensures critical datasets, are restored within seconds, greatly minimizing downtime and data loss.

BigQuery Time Travel is a reliable feature, but it’s not without its challenges. Things like missed retention periods or slower-than-expected queries can still happen. Here are some common issues users run into and how to deal with them.

⚠️ Error: Overwriting a table during a quick aggregation or transformation query can wipe out months of detailed data. This disrupts reporting pipelines and causes dashboards connected to the table to fail.

✅ Solution: Always create backups before running overwrite queries. If overwritten, use BigQuery Time Travel to restore the table to its previous state within the 7-day retention window.

⚠️ Error: Trying to recover a deleted or modified table after the 7-day Time Travel window results in permanent data loss. Time Travel cannot access data beyond the configured retention period.

✅ Solution: Set up scheduled backups for critical tables. Use export jobs or snapshot tables to maintain copies beyond the Time Travel limit. Ensure appropriate permissions (e.g., bigquery.admin) are in place for recovery operations.

⚠️ Error: Time Travel queries can slow down significantly when scanning large tables or querying data from several days ago. Reconstructing historical table states increases execution time and resource usage.

✅ Solution: When querying historical data, use partitioned tables to limit how much data is scanned. Apply filters to the partition column and select only the columns you actually need. This helps reduce query time and resource use without adding complexity.

To get real value out of BigQuery’s Time Travel feature, it's important to pair it with smart data management habits. The tips below can help you improve performance, manage storage more effectively, and reduce the risk of issues during recovery.

Define how long you actually need to keep historical data based on your team’s audit, compliance, or analysis needs. BigQuery lets you keep up to 7 days of past data, but in many cases, a shorter window is enough and using only what you need can help save on storage and simplify maintenance.

Make sure your team understands how to use Time Travel correctly, especially when dealing with older data. A few clear, practical guidelines can help prevent issues like accidental deletions or avoidable recovery steps. It also ensures retention settings are set and used properly.

Use partitioning and clustering to improve the performance of Time Travel queries. Partitioning helps limit the scanned data to a specific date or range, while clustering organizes data for faster lookups and analysis. Together, they reduce query costs and make historical data access more efficient and scalable.

Since Time Travel only retains data for up to 7 days, archive older snapshots regularly to Cloud Storage or BigQuery long-term tables. This ensures access to historical data beyond the retention window for audits, reporting, or reprocessing needs, without relying solely on Time Travel.

Keep a versioned changelog of schema updates, transformation logic, and data policies. This helps contextualize historical data when using Time Travel. Tracking metadata changes ensures clarity during audits, simplifies debugging, and allows teams to align past table states with the logic or structure used at that time.

BigQuery offers a solid set of functions that make it easier to transform, analyze, and manage large datasets. They help break down complex tasks, speed up queries, and handle data at scale without a lot of manual work.

The OWOX Reports lets you pull data from BigQuery straight into Google Sheets—no need to write SQL. It’s designed for both analysts and non-technical users, so anyone on your team can explore data, build reports, and apply filters without extra help.

You can update data with a click and keep your reports current without copying and pasting or exporting files. Whether you’re checking performance metrics or building a dashboard, this tool helps you spend less time on busywork and more time getting insights.

Time Travel lets you access previous versions of a table from up to 7 days ago using SQL, enabling data recovery, auditing, and historical analysis.

Use FOR SYSTEM_TIME AS OF in a query to view past data and CREATE TABLE AS SELECT to restore it—within the 7-day Time Travel window.

Yes, by querying a stable snapshot using a fixed timestamp, you can ensure consistent results even as streaming data or frequent updates continue in the background.

Common issues include performance lags in large queries, limited retention (7 days), and failed recovery attempts beyond that window without backup. Admin permissions are also required for specific actions.

Partition and cluster your tables, filter by partition columns, and limit scanned columns. This reduces the data BigQuery needs to process when reconstructing historical table states.

Set clear data retention rules, make sure your team understands them, and automate backups to keep things running smoothly. Use features like Time Travel, partitioning, and metadata tracking to make recovery and audits easier and more reliable.