Dealing with messy JSON in BigQuery? Whether it’s product logs, CRM exports, or deeply nested analytics events, JSON can turn into a time sink fast, unless you know how to work with it efficiently.

That’s where BigQuery’s JSON manipulation functions come in. From appending values to cleaning up nulls, these functions help you reshape data directly in SQL, no extra scripts or ETL steps needed. In this guide, you’ll learn how to use functions to simplify your data workflows and deliver cleaner, more reliable reports.

JSON data manipulation involves modifying the structure or content of JSON data to facilitate easier manipulation and processing. This includes actions like adding new values, updating existing fields, or removing unnecessary elements.

BigQuery makes it easier for analysts and engineers to work with complex, semi-structured data like API responses or event logs directly within SQL. JSON plays a big role here, offering flexibility that traditional structured tables don’t.

JSON is a flexible format that doesn’t require a fixed schema, which makes it a great fit for handling data from APIs, logs, or other sources where the structure can change over time.

BigQuery provides built-in functions to modify JSON data directly within your queries. These functions enable you to update, insert, remove, or clean JSON fields without requiring external processing or manual restructuring.

The JSON_ARRAY_APPEND function in BigQuery adds new elements to the end of an array within a JSON object. It’s commonly used to update fields like product lists, user tags, or event logs dynamically.

The Syntax of JSON_ARRAY_APPEND is:

1JSON_ARRAY_APPEND(json_expr, json_path, value [, append_each_element => TRUE | FALSE])

Here:

This function appends values to arrays while preserving the existing structure.

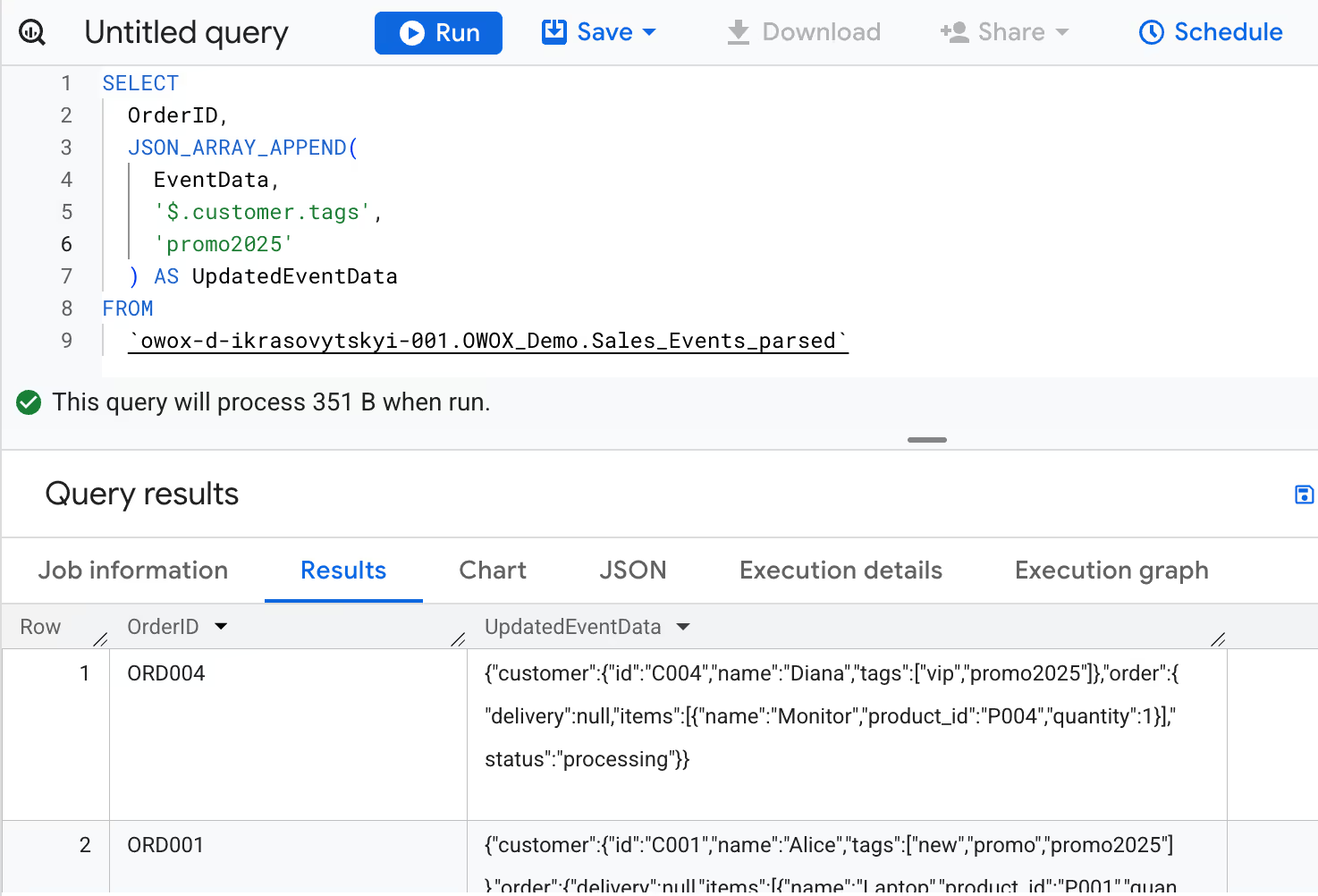

Imagine a marketing team is refining its loyalty program. Alice (Order ID ORD001) is already tagged as both a "new" and "promo" customer. They now want to append a "loyalty" tag to her profile in BigQuery.

1SELECT

2 OrderID,

3 JSON_ARRAY_APPEND(

4 EventData,

5 '$.customer.tags',

6 'promo2025'

7 ) AS UpdatedEventData

8FROM

9 `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

This query adds "loyalty" to the existing customer.tags array. The result becomes ["new", "promo", "loyalty"], enabling the team to identify and prioritize high-potential repeat buyers more effectively.

The JSON_ARRAY_INSERT function is used to insert a new element at a specific position within a JSON array. It’s helpful when order matters, like inserting a product at the right step in a checkout or modifying a predefined list structure.

The Syntax of JSON_ARRAY_INSERT is:

1JSON_ARRAY_INSERT(

2 json_expr,

3 json_path, value

4 [, insert_each_element => TRUE | FALSE]

5)

Here:

This function preserves the array’s original order and shifts existing elements forward.

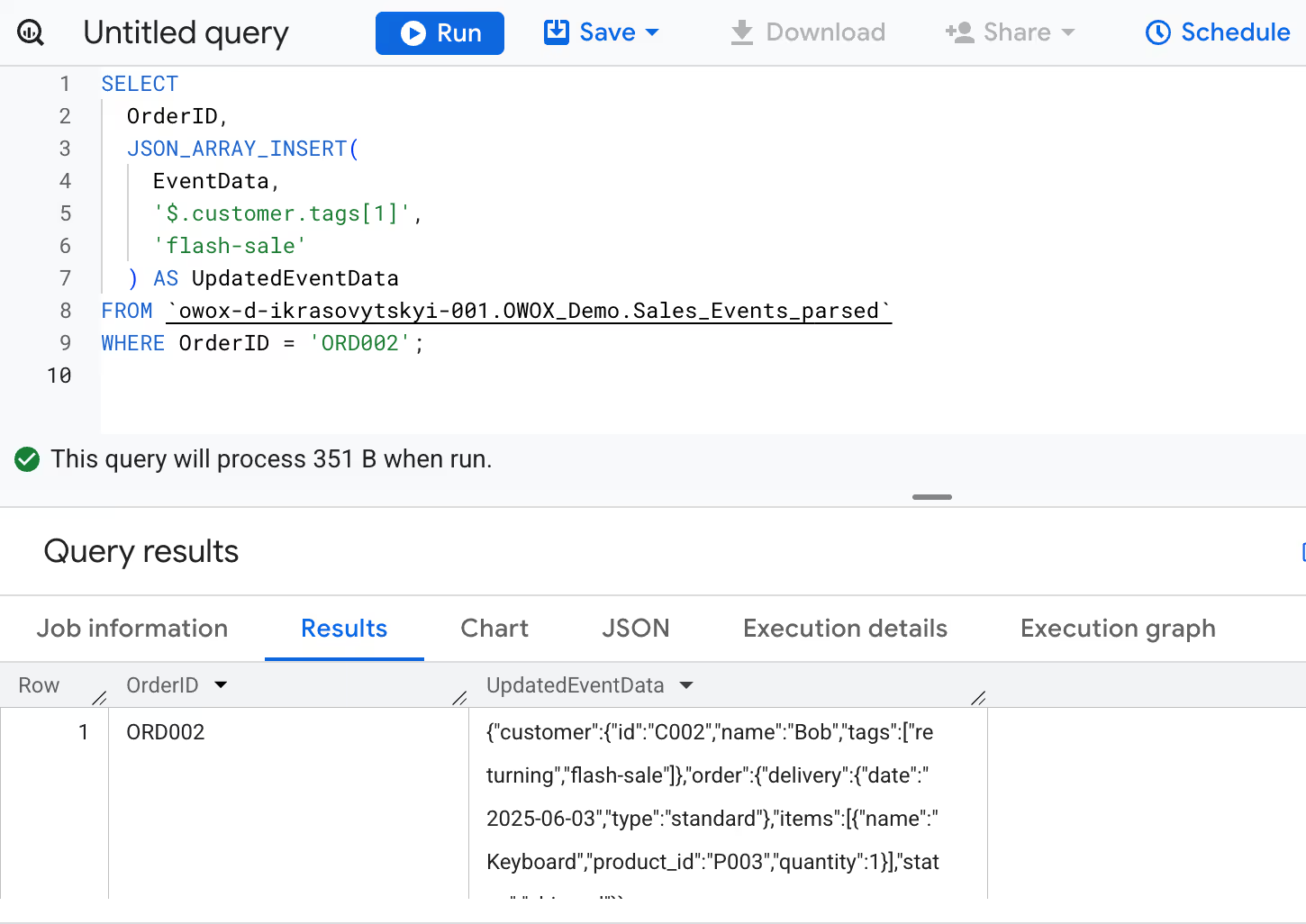

Imagine the marketing team wants to personalize the customer journey for Bob. He is already tagged as "returning", but now they want to insert a "flash-sale" tag right after the first one in his customer.tags array.

1SELECT

2 OrderID,

3 JSON_ARRAY_INSERT(

4 EventData,

5 '$.customer.tags[1]',

6 'flash-sale'

7 ) AS UpdatedEventData

8FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

9WHERE OrderID = 'ORD002';

This inserts "flash-sale" at the second position, updating Bob’s tag array to ["returning", "flash-sale"]. The order reflects his current status and recent campaign exposure more accurately.

The JSON_REMOVE function is used to delete specific fields or elements from a JSON document based on JSONPath expressions. If the path doesn’t exist, it simply skips that path without error, making it safe for optional or inconsistent structures.

The Syntax of JSON_REMOVE is:

1JSON_REMOVE(json_expr, json_path[, ...])

Paths are evaluated in order, and removals can affect the structure for subsequent paths.

Imagine the marketing team decides that the 'delivery' information for another customer, Charlie, is irrelevant, who has canceled their order. They want to remove it from his JSON record in BigQuery.

1SELECT

2 OrderID,

3 JSON_REMOVE(

4 EventData,

5 '$.order.delivery'

6 ) AS UpdatedEventData

7FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

8WHERE OrderID = 'ORD003';

This query removes the delivery key from the order object. Since Charlie’s delivery was already null, removing the field cleans up the data structure for further use.

The JSON_SET function updates an existing field or inserts a new one into a JSON document. It's a flexible function that lets you modify values or create new keys, as long as the path exists or create_if_missing is enabled.

The syntax of JSON_SET is:

1JSON_SET(

2 json_expr,

3 json_path, value

4 [, create_if_missing => TRUE | FALSE]

5)

This function allows controlled editing of structured and nested JSON objects.

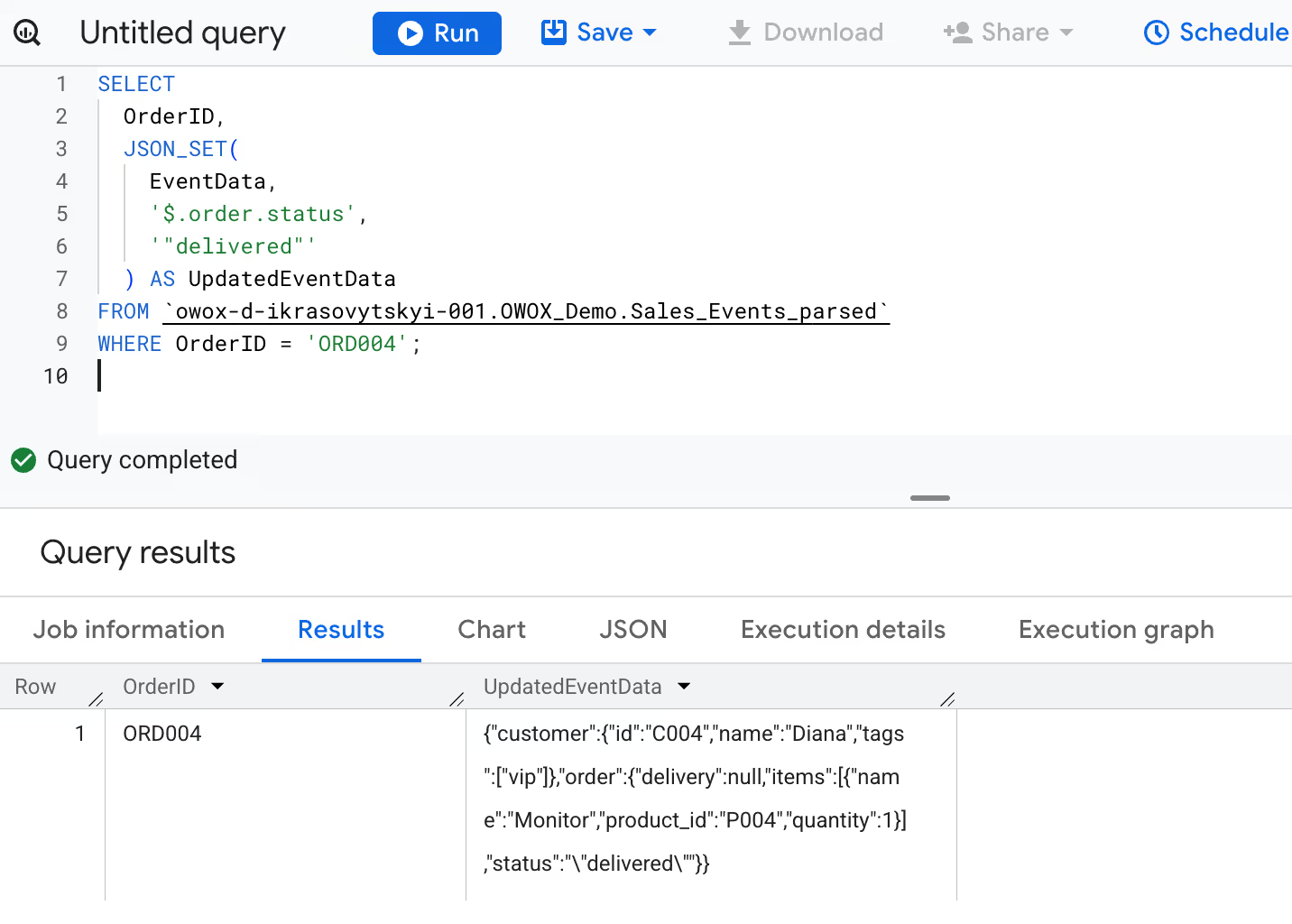

Imagine the marketing team updates Diana’s order status to "delivered" after a successful shipment. They want to reflect this in the order.status field of her record in BigQuery.

1SELECT

2 OrderID,

3 JSON_SET(

4 EventData,

5 '$.order.status',

6 '"delivered"'

7 ) AS UpdatedEventData

8FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

9WHERE OrderID = 'ORD004';

This updates Diana’s order.status from "processing" to "delivered", ensuring the latest fulfillment status is captured for accurate campaign and lifecycle tracking.

The JSON_STRIP_NULLS function removes key-value pairs with null values from a JSON object. This is especially helpful for cleaning incomplete data before analytics or reporting. It can also optionally clean inside arrays and remove empty structures if configured.

The syntax of JSON_STRIP_NULLS is:

1JSON_STRIP_NULLS(

2 json_expr

3 [, json_path ]

4 [, include_arrays => TRUE | FALSE ]

5 [, remove_empty => TRUE | FALSE ]

6)

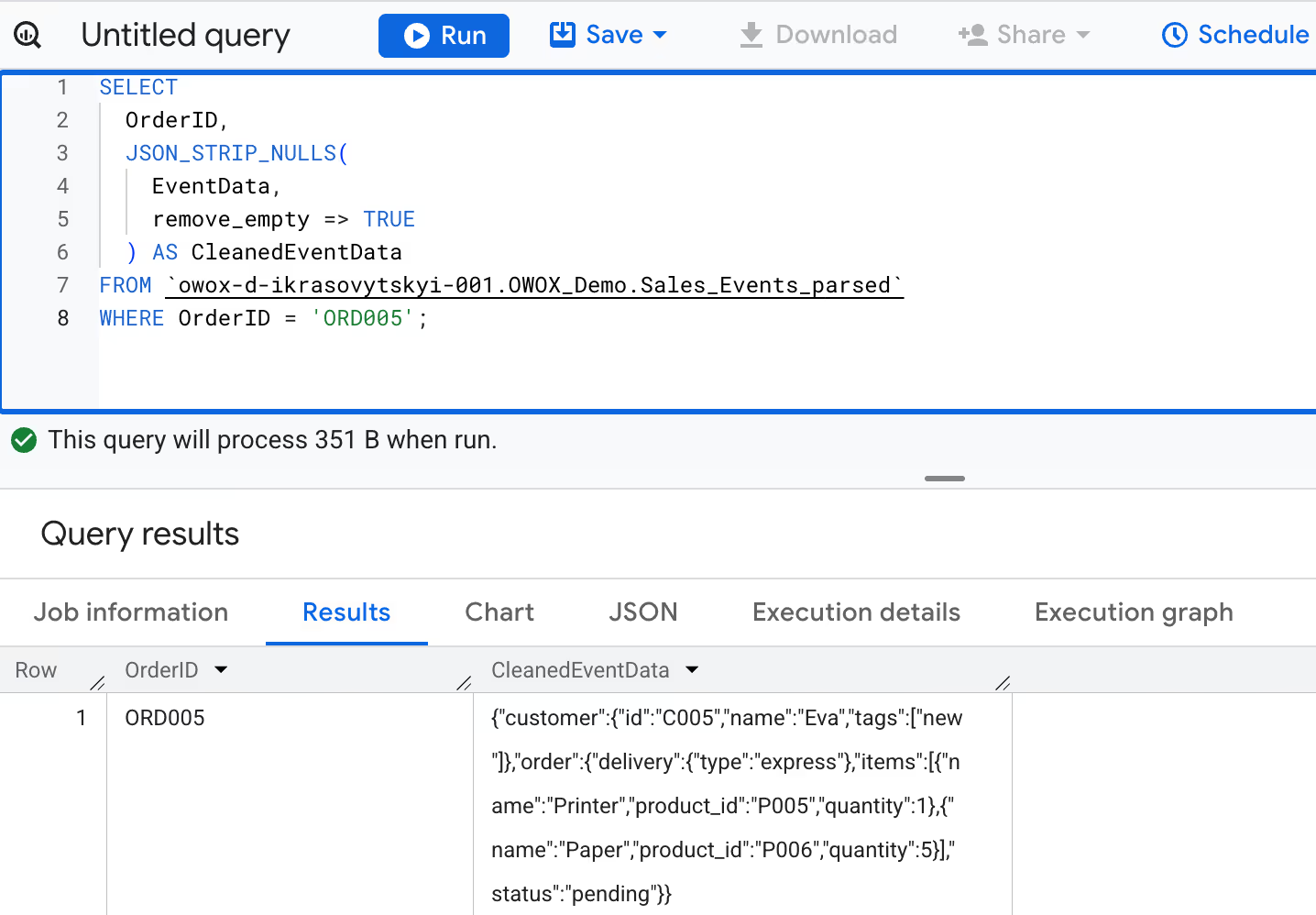

Imagine the marketing team is preparing campaign data for Eva and notices that her delivery date is null. To clean up the record for analysis, they want to remove all null values, including from arrays, and delete any resulting empty objects.

1SELECT

2 OrderID,

3 JSON_STRIP_NULLS(

4 EventData,

5 include_arrays => TRUE,

6 remove_empty => TRUE

7 ) AS CleanedEventData

8FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

9WHERE OrderID = 'ORD005';

This query removes all null fields from the JSON, including delivery.date, and eliminates any remaining empty objects, resulting in cleaner, lighter data that is ready for reliable reporting.

BigQuery's JSON functions aren't just technical tools; they solve real business problems. From cleaning messy event data to modifying nested fields, here’s how teams apply JSON processing in everyday workflows.

When working with customer or order records stored in JSON format, you might need to add a new element to an array. JSON_ARRAY_APPEND allows you to insert values at the end of an existing array within a JSON field without replacing the current contents.

Example:

Imagine the marketing team wants to add a "survey_respondent" tag to customer Alice’s profile to indicate she participated in a feedback campaign. They’ll use JSON_ARRAY_APPEND to update the customer.tags array.

1SELECT

2 OrderID,

3 JSON_ARRAY_APPEND(

4 EventData,

5 '$.customer.tags',

6 '"survey_respondent"'

7 ) AS UpdatedEventData

8FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

9WHERE OrderID = 'ORD001';

This query appends "survey_respondent" to the existing tags for Alice, allowing future segmentation based on participation and engagement activities.

There are times when you want to insert data at a specific position in a JSON array, even if that position doesn’t exist yet. JSON_ARRAY_INSERT allows inserting at indexes beyond the current array length, automatically filling in gaps with null values.

Example :

Now, the marketing team is planning a future tag campaign and wants to pre-assign a placeholder "future_loyalty" tag to Bob, his current customer.tags array only has one value, but the team wants to place the new tag at index 3.

1SELECT

2 OrderID,

3 JSON_ARRAY_INSERT(

4 EventData,

5 '$.customer.tags[3]',

6 '"future_loyalty"'

7 ) AS UpdatedEventData

8FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

9WHERE OrderID = 'ORD002';

This query inserts the tag "future_loyalty" at the fourth position (index 3), padding the array with null values in between if needed.

When working with customer order data, it's common to encounter null fields, such as missing delivery information or optional fields left unfilled. JSON_STRIP_NULLS helps clean up such records by removing unnecessary null values and empty arrays or objects.

Example:

Imagine the marketing analyst wants to clean up EventData for OrderID 'ORD005', removing all null values and empty arrays from her JSON record to prepare it for export.

1SELECT

2 OrderID,

3 JSON_STRIP_NULLS(

4 EventData,

5 remove_empty => TRUE

6 ) AS CleanedEventData

7FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

8WHERE OrderID = 'ORD005';

This query strips out all null fields and also removes any empty structures like empty arrays or objects, resulting in a more compact and clean JSON document.

Sometimes, you may try to remove a key that doesn’t exist in the JSON structure, such as deprecated fields or optional data. JSON_REMOVE handles this gracefully without breaking the query.

Example:

Imagine a marketing analyst is cleaning up Diana’s order data (OrderID = 'ORD004') and attempts to remove a field called "coupon" which does not exist in the original JSON.

1SELECT

2 OrderID,

3 JSON_REMOVE(EventData, '$.order.coupon') AS UpdatedEventData

4FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

5WHERE OrderID = 'ORD004';

Since the "coupon" field doesn’t exist, the function simply returns the original JSON without errors, keeping the document structure intact.

Nested and repeated fields are typical in JSON-based data like event logs or order records. They’re useful for storing complex information—like customer profiles or item lists—in a compact format. To work with this kind of data in BigQuery, you'll often rely on functions like JSON_EXTRACT, UNNEST, and structured JOINs.

Nested and repeated fields are common in JSON-based event logs or order records. They enable compact storage of complex, structured data such as customer details or item lists. To work with them, you often need to use functions like JSON_EXTRACT, UNNEST, and structured JOINs.

Example:

Let’s say you want to list all product names from Eva’s order (OrderID = 'ORD005'), where items are stored in a nested array.

1SELECT

2 OrderID,

3 JSON_VALUE(item, '$.name') AS ProductName

4FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`,

5UNNEST(JSON_QUERY_ARRAY(EventData, '$.order.items')) AS item

6WHERE OrderID = 'ORD005';

This query uses JSON_QUERY_ARRAY to access the items array and UNNEST to flatten it, allowing each product to be listed on a separate row.

In many marketing operations, order data evolves over time, like adding delivery details or updating customer status. Instead of overwriting the entire JSON blobs, JSON_SET helps update specific fields or insert new ones when needed.

Example

Imagine the marketing team wants to update the JSON data for Alice (OrderID = 'ORD001') by inserting a delivery date under the previously null delivery object in her order.

1SELECT

2 OrderID,

3 JSON_SET(

4 EventData,

5 '$.order.delivery.date',

6 '2025-06-05',

7 create_if_missing => TRUE

8 ) AS UpdatedEventData

9FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

10WHERE OrderID = 'ORD001';

This query creates the missing delivery.date field and sets its value to 2025-06-05, without modifying the rest of the JSON. It ensures the new data is added even when intermediate paths are missing or null.

Combining JSON manipulation functions with parsing and conversion in BigQuery allows marketers to reshape raw event data into useful formats. This approach helps clean, extract, and enrich JSON without exporting it elsewhere.

Example

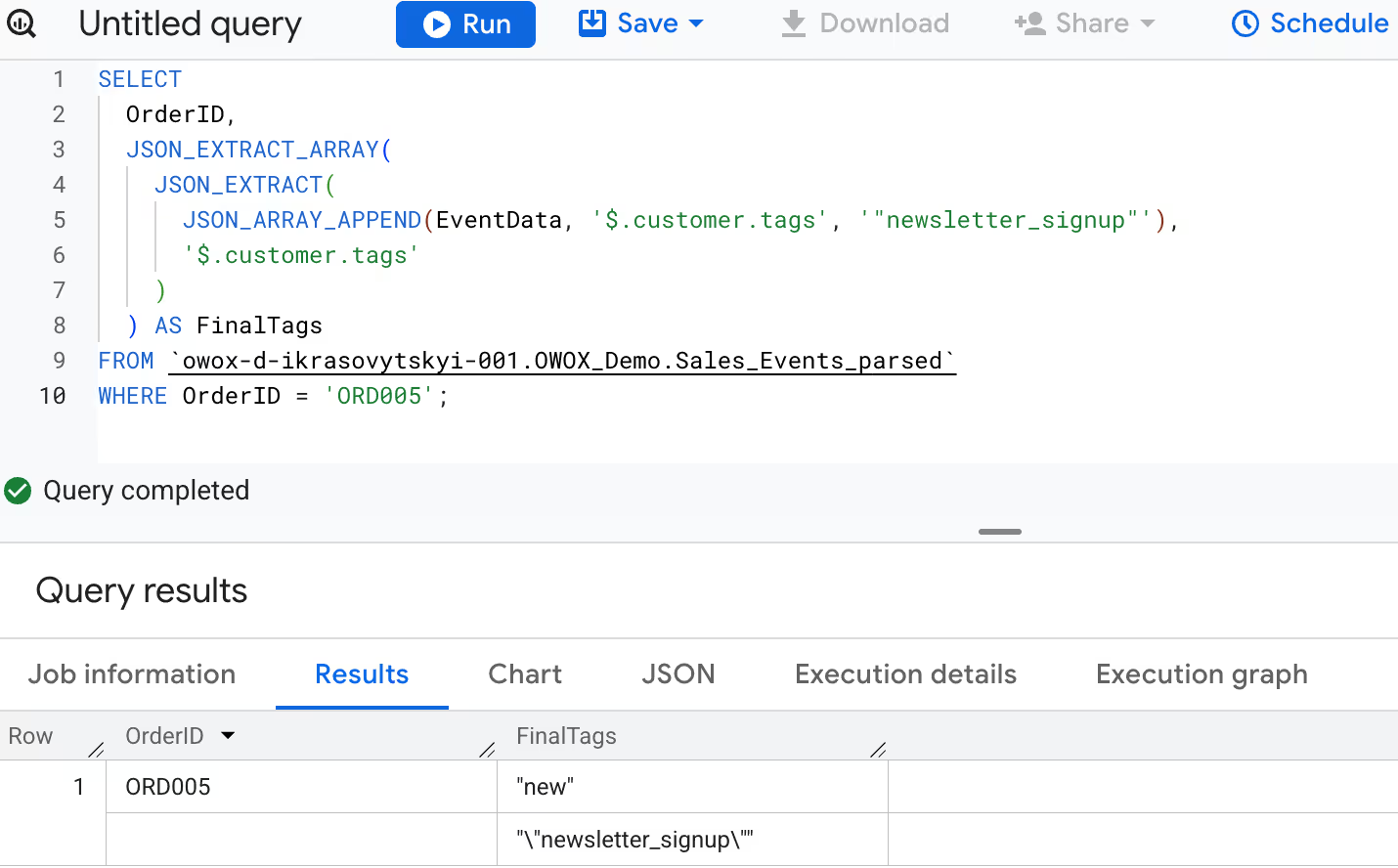

Now, the marketing team wants to append a new tag - "newsletter_signup" to Eva’s customer profile (OrderID = 'ORD005') and then extract the final list of tags as a SQL array for segmentation.

1SELECT

2 OrderID,

3 JSON_EXTRACT_ARRAY(

4 JSON_EXTRACT(

5 JSON_ARRAY_APPEND(EventData, '$.customer.tags', '"newsletter_signup"'),

6 '$.customer.tags'

7 )

8 ) AS FinalTags

9FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Sales_Events_parsed`

10WHERE OrderID = 'ORD005';

This query appends "newsletter_signup" to the tags array, extracts the updated array, and parses it, returning a clean list of tags ready for downstream use.

Working with JSON in BigQuery gives you a lot of flexibility, but it also comes with its own set of challenges. In this section, you’ll find common issues, typical error messages, and straightforward tips to help you troubleshoot and resolve them.

❌Common Issue: JSON files fail to load when they aren’t in newline-delimited JSON (NDJSON) format. BigQuery expects each line to be a standalone JSON object, not a single array or pretty-printed block.

✅Advice: Reformat your JSON file so that each line contains one complete JSON object. Avoid wrapping data in arrays or using indentation. Convert pretty-printed JSON to NDJSON before uploading to BigQuery.

❌Common Issue: BigQuery's JSONPath implementation supports only a limited set of operators—namely $, ., and []. Using unsupported features, such as wildcards (*), filters, or recursive descent (..), can lead to errors. For instance, attempting to extract all "Name" fields from an array using "$.[Name]" is invalid in BigQuery.

✅Advice: Stick to the supported JSONPath syntax in BigQuery: use $ for the root, . For child elements, and [] for array indices or keys. To extract values from arrays, consider using the UNNEST function in combination with JSON_QUERY_ARRAY.

❌Common Issue: BigQuery batch loads only support CSV, Avro, and newline-delimited JSON (NDJSON). If you try to load files in formats like XML, YAML, or improperly structured JSON, the load will fail.

✅Advice: Convert your data to NDJSON or another supported format like Avro before loading. Make sure each line of JSON is a valid, standalone object, this helps avoid errors during ingestion

❌Common Issue: BigQuery has a limit of 500 nested levels in JSON. If your data includes objects or arrays nested beyond that, your queries or loads will fail.

✅Advice: Flatten overly nested JSON before loading or querying. Restructure complex payloads during ingestion or split them into separate fields to stay within the supported depth.

❌Common Issue: Legacy SQL does not support the JSON data type in BigQuery. Attempting to query JSON columns using legacy SQL results in errors or unsupported function issues.

✅Advice: Always use Standard SQL when working with JSON in BigQuery. Enable it by default in your query settings to access JSON functions like JSON_EXTRACT, JSON_QUERY, and JSON_VALUE.

❌Common Issue: BigQuery does not support applying row-level access policies directly on JSON columns. Attempting to filter rows based on JSON field values in policy rules is not allowed.

✅Advice: Extract necessary JSON fields into separate columns during ingestion or via scheduled queries. Apply row-level access policies on those extracted columns to enforce data security effectively.

❌Common Issue: BigQuery's JSON type has limitations, including a lack of strict data types, automatic conversion of numbers to FLOAT64, and no native support for enforcing boolean or null types.

✅Advice: When accuracy is important, use SAFE_CAST or extract values into typed columns. Always validate data types during the process to keep later steps free of type-related issues or surprises.

To get the best results when working with JSON in BigQuery, stick to a few practical habits. They’ll help your queries run smoother, keep costs under control, and make sure your data stays consistent

Check the query execution plan, either in the Cloud Console or using the INFORMATION_SCHEMA.JOBS* views, to understand what’s happening under the hood. Add filters early to limit the amount of data your query needs to scan. Break down complex logic with CTEs to keep things readable, and only select the fields you actually need to avoid unnecessary processing.

Establish clear data governance practices to ensure consistency across datasets. This includes using access controls, applying validation checks, and avoiding unverified changes. Consistent data makes queries more reliable and easier to maintain, especially when working with dynamic or external JSON sources.

Use BigQuery's built-in JSON functions to read and work with nested data fields. Functions like JSON_EXTRACT, JSON_VALUE, and JSON_QUERY help you extract values clearly. These tools allow you to work with raw JSON while keeping your queries readable, accurate, and easier to troubleshoot.

Apply WHERE filters early to reduce the data processed by JSON functions. Break down complex operations using Common Table Expressions (CTEs) help keep your SQL organized and easier to manage. When working with JSON data, use JSON_EXTRACT_SCALAR to pull out only the values you need. This keeps processing light, avoids unnecessary work, and makes your queries run faster.

Maintain consistency by validating JSON structures using functions like PARSE_JSON. Apply the same transformation rules across datasets to avoid mismatches. Use materialized views for cleaned outputs, and schedule regular checks to detect schema changes and ensure reliable, uniform processing over time.

BigQuery offers a large set of functions that go far beyond JSON handling. From data formatting and transformation to statistical analysis and conditional logic, each function family serves a distinct purpose in building efficient SQL workflows.

The OWOX BigQuery Data Marts enables you to connect Google Sheets to data sources like BigQuery and Google Analytics, eliminating the need for manual exports. It helps automate reporting, update metrics on schedule, and reduce errors in analysis.

With built-in dashboards and visual reports, both analysts and business users can explore trends and monitor key KPIs directly in Sheets. The extension makes advanced data analysis simple, even for non-technical teams.

These functions let you modify JSON data directly in SQL by appending, inserting, updating, removing values, or cleaning nulls, without needing to transform the data externally.

BigQuery supports native JSON columns and uses a schema-on-read approach, allowing you to store, parse, and query semi-structured data without defining a strict schema upfront.

Yes, you can query nested JSON using path expressions, functions like JSON_EXTRACT, and operators to access or flatten fields, arrays, and objects within complex JSON structures.

Typical use cases include cleaning null values, updating user profiles, extracting API fields, flattening events, and transforming dynamic exports from tools such as HubSpot, GA4, or other SaaS platforms.

Yes, they’re designed to scale. To improve performance, filter early, avoid unnecessary fields, and monitor query execution plans when working with large or deeply nested JSON structures.

Absolutely. You can combine JSON functions with UNNEST, REGEXP, SAFE_CAST, CTEs, or aggregations to parse, transform, and analyze structured and semi-structured data together in one query.

Advanced techniques include partial updates using JSON_SET, combining parsing with manipulation, validating structure with JSON_TYPE, and joining UNNESTed arrays for detailed analytics or reporting workflows.