The Ultimate 2024 ETL Guide: Master Data Integration Techniques

Vlada Malysheva, Creative Writer @ OWOX

The more data from various sources a company collects, the greater its capabilities in analytics, data science, and machine learning. But along with these opportunities come data processing challenges. After all, before you can build reports and search for insights, all this raw and disparate data must be processed: cleaned, checked, converted to a single format, and merged. Extract, Transform, and Load (ETL) processes and tools are used for these tasks. In this article, we analyze in detail what ETL is and why analysts and marketers need ETL tools.

What is ETL and why is it important?

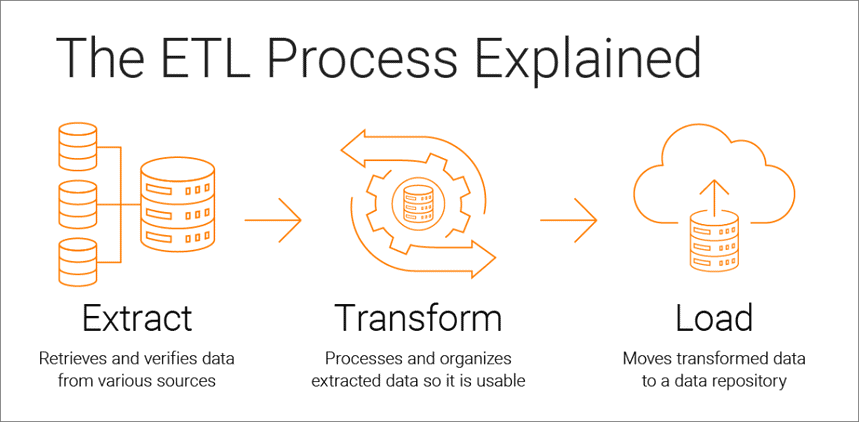

Extract, Transform, Load is a data integration process that underlies data-driven analytics and consists of three stages:

- Data is extracted from the original source

- Data is converted to a format suitable for analysis

- Data is loaded into storage, a data lake, or a business intelligence system

ETL tools allow companies to collect data of various types from multiple sources and merge that data to work with it in a centralized storage location, such as Google BigQuery, Snowflake, or Azure.

Extract, Transform, and Load processes provide the basis for successful data analysis and create a single source of reliable data, ensuring the consistency and relevance of all of your company’s data.

To be as useful as possible to decision-makers, a business’s analytics system must change as the business changes. Because ETL is an ongoing process rather than a one-time task, your analytics system must be flexible, automated, and well-documented.

A brief history of how ETL came about

ETL became popular in the 1970s when companies began working with multiple repositories, or databases. As a result, it became necessary to effectively integrate all of this data.

In the late 1980s, data warehouses appeared that offered integrated access to data from several heterogeneous systems. But many databases required vendor-specific ETL tools, so different departments often chose different ETL tools for different data storage solutions. This meant constantly writing and adjusting scripts for different data sources. As data volume and complexity grew, the ETL process became automated to avoid manual coding.

How the ETL process works

The ETL process consists of three steps: extract, transform, and load. Let’s take a close look at each of them.

Step 1. Extract data

At this step, raw (structured and semi-structured) data from different sources is extracted and placed in an intermediate staging area (a temporary database or server) for subsequent processing.

Sources of such data may be:

- Websites

- Mobile devices and applications

- CRM/ERP systems

- API interfaces

- Marketing services

- Analytics tools

- Databases

- Cloud, hybrid, and on-premises environments

- Flat files

- Spreadsheets

- SQL or NoSQL servers

- Internet of Things (IoT) devices such as vending machines, ATMs, and sensors

Data collected from different sources is usually heterogeneous and presented in different formats: XML, JSON, CSV, and others. Therefore, before extracting it, you must create a logical data map that describes the relationship between data sources and the target data.
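As an illustration, a logical data map can be as simple as a lookup from each target column to the source system and field it comes from. The sketch below is a minimal Python example under that assumption; the source names (`crm`, `shop_api`), the field names, and the `apply_data_map` helper are hypothetical.

```python
# Hypothetical logical data map: target column -> (source system, source field).
DATA_MAP = {
    "order_id": ("crm", "OrderID"),
    "customer_email": ("crm", "Email"),
    "order_total": ("shop_api", "total_amount"),
}


def apply_data_map(source_name: str, record: dict) -> dict:
    """Rename one source record's fields to target column names.

    Only fields that the data map assigns to this source are kept.
    """
    return {
        target: record[field]
        for target, (source, field) in DATA_MAP.items()
        if source == source_name and field in record
    }


crm_row = {"OrderID": 1001, "Email": "a@example.com", "Phone": "555-0100"}
print(apply_data_map("crm", crm_row))
# {'order_id': 1001, 'customer_email': 'a@example.com'}
```

Fields that the map does not mention (here, `Phone`) are dropped, which makes the map the single place that documents what reaches the target storage.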

At this step, it’s necessary to check whether:

- Extracted records match the source data

- Spam or unwanted data is getting into the extract

- Data meets destination storage requirements

- There are duplicates and fragmented data

- All the keys are in place
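Several of these checks are easy to automate. The following minimal sketch assumes each record is a Python dict with an `id` key and a required `email` field (both hypothetical) and that you have the list of keys present in the source. It reports lost records, missing keys or required fields, and duplicates.

```python
from collections import Counter


def check_extract(source_keys, extracted_rows, key="id", required=("id", "email")):
    """Return a list of problems found in an extracted batch (empty if none)."""
    problems = []
    # Extracted records should match the source: no source key may be lost.
    extracted_keys = {row.get(key) for row in extracted_rows}
    lost = sorted(set(source_keys) - extracted_keys)
    if lost:
        problems.append(f"missing from extract: {lost}")
    # All keys and other required fields should be in place.
    for i, row in enumerate(extracted_rows):
        missing = [field for field in required if row.get(field) in (None, "")]
        if missing:
            problems.append(f"row {i} lacks {missing}")
    # Repeated keys usually mean duplicated or overlapping extracts.
    counts = Counter(row.get(key) for row in extracted_rows)
    dupes = sorted(k for k, n in counts.items() if n > 1 and k is not None)
    if dupes:
        problems.append(f"duplicate keys: {dupes}")
    return problems


rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "b@example.com"},
]
print(check_extract([1, 2, 3], rows))
# ['missing from extract: [3]', "row 1 lacks ['email']", 'duplicate keys: [2]']
```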

Data can be extracted in three ways:

- Partial extraction with update notification — The source notifies you when data changes.

- Partial extraction without notification — Not all data sources provide an update notification; however, some can identify the records that have changed and provide an extract of those records.

- Full extraction — Some systems cannot identify which data has changed at all; in this case, only a full extraction is possible. To detect changes, you’ll need a copy of the previous extract in the same format to compare the new one against.
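For full extraction, change detection comes down to comparing the new extract with the previous one. A minimal sketch, assuming both extracts are lists of dicts that share a sortable primary-key field named `id` (an assumption); `diff_snapshots` is a hypothetical helper, not part of any ETL tool.

```python
def diff_snapshots(previous, current, key="id"):
    """Classify records as inserted, updated, or deleted between two full extracts."""
    prev = {row[key]: row for row in previous}
    curr = {row[key]: row for row in current}
    # Keys only in the new extract are new records.
    inserted = [curr[k] for k in sorted(curr.keys() - prev.keys())]
    # Keys only in the old extract were removed at the source.
    deleted = [prev[k] for k in sorted(prev.keys() - curr.keys())]
    # Keys in both extracts whose contents differ were updated.
    updated = [curr[k] for k in sorted(curr.keys() & prev.keys()) if curr[k] != prev[k]]
    return inserted, updated, deleted


previous = [{"id": 1, "price": 10}, {"id": 2, "price": 20}]
current = [{"id": 2, "price": 25}, {"id": 3, "price": 30}]
inserted, updated, deleted = diff_snapshots(previous, current)
print(inserted)  # [{'id': 3, 'price': 30}]
print(updated)   # [{'id': 2, 'price': 25}]
print(deleted)   # [{'id': 1, 'price': 10}]
```

This holds both extracts in memory; for large tables, the same comparison is usually done with a join inside the database.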

This step can be performed either manually by analysts or automatically. However, manually extracting data is time-consuming and can lead to errors. Therefore, we recommend using tools like OWOX BI that automate the ETL process and provide you with high-quality data.

Step 2. Transform data

At this step, raw data collected in an intermediate area (temporary storage) is converted into a uniform format that meets the needs of the business and the requirements of the target data storage. This approach — using an intermediate storage location instead of directly uploading data to the final destination — allows you to quickly roll back data if something suddenly goes wrong.

Data transformation can include the following operations:

- Cleaning — Eliminate data inconsistencies and inaccuracies.

- Standardization — Convert all data types to the same format: dates, currencies, etc.

- Deduplication — Exclude or discard redundant data.

- Validation — Delete unused data and flag anomalies.

- Re-sorting rows or columns of data

- Mapping — Merge data from two values into one or, conversely, split data from one value into two.

- Supplementing — Add data from other sources.

- Formatting data into tables according to the schema of the target data storage

- Auditing data quality and reviewing compliance

- Other tasks — Apply any additional/optional rules to improve data quality; for example, if the first and last names in the table are in different columns, you can merge them.
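Here is a minimal Python sketch of several of these operations applied to customer records: cleaning, deduplication, merging first and last names, and date standardization. The field names and the list of accepted date formats are assumptions for illustration; a real pipeline would follow the schema of your target storage.

```python
from datetime import datetime

# Date formats assumed to appear in the raw data; extend as needed.
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%m/%d/%Y")


def standardize_date(value: str) -> str:
    """Convert a date string in any known format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def transform(rows):
    seen = set()
    result = []
    for row in rows:
        # Cleaning: trim whitespace and normalize email case.
        email = row["email"].strip().lower()
        # Deduplication: keep only the first record per email.
        if email in seen:
            continue
        seen.add(email)
        result.append({
            "email": email,
            # Merging: combine separate first and last name columns.
            "full_name": f"{row['first_name'].strip()} {row['last_name'].strip()}",
            # Standardization: one date format for all sources.
            "signup_date": standardize_date(row["signup_date"]),
        })
    return result


raw = [
    {"email": " Ann@Example.com ", "first_name": "Ann", "last_name": "Lee", "signup_date": "05.03.2024"},
    {"email": "ann@example.com", "first_name": "Ann", "last_name": "Lee", "signup_date": "2024-03-05"},
    {"email": "bo@example.com", "first_name": "Bo ", "last_name": "Kim", "signup_date": "12/31/2023"},
]
for row in transform(raw):
    print(row)
# {'email': 'ann@example.com', 'full_name': 'Ann Lee', 'signup_date': '2024-03-05'}
# {'email': 'bo@example.com', 'full_name': 'Bo Kim', 'signup_date': '2023-12-31'}
```

The order of `DATE_FORMATS` matters: a value such as `03/04/2024` is read as month/day because `%m/%d/%Y` is the only slash-separated format listed.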

Transformation is perhaps the most important part of the ETL process. It helps you improve data quality and ensures that processed data is delivered to storage fully compatible and ready for use in reporting and other business tasks.

In our experience, some companies still don’t prepare business-ready data and build reports on raw data. The main problem with this approach is endless debugging and rewriting of SQL queries. Therefore, we strongly recommend not ignoring this stage.

OWOX BI automatically collects raw data from different sources and converts it into a report-friendly format. You receive ready-made datasets that are automatically transformed into the desired structure, taking into account nuances important for marketers. You won’t have to spend time developing and supporting complex transformations, delving into the data structure, or spending hours looking for the causes of discrepancies.

Step 3. Load data

At this point, processed data from the staging area is loaded into the target database, data warehouse, or data lake, either on-premises or in the cloud.

This gives different teams within the company convenient access to business-ready data.

There are several loading options:

- Initial load — Fill all tables in data storage for the first time.

- Incremental load — Write new data periodically as needed. In this case, the system compares incoming data with what is already available and creates additional records only if it detects new data. This approach reduces the cost of processing data by reducing its volume.

- Full update — Delete table contents and reload the table with the latest data.
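The three loading options can be sketched with Python’s built-in `sqlite3` module, which stands in here for a real data warehouse (an assumption made only to keep the example self-contained). The `orders` table and helper names are hypothetical. `INSERT OR IGNORE` skips rows whose primary key already exists, which matches the incremental approach described above, and an initial load is the same insert into an empty table.

```python
import sqlite3


def create_table(conn):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, total REAL)"
    )


def incremental_load(conn, rows):
    """Insert rows whose key is new; existing rows are left unchanged.

    Also works as the initial load when the table is still empty.
    Returns the number of rows actually inserted.
    """
    before = conn.total_changes
    with conn:  # commit on success, roll back on error
        conn.executemany(
            "INSERT OR IGNORE INTO orders (order_id, total) VALUES (?, ?)", rows
        )
    return conn.total_changes - before


def full_update(conn, rows):
    """Delete the table contents and reload it in a single transaction."""
    with conn:
        conn.execute("DELETE FROM orders")
        conn.executemany("INSERT INTO orders (order_id, total) VALUES (?, ?)", rows)


conn = sqlite3.connect(":memory:")
create_table(conn)
print(incremental_load(conn, [(1, 10.0), (2, 20.0)]))  # initial load: 2
print(incremental_load(conn, [(2, 99.0), (3, 30.0)]))  # only order 3 is new: 1
full_update(conn, [(1, 11.0)])
print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 1
```

In this sketch, an incremental load never changes existing rows. If updated records must overwrite old values, an upsert (for example, SQLite’s `INSERT ... ON CONFLICT ... DO UPDATE`) would be needed instead.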

You can perform each of these steps using ETL tools or manually using custom code and SQL queries.

Advantages of ETL

1. ETL saves your time and helps you avoid manual data processing.

The biggest benefit of the ETL process is that it helps you automatically collect, convert, and consolidate data. You save time and effort and no longer need to manually import huge numbers of rows.

2. ETL makes it easy to work with complex data.

Over time, your business has to deal with a large amount of complex and diverse data: time zones, customer names, device IDs, locations, etc. Add a few more attributes, and you’ll have to format data around the clock. In addition, incoming data can be in different formats and of different types. ETL makes your life much easier.

3. ETL reduces risks associated with the human factor.

No matter how careful you are with your data, you are not immune to mistakes. For example, data may be accidentally duplicated in the target system, or a manual input may contain an error. By eliminating human influence, an ETL tool helps you avoid such problems.

4. ETL helps improve decision-making.

By automating critical data workflows and reducing the chance of errors, ETL ensures that the data you receive for analysis is of high quality and can be trusted. And quality data is fundamental to making better corporate decisions.

5. ETL increases ROI.

Because it saves you time, effort, and resources, the ETL process ultimately helps you improve your ROI. In addition, consolidated, reliable data improves business analytics and supports better business decisions, which can increase profits.

Challenges of ETL

When choosing an ETL tool, it’s worth relying on your business requirements, the amount of data collected, and how you use it. What challenges can you encounter when setting up the ETL process?

1. Processing data from a variety of sources.

One company can work with hundreds of sources with different data formats. These can include structured and semi-structured data, real-time streaming data, flat files, CSV files, S3 buckets, and more. Some of this data is best converted in batches, while streaming conversion works better for other data. Processing each type of data in the most efficient and practical way can be a huge challenge.

2. Data quality is paramount.

For analytics to work efficiently, you need to ensure accurate and complete data transformation. Manual processing, regular error detection, and rewriting of SQL queries can result in errors, duplication, or data loss. ETL tools save analysts from the routine and help reduce errors. A data quality audit identifies inconsistencies and duplicates, and monitoring functions warn if you’re dealing with incompatible data types and other problems.
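Monitoring for incompatible data types can start with a simple schema check. A minimal sketch, assuming records arrive as Python dicts and that the hypothetical `SCHEMA` below describes the target table.

```python
# Hypothetical target schema: column name -> expected Python type.
SCHEMA = {"order_id": int, "total": float, "currency": str}


def audit_types(rows, schema=SCHEMA):
    """List (row index, column, problem) for values that don't fit the schema."""
    issues = []
    for i, row in enumerate(rows):
        for column, expected in schema.items():
            value = row.get(column)
            if value is None:
                issues.append((i, column, "missing"))
            # bool is a subclass of int in Python, so reject it explicitly.
            elif isinstance(value, bool) or not isinstance(value, expected):
                issues.append((i, column, type(value).__name__))
    return issues


rows = [
    {"order_id": 1, "total": 9.99, "currency": "USD"},
    {"order_id": "2", "total": 5.0},
]
print(audit_types(rows))
# [(1, 'order_id', 'str'), (1, 'currency', 'missing')]
```

The check is deliberately strict: an integer in the `total` column is reported, which a real pipeline might instead coerce to a float during transformation.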

3. Your analytics system must be scalable.

The amount of data that companies collect will only grow over the years. For now, a local database and batch loading may be enough, but will that always be the case for your business? It helps to be able to scale ETL processes and capacity as your data grows. When it comes to data-driven decision-making, think big and fast: take advantage of cloud storage (like Google BigQuery) that lets you process large amounts of data quickly and cheaply.

ETL vs ELT — What’s the difference?

ELT (Extract, Load, Transform) is essentially a modern take on the familiar ETL process, in which data is transformed after it’s loaded to storage.

Traditional ETL tools extract and transform data from different sources before loading it into storage. With the advent of cloud data warehouses, there’s no need to transform data in an intermediate stage between the source and the target: transformations can run inside the warehouse after the data is loaded.

ELT is particularly relevant to advanced analytics. For example, you can upload raw data into a data lake and then merge it with data from other sources or use it to train prediction models. Keeping data raw allows analysts to expand their capabilities. This approach is fast because it leverages the power of modern data processing mechanisms and reduces unnecessary data movement.
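To make the ELT order concrete, here is a minimal sketch in which Python’s built-in `sqlite3` stands in for a cloud warehouse such as BigQuery (an assumption for illustration only). Raw events are loaded untouched, and the transformation runs afterward as SQL inside the database; the table and column names are hypothetical.

```python
import sqlite3

# sqlite3 stands in for a cloud warehouse here; table names are illustrative.
conn = sqlite3.connect(":memory:")

# Extract + Load: raw events are stored as-is, every column kept as text.
conn.execute("CREATE TABLE raw_events (user_id TEXT, event TEXT, revenue TEXT)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [("u1", "purchase", "19.90"), ("u1", "view", ""), ("u2", "purchase", "5.10")],
)

# Transform: runs later, in SQL, inside the database that already holds the data.
conn.execute(
    """
    CREATE TABLE revenue_by_user AS
    SELECT user_id, SUM(CAST(revenue AS REAL)) AS revenue
    FROM raw_events
    WHERE event = 'purchase'
    GROUP BY user_id
    """
)
print(conn.execute("SELECT * FROM revenue_by_user ORDER BY user_id").fetchall())
# [('u1', 19.9), ('u2', 5.1)]
```

Because `raw_events` stays in place, analysts can later build other tables from the same raw data without extracting it again.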

Which should you choose, ETL or ELT? If you work locally and your data is predictable and comes from only a few sources, then traditional ETL will suffice. However, traditional ETL is becoming less relevant as more companies move to cloud or hybrid data architectures.

Uncover in-depth insights

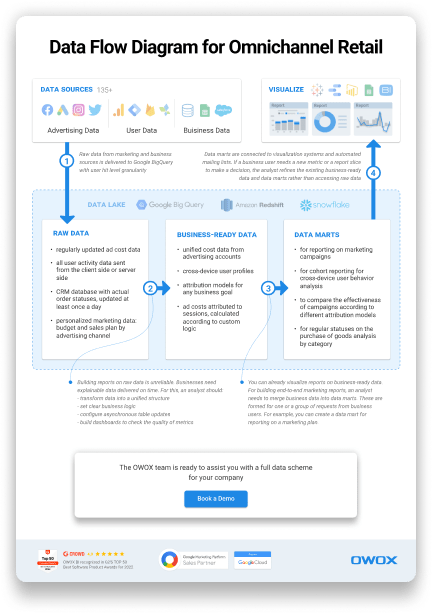

Data Flow Diagram for Omnichannel Retail

Download nowBonus for readers

5 tips for successful ETL implementation

If you want to implement a successful ETL process, follow these steps:

Step 1. Clearly identify the sources of the data you wish to collect and store. These sources can be SQL relational databases, NoSQL non-relational databases, software as a service (SaaS) platforms, or other applications. Once the data sources are connected, define the specific data fields you want to extract. Then ingest the data from each source in raw form.

Step 2. Unify this data using a set of business rules (such as aggregation, joins, sorting, and merging).

Step 3. After transformation, the data must be loaded to storage. At this step, you need to decide how often to load data and whether to append new records or update existing ones.

Step 4. It’s important to check the number of records before and after transferring data to the repository. This helps you detect lost, invalid, or duplicate records.

Step 5. The last step is to automate the ETL process using special tools. This will help you save time, improve accuracy, and reduce the effort of rerunning the ETL process manually. With ETL automation tools, you can design and control a workflow through a simple interface. In addition, these tools often provide capabilities such as data profiling and data cleansing.
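Steps 4 and 5 can be combined in a small runner that a scheduler (for example, cron or Apache Airflow) calls on a fixed interval. The sketch below uses a hypothetical `run_pipeline` helper, not the API of any particular tool: it runs the three steps, reconciles the number of transformed and loaded records, and retries the whole run on failure. It assumes `load` returns the number of rows it wrote.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")


def run_pipeline(extract, transform, load, retries=3, delay_seconds=5.0):
    """Run extract -> transform -> load, retrying the whole run on failure.

    `load` must return the number of rows it wrote, so the run can
    reconcile record counts before reporting success.
    """
    for attempt in range(1, retries + 1):
        try:
            rows = transform(extract())
            loaded = load(rows)
            # Reconcile counts: every transformed row should have been loaded.
            if loaded != len(rows):
                raise RuntimeError(f"expected {len(rows)} rows, loaded {loaded}")
            log.info("attempt %d succeeded: %d rows loaded", attempt, loaded)
            return loaded
        except Exception:
            log.exception("attempt %d of %d failed", attempt, retries)
            if attempt == retries:
                raise  # give up and surface the error to the scheduler
            time.sleep(delay_seconds)
```

Because a failed run is retried from the start, `load` should be idempotent (for example, by inserting with a primary key and skipping existing rows) so that a retry does not duplicate data. With trivial step functions, `run_pipeline(lambda: [3, 1, 2], sorted, len, delay_seconds=0)` returns `3`.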

How to select an ETL tool

To begin with, let’s figure out what ETL tools exist. There are currently four types available. Some are designed to work in a local environment, some work in the cloud, and some work in both environments. Which to choose depends on where your data is located and what needs your business has:

- ETL tools for batch processing of data in local storage.

- Cloud ETL tools that can extract and load data from sources directly to cloud storage. They can then transform the data using the power and scale of the cloud. Example: OWOX BI.

- Open-source tools for building ETL pipelines, such as Apache Airflow, Apache Kafka, and Apache NiFi, are a budget alternative to paid services. Some don’t support complex transformations and may lack dedicated customer support.

- Real-time ETL tools. Data is processed in real time using a distributed model and data streaming capabilities.

What to look for when selecting an ETL tool:

- Ease of use and maintenance

- Speed of work

- Level of security

- Number and variety of connectors needed

- Ability to work seamlessly with other components of your data platform, including data storage and data lakes

ETL/ELT and OWOX BI

With OWOX BI, you can collect marketing data for reports of any complexity in secure Google BigQuery cloud storage without the help of analysts and developers.

What you get with OWOX BI:

- Automatically collect data from various sources

- Automatically import raw data into Google BigQuery

- Clean, deduplicate, monitor the quality of, and update data

- Prepare and model business-ready data

- Build reports without the help of analysts or SQL knowledge

OWOX BI frees up your precious time, so you can pay more attention to optimizing advertising campaigns and growth zones.

You no longer have to wait for reports from an analyst. Get ready-made dashboards or a custom report based on modeled data and tailored to your business.

With OWOX BI’s unique approach, you can modify data sources and data structures without rewriting SQL queries or ordering new reports. This is especially relevant with the release of the new Google Analytics 4.

Key takeaways

The volumes of data collected by companies are getting bigger every day and will continue to grow. Local databases and batch loading may be enough for now, but they will soon stop meeting business needs. That’s why the ability to scale ETL processes comes in handy and is particularly relevant to advanced analytics.

The main advantages of ETL tools are:

- saving your time.

- avoiding manual data processing.

- making it easy to work with complex data.

- reducing risks associated with the human factor.

- helping improve decision-making.

- increasing ROI.

When it comes to choosing an ETL tool, think about your business's particular needs. If you work locally and your data is predictable and comes from only a few sources, then traditional ETL will suffice. But don’t forget that more and more companies are moving to cloud or hybrid architectures and you have to take this into account.

FAQ


What are some challenges associated with ETL?

Some challenges associated with ETL include keeping up with changing data sources and ensuring data security during the extraction, transformation, and loading process. Additionally, ETL can be complex and may require specialized knowledge or expertise to set up and maintain.

What are the benefits of using ETL in data processing?

ETL can help businesses improve data accuracy, save time and effort by automating the data integration process, reduce data duplication, and improve overall data quality.

What are some common ETL tools?

Some common ETL tools include Apache NiFi, Apache Spark, Talend Open Studio, Pentaho Data Integration, and Microsoft SQL Server Integration Services.