Content

- Understanding the Meaning of SQL in Data Management

- Why is Google BigQuery the best choice for Digital Marketing Analytics?

- Importance of Standard SQL in Maximizing BigQuery's Potential

- How is the Standard SQL of BigQuery used in the Marketing Landscape?

- What Are the Advantages of Utilizing Standard SQL over Legacy SQL?

- Different Use Case Examples of using SQL in BigQuery

- Google BigQuery Standard SQL: Best Practices for Marketers

- Exploring Processes on How to Switch to Standard SQL

- Understanding Advanced SQL Syntax & Features in Google BigQuery for Data Analysis

- What Are the SQL Queries Tailored for Marketing Reporting?

BigQuery Standard SQL in Marketing: Advantages and Examples of Its Usage

Oksana Litvenko, Digital Analyst @ OWOX

Liubov Zhovtonizhko, Creative Writer @ OWOX

SQL, or Structured Query Language, has really changed the game for marketers by giving them a precise way to analyze data and understand their customers better.

For a digital marketer, learning SQL for data analysis is crucial, as it enhances their ability to make data-driven decisions. SQL helps in identifying marketing qualified leads (MQLs) based on lead insights and interactions such as CTAs clicked, pages visited, offers downloaded, and interactions with social posts.

Additionally, SQL helps in identifying sales qualified leads (SQLs) by analyzing their readiness to talk to the sales team, having displayed enough interest and intent to buy. We will get into the main benefits and some real-life examples of how SQL is making a big difference in the world of marketing.

In 2016, Google BigQuery introduced Standard SQL as a new way to interact with tables. Before this, BigQuery utilized its own Structured Query Language known as BigQuery SQL, which is now referred to as Legacy SQL.

At first glance, there isn’t much difference between Legacy and Standard SQL: the names of tables are written a little differently; Standard has slightly stricter grammar requirements (for example, you can’t put a comma before FROM) and more data types. However, if you look closely, you will see that there are some minor syntax changes that give marketers many advantages.

Note: This post was originally published in April 2019 and was fully updated in July 2024 for accuracy and relevance.

Understanding the Meaning of SQL in Data Management

Structured Query Language (SQL) is the cornerstone of modern data management and analysis, integral to various database systems like Oracle, MS SQL Server, MS Access, and notably MySQL, a popular relational database management system employed by technology giants like Amazon, Flipkart, and Facebook.

SQL’s versatility and standardization, adhering to ANSI and ISO standards, make it an indispensable tool in the realm of Database Management Systems (DBMS).

DBMSs, including BigQuery, serve as pivotal tools in handling data in all its facets: storage, retrieval, manipulation, and creation. They offer a secure, efficient, and user-friendly means for managing data, including customer data. This is critical in a data-driven world where efficient data handling can significantly impact decision-making and operational efficiency.

In the context of Google BigQuery, an enterprise data warehouse that leverages SQL, we see SQL’s adaptability and power. BigQuery SQL enables large-scale data analysis, harnessing Google’s infrastructure. SQL’s role in data management, particularly in relational databases like MySQL and data warehouses like Google BigQuery, is pivotal.

It provides the framework for efficient, effective, and secure data handling.

Automate your digital marketing reporting

Manage and analyze all your data in one place! Access fresh & reliable data with OWOX BI — an all-in-one reporting and analytics tool

4.9

Importance of SQL Skills for Effective Data Management

Acquiring proficiency in SQL is crucial for efficiently accessing, analyzing, and manipulating data. This skill is increasingly important for marketers, and fortunately, SQL training is widely accessible online, catering to learners from beginners to advanced levels.

- Efficient Data Access and Analysis: Understanding SQL is vital for accessing, analyzing, and manipulating data, especially in large databases.

- Custom Query Creation: SQL allows for writing specific queries to meet exact data needs, facilitating precise and efficient data extraction.

- Standard Database Operations: Key operations like UPDATE, INSERT, and DELETE are fundamental in SQL, enabling data modification, addition, and removal within databases.

- Database and Table Creation: SQL provides the functionality to create new databases and tables, catering to diverse data storage requirements.

- Handling Large Datasets: SQL is capable of managing vast amounts of data and maintaining high performance even with extensive datasets.

- Database Maintenance and Optimization: SQL is instrumental in performing critical maintenance tasks such as indexing and database tuning, which are essential for optimal database performance.

Why is Google BigQuery the best choice for Digital Marketing Analytics?

Google BigQuery has emerged as a transformative tool for digital marketers, streamlining and enhancing data analysis with its advanced features. By utilizing SQL, it empowers analysts to perform various digital marketing tasks such as data retrieval, transformation, segmentation, performance analysis, personalization, and reporting.

Additionally, SQL helps in the lead hand-off process by differentiating between MQLs and SQLs, ensuring that when a lead is designated as an SQL, they are ready to talk to the sales team and are likely to purchase the product or service offered.

Here are some of the key ways it’s changing the game:

Handling Vast Datasets with Ease

Handling vast datasets becomes effortless with Google BigQuery, a cloud-based platform designed for marketers managing large, dynamic datasets. Its optimized architecture and user-friendly drag-and-drop interface simplify data analysis, enabling quick and efficient insights without the need for extensive coding, even when working with terabyte-scale datasets. BigQuery can process billions of rows in seconds, providing real-time insights for marketing strategies.

Centralizing Historical Data

Google BigQuery effectively addresses the common problem of restricted historical data access in many marketing platforms. It enables the storage of all historical data from various sources in a single location, creating a vast repository. This centralization not only processes immediate data access and analysis, but also greatly enhances the gathering of real-time insights.

Data Consolidation from Various Sources

Marketing teams working together with sales teams to generate leads and improve marketing campaigns will find Google BigQuery perfectly tailored to the needs of modern, rapid, data-driven analytics. It simplifies the storage and management of data, acting as a comprehensive data warehouse.

This platform accommodates a wide range of data, from web analytics to advertising and conversion data, thereby improving marketing performance by centralizing the focus on analysis and reporting.

Automating Data Refreshes

Google BigQuery eliminates the need for manual data updates, a common inefficiency in spreadsheet-based analysis.

Utilizing robust API connections ensures regular and automatic data updates. This automation allows marketers to focus on other essential tasks with the assurance that their dashboards will always display the most current data.

Facilitating Flexible Ad-hoc Data Analysis

Optimized for customized data analysis, Google BigQuery supports diverse data manipulation and insight generation. Using SQL, it allows users to retrieve specific data from the database, such as selecting columns like names and email addresses of customers or impressions and clicks of ad campaigns. It easily integrates with various data analysis and visualization tools, enabling the creation of custom metrics and complex visualizations. This flexibility is crucial for deriving deeper, more meaningful insights from data.

Importance of Standard SQL in Maximizing BigQuery's Potential

SQL is vital for harnessing the full potential of a powerful platform built on BigQuery Standard SQL that is aligned with ANSI SQL standards. This alignment ensures familiarity for those experienced with traditional SQL, making it a crucial skill for BigQuery users.

While BigQuery offers a user-friendly interface and APIs, SQL remains the primary tool for data manipulation and analysis. Proficiency in SQL enables users to perform complex operations like data manipulation, aggregation, and transformation, which are essential for extracting valuable insights and making data-driven decisions.

Furthermore, SQL expertise unlocks BigQuery's advanced features, including custom functions, advanced analytics, and machine learning capabilities, enhancing the depth of data analysis. BigQuery's SQL variant is specially designed for its unique architecture, optimizing large-scale data analytics and seamless integration with Google Cloud services.

How is the Standard SQL of BigQuery used in the Marketing Landscape?

SQL reduces data analysis time by up to 60%, increasing efficiency for marketers. Standard SQL, particularly as implemented in BigQuery, has revolutionized the use of SQL in the marketing landscape, especially in digital marketing analytics. Its sophisticated capabilities for data access and manipulation make it a crucial asset for marketers.

With BigQuery’s Standard SQL, complex queries that target specific demographics, such as teenagers in a certain location, become more efficient and precise. This enhances the ability of marketers to gather crucial data for developing targeted marketing strategies.

Furthermore, BigQuery’s Standard SQL features extend beyond basic data retrieval. They enable comprehensive processing and synthesis of large datasets, facilitating the identification of key patterns and trends that are critical for informed decision-making.

In digital marketing, where the success of a campaign largely depends on data-driven insights, BigQuery’s SQL stands out by providing powerful tools for analyzing and optimizing marketing data.

Benefits of Using Standard SQL for Marketers

SQL, particularly BigQuery’s Standard SQL for marketers, offers a range of benefits for marketers by enhancing their data comprehension and management capabilities. Here’s an updated perspective reflecting BigQuery’s capabilities:

- Advanced-Data Comprehension: BigQuery’s SQL empowers marketers with sophisticated tools to delve deep into data essential for making informed decisions. Its advanced functions facilitate a nuanced understanding of complex datasets.

- Efficient Data Extraction: BigQuery’s SQL excels in extracting precise data sets from vast databases quickly and efficiently, a key requirement for targeted marketing analysis.

- Robust Data Management and Security: Beyond data access, BigQuery’s SQL plays a critical role in managing and securing data. It ensures high standards of data integrity and confidentiality, which are crucial in today’s data-sensitive environment.

- Detailed Customer Pattern Analysis: Marketers can leverage BigQuery’s SQL to perform intricate analyses of customer behaviors, identifying patterns such as product preferences and purchasing habits. This granular insight into customer behavior is instrumental in crafting personalized marketing strategies.

- Insights on Key Performance Indicators (KPIs): With BigQuery’s SQL, marketers can efficiently gather insights on essential KPIs like customer retention rates, geographic distribution, and website engagement metrics. This data is vital for evaluating the effectiveness of marketing campaigns.

- Data-Driven Decision-making: BigQuery’s SQL supports a data-driven approach to decision-making, allowing marketers to rely on solid data insights rather than assumptions. This leads to more strategic, outcome-oriented marketing initiatives.

What Are the Advantages of Utilizing Standard SQL over Legacy SQL?

Using Standard SQL in Google BigQuery over Legacy SQL brings several benefits, especially for those looking to dive deep into data analytics. This approach not only matches what most in the industry use, but it also boosts BigQuery’s effectiveness.

With Standard SQL, marketers can write SQL queries to extract necessary data for reporting automation, enabling real-time data analysis and informed decision-making.

New Data Types: Arrays and Nested Fields

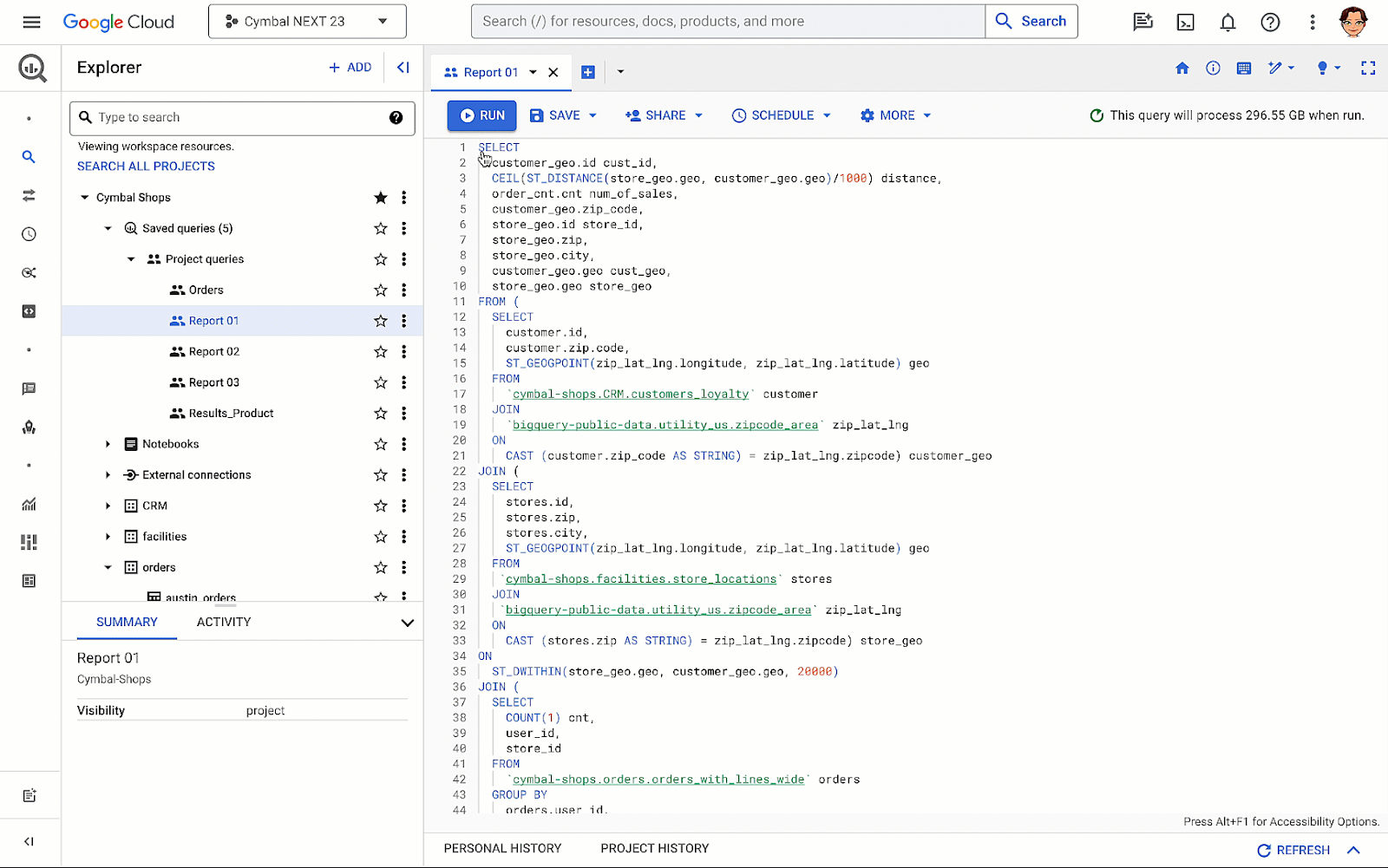

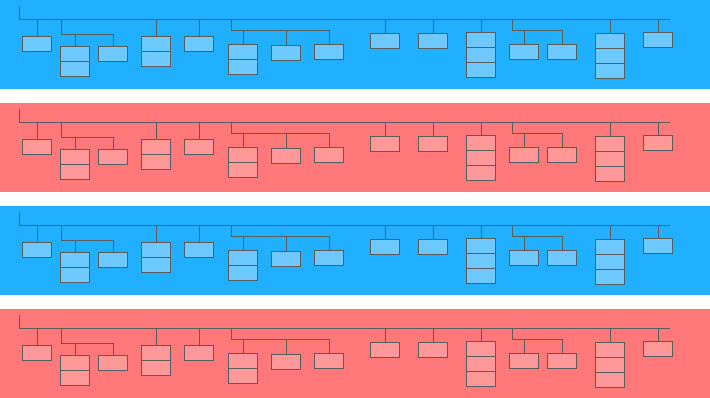

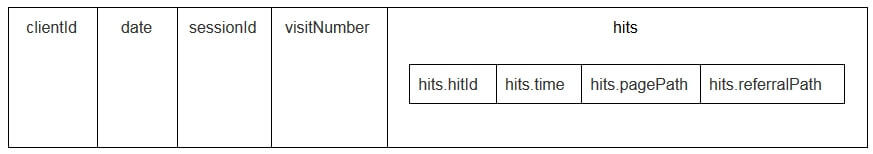

Standard SQL in BigQuery supports new data types: ARRAY and STRUCT (arrays and nested fields). This means that in BigQuery, it has become easier to work with tables loaded from JSON/Avro files, which often contain multi-level attachments. A nested field is a mini table inside a larger one:

In the diagram above, the blue and red bars are the lines in which mini tables are embedded. Each line is one session. Sessions have common parameters: date, ID number, user device category, browser, operating system, etc. In addition to the general parameters for each session, the hits table is attached to the line.

The hits table contains information about user actions on the site. For example, if a user clicks on a banner, flips through the catalog, opens a product page, puts a product in the basket, or places an order, these actions will be recorded in the hits table. If a user places an order on the site, information about the order will also be entered in the hits table:

- transactionId (number identifying the transaction)

- transactionRevenue (total value of the order)

- transactionShipping (shipping costs)

Session data tables collected using OWOX BI have a similar structure

Suppose you want to know the number of orders from users in New York City over the past month. To find out, you need to refer to the hits table and count the number of unique transaction IDs. To extract data from such tables,

Standard SQL has an UNNEST function:

#standardSQL

SELECT

COUNT (DISTINCT hits.transaction.transactionId) -- count the number of unique order numbers; DISTINCT helps to avoid duplication

FROM `project_name.dataset_name.owoxbi_sessions_*` -- refer to the table group (wildcard tables)

WHERE

(

_TABLE_SUFFIX BETWEEN FORMAT_DATE('%Y%m%d',DATE_SUB(CURRENT_DATE(), INTERVAL 1 MONTHS)) -- if we don't know which dates we need, it's better to use the function FORMAT_DATE INTERVAL

AND

FORMAT_DATE('%Y%m%d',DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY))

)

AND geoNetwork.city = 'New York' -- choose orders made in New York CityIf the order information was recorded in a separate table and not in a nested table, you would have to use JOIN to combine the table with order information and the table with session data in order to find out in which session orders were made.

More Subquery Options

If you need to extract data from multi-level nested fields, you can add subqueries with SELECT and WHERE. For example, in OWOX BI session streaming tables, another subtable, product, is written to the hits subtable. The product subtable collects product data that are transmitted with an Enhanced E-commerce array.

If enhanced e-commerce is set up on the site and a user has looked at a product page, the characteristics of this product will be recorded in the product subtable.

To get these product characteristics, you'll need a subquery inside the main query. For each product characteristic, a separate SELECT subquery is created in parentheses:

#standardSQL

SELECT

column_name1, -- list the other columns you want to receive

column_name2,

(SELECT productBrand FROM UNNEST(hits.product)) AS hits_product_productBrand,

(SELECT productRevenue FROM UNNEST(hits.product)) AS hits_product_productRevenue, -- list product features

(SELECT localProductRevenue FROM UNNEST(hits.product)) AS hits_product_localProductRevenue,

(SELECT productPrice FROM UNNEST(hits.product)) AS hits_product_productPrice,

FROM `project_name.dataset_name.owoxbi_sessions_YYYYMMDD`Thanks to the capabilities of Standard SQL, it's easier to build query logic and write code. For comparison, in Legacy SQL, you would need to write this type of ladder:

#legacySQL

SELECT

column_name1,

column_name2,

column_name3

FROM (

SELECT table_name.some_column AS column1...

FROM table_name

)Requests to External Sources

Using Standard SQL, you can access BigQuery tables directly from Google Bigtable, Google Cloud Storage, Google Drive, and Google Sheets.

That is, instead of loading the entire table into BigQuery, you can delete the data with one single query, select the parameters you need, and upload them to cloud storage.

Simplify BigQuery Reporting in Sheets

Easily analyze corporate data directly into Google Sheets. Query, run, and automatically update reports aligned with your business needs

More user functions (UDF)

If you need to use a formula that isn't documented, User Defined Functions (UDF) will help you. In our practice, this rarely happens, since the Standard SQL documentation covers almost all tasks of digital analytics. In Standard SQL, user-defined functions can be written in SQL or JavaScript; Legacy SQL only supports JavaScript.

The arguments of these functions are columns, and the values they take are the result of manipulating columns. In Standard SQL, functions can be written in the same window as queries.

More JOIN Conditions

In Legacy SQL, JOIN conditions can be based on equality or column names. In addition to these options, the Standard SQL dialect supports JOIN by inequality and by arbitrary expression. For example, to identify unfair CPA partners, we can select sessions in which the source was replaced within 60 seconds of the transaction.

To do this in Standard SQL, we can add an inequality to the JOIN condition:

#standardSQL

SELECT *

FROM

(

SELECT

traff.clientId AS clientId,

traff.page_path AS pagePath,

traff.traffic_source AS startSource,

traff.traffic_medium AS startMedium,

traff.time AS startTime,

aff.evAction AS evAction,

aff.evSource AS finishSource,

aff.evMedium AS finishMedium,

aff.evCampaign AS finishCampaign,

aff.time AS finishTime,

aff.isTransaction AS isTransaction,

aff.pagePath AS link,

traff.time-aff.time AS diff

FROM

(

SELECT

fullVisitorID AS clientId,

h.page.pagePath AS page_path,

trafficSource.source AS traffic_source,

trafficSource.medium AS traffic_medium,

trafficSource.campaign AS traffic_campaign,

date,

SAFE_CAST(visitStartTime+h.time/1000 AS INT64) AS time

FROM `demoproject.google_analytics_sample.ga_sessions_20190301`,

UNNEST (hits) AS h

WHERE trafficSource.medium != 'cpa'

) AS traff

JOIN (

SELECT

total.date date,

total.time time,

total.clientId AS clientId,

total.eventAction AS evAction,

total.source AS evSource,

total.medium AS evMedium,

total.campaign AS evCampaign,

tr.eventAction AS isTransaction,

total.page_path AS pagePath

FROM

(

SELECT

fullVisitorID AS clientId,

h.page.pagePath AS page_path,

h.eventInfo.eventAction AS eventAction,

trafficSource.source AS source,

trafficSource.medium AS medium,

trafficSource.campaign AS campaign,

date,

SAFE_CAST(visitStartTime+h.time/1000 AS INT64) AS time

FROM `demoproject.google_analytics_sample.ga_sessions_20190301`,

UNNEST(hits) AS h

WHERE

trafficSource.medium ='cpa'

) AS total

LEFT JOIN

(

SELECT

fullVisitorID AS clientId,

date,

h.eventInfo.eventAction AS eventAction,

h.page.pagePath pagePath,

SAFE_CAST(visitStartTime+h.time/1000 AS INT64) AS time

FROM `demoproject.google_analytics_sample.ga_sessions_20190301`,

UNNEST(hits) AS h

WHERE h.eventInfo.eventAction = 'typ_page'

AND h.type = 'EVENT'

GROUP BY 1, 2, 3, 4, 5

) AS tr

ON total.clientId=tr.clientId

AND total.date=tr.date

AND tr.time>total.time -- JOIN tables by inequality. Pass the additional WHERE clause that was needed in Legacy SQL

WHERE tr.eventAction = 'typ_page'

) AS aff

ON traff.clientId = aff.clientId

)

WHERE diff> -60

AND diff<0

GROUP BY 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13

ORDER BY clientId, finishTimeThe only limitation of Standard SQL with respect to JOIN is that it doesn't allow semi-join with subqueries of the form WHERE column IN (SELECT ...):

#legacySQL

SELECT

mother_age,

COUNT(mother_age) total

FROM

[bigquery-public-data:samples.natality]

WHERE -- such a construction cannot be used in Standard SQL

state IN (SELECT

state

FROM

(SELECT

state,

COUNT(state) total

FROM

[bigquery-public-data:samples.natality]

GROUP BY

state

ORDER BY

total DESC

LIMIT 10))

AND mother_age > 50

GROUP BY

mother_age

ORDER BY

mother_age DESCLess Chance of Mistakes

Some functions in Legacy SQL return NULL if the condition is incorrect. For example, if division by zero has crept into your calculations, the query will be executed, and NULL entries will appear in the resulting rows of the table. This may mask problems in the query or the data.

The logic of Standard SQL is more straightforward. If a condition or input data is incorrect, the query will generate an error, for example, «division by zero,» so you can quickly correct the query. The following checks are embedded in Standard SQL:

- Valid values for +, -, ×, SUM, AVG, STDEV

- Division by zero

Requests Run Faster

JOIN queries written in Standard SQL are faster than those written in Legacy SQL, thanks to preliminary filtering of incoming data. First, the query selects the rows that match the JOIN conditions and then processes them.

In the future, Google BigQuery will work on improving the speed and performance of queries only for Standard SQL.

Tables Can Be Edited: Insert and Delete Rows, Update

Data Manipulation Language (DML) functions are available in Standard SQL. This means that you can update tables and add or remove rows from them through the same window in which you write queries. For example, using DML, you can combine data from two tables into one:

#standardSQL

MERGE dataset.Inventory AS T

USING dataset.NewArrivals AS S

ON T.ProductID = S.ProductID

WHEN MATCHED THEN

UPDATE SET quantity = T.quantity + S.quantity

WHEN NOT MATCHED THEN

INSERT (ProductID, quantity) VALUES (ProductID, quantity)Code Is Easier to Read and Edit

With Standard SQL, complex queries can be started not only with SELECT but also with WITH, making code easier to read, comment, and understand. This also means it's easier to prevent your own and correct others' mistakes.

#standardSQL

WITH total_1 AS ( -- the first subquery in which the intermediate indicator will be calculated

SELECT

id,

metric1,

SUM(metric2) AS total_sum1

FROM `project_name.dataset_name.owoxbi_sessions_YYYYMMDD`

GROUP BY

id, metric

),

total_2 AS ( -- the second subquery

SELECT

id,

metric1,

SUM(metric2) AS total_sum2

FROM `project_name.dataset_name.owoxbi_sessions_YYYYMMDD`

GROUP BY

id, metric1

),

total_3 AS ( -- the third subquery

SELECT

id,

metric,

SUM(metric2) AS total_sum3

FROM `project_name.dataset_name.owoxbi_sessions_YYYYMMDD`

GROUP BY

id, metric

)

SELECT *,

ROUND(100*( total_2.total_sum2 - total_3.total_sum3) / total_3.total_sum3, 3) AS difference -- get the difference index: subtract the value of the second subquery from the value of the third; divide by the value of the third

FROM total_1

ORDER BY 1, 2It's convenient to work with the WITH operator if you have calculations that are done in several stages. First, you can collect intermediate metrics in subqueries and then do the final calculations.

The Google Cloud Platform (GCP), which includes BigQuery, is a full-cycle platform for working with big data, from organizing a data warehouse or data cloud to running scientific experiments and predictive and prescriptive analytics.

With the introduction of Standard SQL, BigQuery is expanding its audience. Working with GCP is becoming more interesting for marketing analysts, product analysts, data scientists, and teams of other specialists.

Different Use Case Examples of using SQL in BigQuery

At OWOX BI, we often work with tables compiled using the Google Analytics 4 export to Google BigQuery or the OWOX BI Pipeline. In the examples below, we'll look at the specifics of SQL queries for such data.

If you aren't already collecting data from your site in BigQuery, you can try doing so for free with the trial version of OWOX BI.

Automate your digital marketing reporting

Manage and analyze all your data in one place! Access fresh & reliable data with OWOX BI — an all-in-one reporting and analytics tool

4.9

1. Select Data for a Time Interval

In Google BigQuery, user behavior data for your site is stored in wildcard tables (tables with an asterisk); a separate table is formed for each day. These tables have the same name: only the suffix is different. The suffix is the date in the format YYYYMMDD.

For example, the table owoxbi_sessions_20190301 contains data on sessions for March 1, 2019.

We can refer directly to a group of such tables in one request in order to obtain data, for example, from February 1 through February 28, 2019. To do this, we need to replace YYYYMMDD with an * in FROM, and in WHERE, we need to specify the table suffixes for the start and end of the time interval:

#standardSQL

SELECT sessionId,

FROM `project_name.dataset_name.owoxbi_sessions_*`

WHERE _TABLE_SUFFIX BETWEEN `20190201` AND `20190228`The specific dates for which we want to collect data are not always known to us. For example, every week, we might need to analyze data for the last three months. To do this, we can use the FORMAT_DATE function:

#standardSQL

SELECT

FROM `project_name.dataset_name.owoxbi_sessions_*`

WHERE

_TABLE_SUFFIX BETWEEN FORMAT_DATE('%Y%m%d',DATE_SUB(CURRENT_DATE(), INTERVAL 3 MONTHS))

AND

FORMAT_DATE('%Y%m%d',DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY))After BETWEEN, we record the suffix of the first table. The phrase CURRENT_DATE (), INTERVAL 3 MONTHS means «select data for the last 3 months from the current date.» The second table suffix is formatted after AND. It's necessary to mark the end of the interval as yesterday: CURRENT_DATE (), INTERVAL 1 DAY.

2. Retrieve User Parameters and Indicators

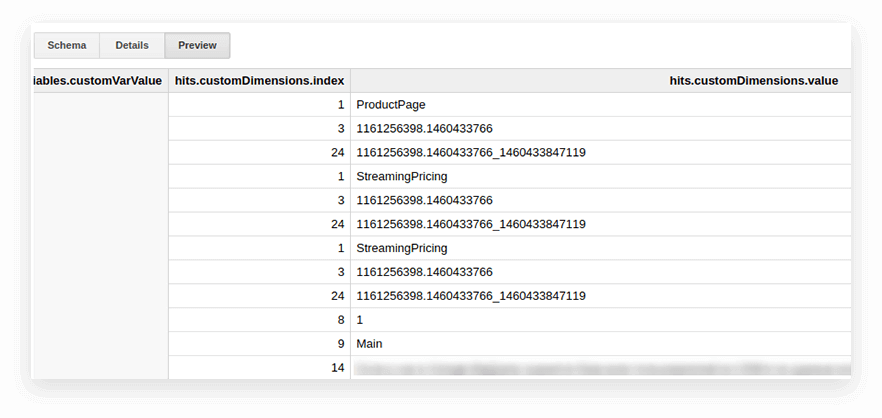

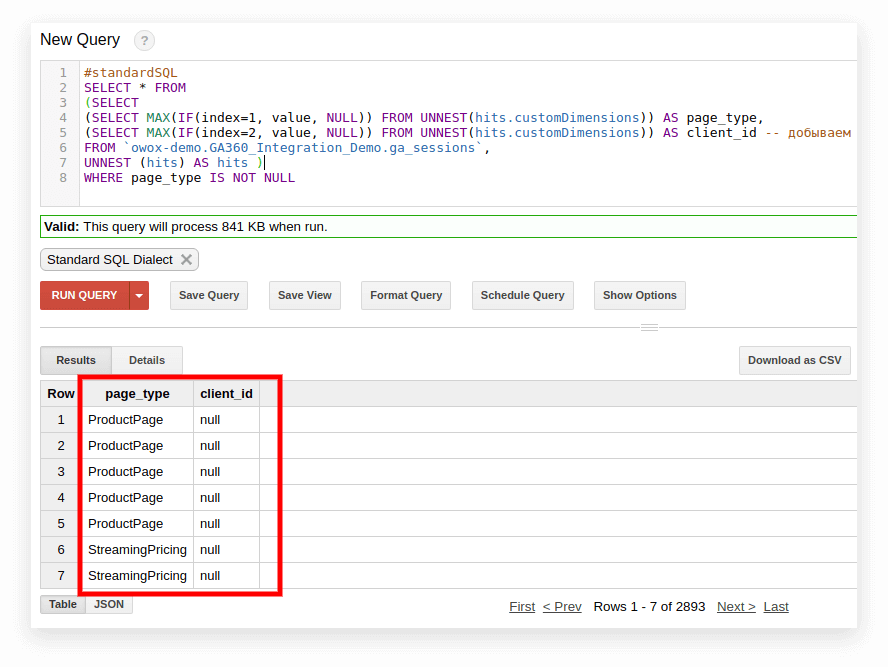

User parameters and metrics in Google Analytics 4 Export tables are written to the nested hits table and to the customDimensions and customMetrics subtables. All custom dimensions are recorded in two columns: one for the number of parameters collected on the site and the second for their values. Here's what all the parameters transmitted with one hit look like:

In order to unpack them and write the necessary parameters in separate columns, we use the following SQL query:

-- Custom Dimensions (in the line below index - the number of the user variable, which is set in the Google Analytics 4 interface; dimension1 is the name of the custom parameter, which you can change as you like. For each subsequent parameter, you need to write the same line:

(SELECT MAX(IF(index=1, value, NULL)) FROM UNNEST(hits.customDimensions)) AS dimension1,-- Custom Metrics: the index below is the number of the user metric specified in the Google Analytics 4 interface; metric1 is the name of the metric, which you can change as you like. For each of the following metrics, you need to write the same line:

(SELECT MAX(IF(index=1, value, NULL)) FROM UNNEST(hits.customMetrics)) AS metric1Here's what it looks like in the request:

#standardSQL

SELECT ,

, -- list column names

(SELECT MAX(IF(index=1, value, NULL)) FROM UNNEST(hits.customDimensions)) AS page_type,

(SELECT MAX(IF(index=2, value, NULL)) FROM UNNEST(hits.customDimensions)) AS visitor_type, -- produce the necessary custom dimensions

(SELECT MAX(IF(index=1, value, NULL)) FROM UNNEST(hits.customMetrics)) AS metric1 -- produce the necessary custom metrics

-- if you need more columns, continue to list

FROM `project_name.dataset_name.owoxbi_sessions_20190201`In the screenshot below, we've selected parameters 1 and 2 from Google Analytics 360 demo data in Google BigQuery and called them page_type and client_id. Each parameter is recorded in a separate column:

3. Calculate the Number of Sessions by Traffic Source, Channel, Marketing Campaigns, City, and Device Category

Such calculations are useful for a marketing team looking to leverage SQL for data analysis, especially if you plan to visualize data in Looker Studio and filter by city and device category.

This is easy to do with the COUNT window function:

#standardSQL

SELECT

, -- choose any columns

COUNT (DISTINCT sessionId) AS total_sessions, -- summarize the session IDs to find the total number of sessions

COUNT(DISTINCT sessionId) OVER(PARTITION BY date, geoNetwork.city, session.device.deviceCategory, trafficSource.source, trafficSource.medium, trafficSource.campaign) AS part_sessions -- summarize the number of sessions by campaign, channel, traffic source, city, and device category

FROM `project_name.dataset_name.owoxbi_sessions_20190201`4. Merge the Same Data from Several Tables

Suppose you collect data on completed orders in several BigQuery tables: one collects all orders from Store A, and the other collects orders from Store B. Sales teams can use SQL to combine this data for better insights and to generate more sales-qualified leads, which is crucial for maximizing revenue and pushing leads through the sales funnel.

You want to combine them into one table with these columns:

- client_id — a number that identifies a unique buyer

- transaction_created — order creation time in TIMESTAMP format

- transaction_id — order number

- is_approved — whether the order was confirmed

- transaction_revenue — order amount

In our example, orders from January 1, 2018, to yesterday must be on the table. To do this, select the appropriate columns from each group of tables, assign them the same name, and combine the results with UNION ALL:

#standardSQL

SELECT

cid AS client_id,

order_time AS transaction_created,

order_status AS is_approved,

order_number AS transaction_id

FROM `project_name.dataset_name.table1_*`

WHERE (

_TABLE_SUFFIX BETWEEN `20180101`

AND

FORMAT_DATE('%Y%m%d',DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY))

)

UNION ALL

SELECT

userId AS client_id,

created_timestamp AS transaction_created,

operator_mark AS is_approved,

transactionId AS transaction_id

FROM `project_name.dataset_name.table1_*`

WHERE (

_TABLE_SUFFIX BETWEEN `20180101`

AND

FORMAT_DATE('%Y%m%d',DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY))

)

ORDER BY transaction_created DESC5. Create a Dictionary of Traffic Channel Groups

When data enters Google Analytics 4, the system automatically determines the group to which a particular transition belongs: Direct, Organic Search, Paid Search, and so on. To identify a group of channels, Google Analytics 4 looks at the UTM tags of transitions, namely utm_source and utm_medium.

If OWOX BI clients want to assign their own names to groups of channels, we create a dictionary in which the transition belongs to a specific channel. To do this, we use the conditional CASE operator and the REGEXP_CONTAINS function. This function selects the values in which the specified regular expression occurs.

We recommend taking names from your list of sources in Google Analytics 4. Here's an example of how to add such conditions to the request body:

#standardSQL

SELECT

CASE

WHEN (REGEXP_CONTAINS (source, 'google') AND medium = 'referral' THEN 'Organic Search'

WHEN (REGEXP_CONTAINS (source, 'yahoo')) AND medium = 'referral' THEN 'Referral'

WHEN (REGEXP_CONTAINS (source, '^(go.mail.ru|google.com)$') AND medium = 'referral') THEN 'Organic Search'

WHEN medium = 'organic' THEN 'Organic Search'

WHEN (medium = 'cpc') THEN 'Paid Search'

WHEN REGEXP_CONTAINS (medium, '^(sending|email|mail)$') THEN 'Email'

WHEN REGEXP_CONTAINS (source, '(mail|email|Mail)') THEN 'Email'

WHEN REGEXP_CONTAINS (medium, '^(cpa)$') THEN 'Affiliate'

WHEN medium = 'social' THEN 'Social'

WHEN source = '(direct)' THEN 'Direct'

WHEN REGEXP_CONTAINS (medium, 'banner|cpm') THEN 'Display'

ELSE 'Other'

END channel_group -- the name of the column in which the channel groups are written

FROM `project_name.dataset_name.owoxbi_sessions_20190201`Google BigQuery Standard SQL: Best Practices for Marketers

Marketers can maximize the benefits of Google BigQuery Standard SQL by following these best practices:

- Optimize Queries: Marketers should use SELECT statements wisely by specifying only the necessary columns to reduce data processing and improve query performance. Leveraging WHERE clauses early in the query process is crucial for minimizing the amount of data BigQuery needs to scan. Additionally, during development and testing, using the LIMIT clause can restrict the number of rows returned, further enhancing query efficiency.

- Efficient Data Structuring: Effective data structuring involves using partitioned tables to manage large datasets, which can significantly speed up query performance when partitioning by date or other relevant columns. Clustering tables with frequently filtered columns is another strategy to improve query efficiency and reduce costs, making data retrieval more efficient.

- Use Analytical Functions: Marketers can simplify complex data analysis tasks by utilizing window functions for running totals, moving averages, and ranking calculations. Common Table Expressions (CTEs) should be used to break down complex queries into simpler, more readable components, making them easier to manage and understand.

- Optimize Storage: Choosing appropriate data types for columns can save storage space and enhance query performance. For instance, using INT64 for integer values instead of STRING can be more efficient. Additionally, taking advantage of BigQuery's built-in compression helps achieve storage efficiency without compromising performance.

- Manage Costs: Regular monitoring of query costs using BigQuery's cost control features is essential. By analyzing query history, marketers can identify expensive queries and optimize them. For those running a high volume of queries, considering flat-rate pricing can help manage costs predictably and avoid unexpected expenses.

- Enhance Security: Implementing fine-grained access control using BigQuery’s Identity and Access Management (IAM) ensures data security and compliance. Enabling audit logging to track data access and query execution further enhances security measures, providing a comprehensive audit trail for compliance purposes.

- Leverage Integration: Integrating BigQuery with Google Analytics 360, Google Ads, and other marketing tools consolidate data from multiple sources, facilitating comprehensive analysis. Utilizing third-party data visualization and BI tools like Looker or Tableau with BigQuery enables marketers to create insightful reports and dashboards, enhancing data-driven decision-making.

- Regular Maintenance: Regularly cleaning up and archiving outdated or unnecessary data helps optimize storage and improve query performance. Keeping table statistics up to date is also crucial, as it assists BigQuery’s optimizer in choosing the most efficient execution plan for queries, ensuring optimal performance.

By adhering to these best practices, marketers can efficiently utilize Google BigQuery Standard SQL to gain valuable insights, optimize campaign performance, and drive better marketing outcomes.

Uncover in-depth insights

[GA4] BigQuery Export SQL Queries Library

Download nowBonus for readers

![[GA4] BigQuery Export SQL Queries Library](https://i.owox.ua/pages/33/33805.jpg)

Exploring Processes on How to Switch to Standard SQL

If you haven't switched to Standard SQL yet, you can do it at any time. The main thing is to avoid mixing dialects in one request.

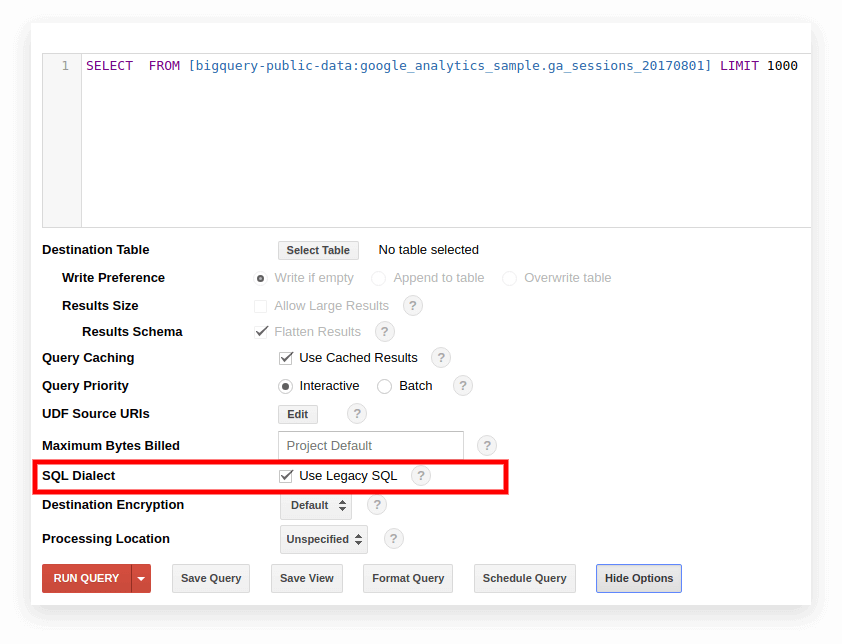

Option 1. Switch in the Google BigQuery Interface

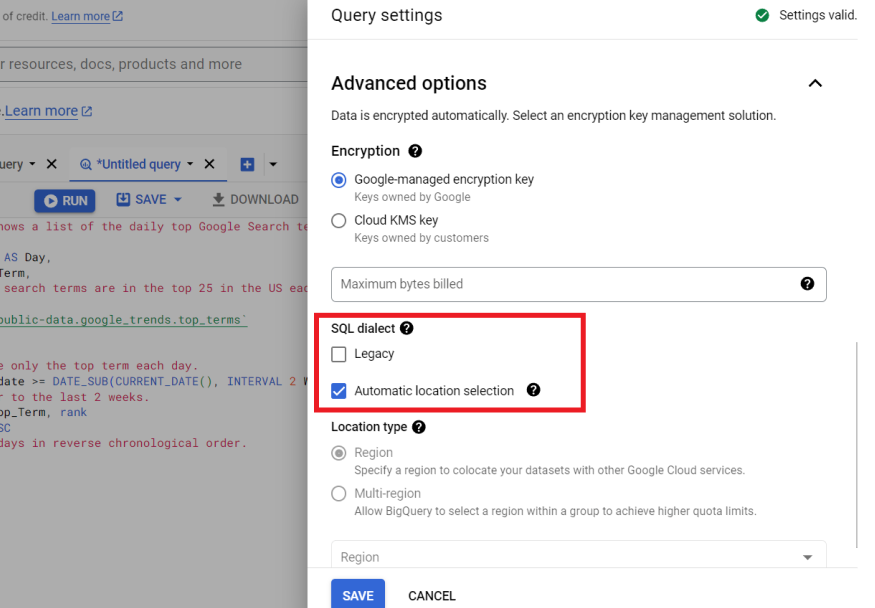

Legacy SQL is used by default in the old BigQuery interface. To switch between dialects, click Show Options under the query input field and uncheck the Use Legacy SQL box next to SQL Dialect.

The new interface uses Standard SQL by default. Here, you need to go to the More tab to switch dialects:

Option 2. Write the Prefix at the Beginning of the Request

If you haven't ticked the request settings, you can start with the desired prefix (#standardSQL or #legacySQL):

#standardSQL

SELECT

weight_pounds, state, year, gestation_weeks

FROM

`bigquery-public-data.samples.natality`

ORDER BY weight_pounds DESC

LIMIT 10;In this case, Google BigQuery will ignore the settings in the interface and run the query using the dialect specified in the prefix.

If you have views or saved queries that are launched on a schedule using Apps Script, don't forget to change the value of useLegacySql to false in the script:

var job = {

configuration: {

query: {

query: 'INSERT INTO MyDataSet.MyFooBarTable (Id, Foo, Date) VALUES (1, \'bar\', current_Date);',

useLegacySql: false

}Option 3. Transition to Standard SQL for Views

If you work with Google BigQuery, not with tables but with views, those views can't be accessed in the Standard SQL dialect. That is, if your presentation is written in Legacy SQL, you can't write requests to it in Standard SQL.

To transfer a view to standard SQL, you need to manually rewrite the query by which it was created. The easiest way to do this is through the BigQuery interface.

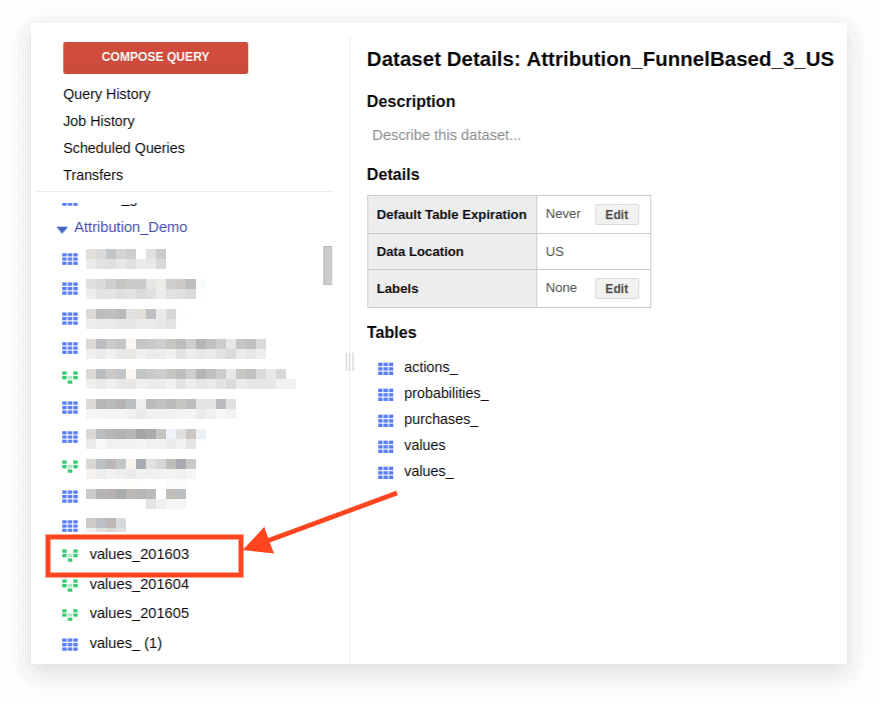

1. Open the view:

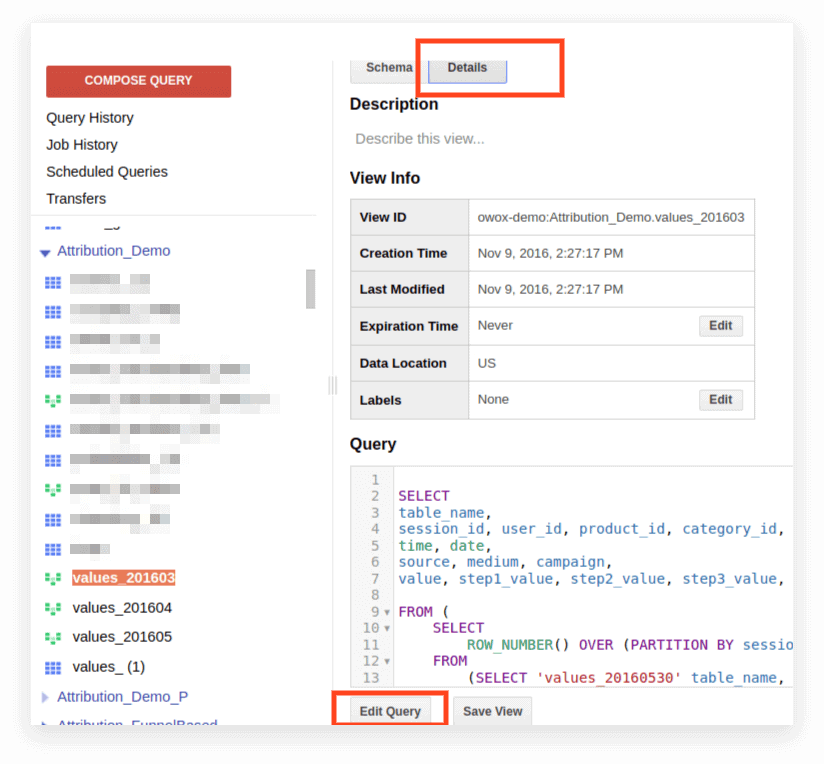

2. Click Details. The query text should open, and the Edit Query button will appear below:

Now, you can edit the request according to Standard SQL rules.

If you plan to continue using the request as a presentation, click Save View after you've finished editing.

Understanding Advanced SQL Syntax & Features in Google BigQuery for Data Analysis

SQL in BigQuery takes data analysis to the next level, offering a range of features for various tasks. It's great for managing data across Google Cloud services and tackling complex analytics.

Compatibility

Thanks to the implementation of SQL, you can directly access data stored in other services directly from BigQuery:

- Google Cloud Storage log files

- Transactional records in Google Bigtable

- Data from other sources

This allows you to use Google Cloud Platform products for any analytical tasks, including predictive and prescriptive analytics based on machine learning algorithms.

Query Syntax

The query structure in the Standard dialect is almost the same as in Legacy:

WITH AS

SELECT

FROM ,

UNNEST AS

WHERE

GROUP BY

HAVING

JOIN ON | USING

ORDER BY DESC | ASC

LIMIT OFFSETThe names of the tables and view are separated with a period (full stop), and the whole query is enclosed in grave accents: `project_name.data_name_name.table_name`.

For example, `bigquery-public-data.samples.natality`.

The full syntax of the query, with explanations of what can be included in each operator, is compiled as a schema in the BigQuery documentation.

Features of SQL syntax in BigQuery:

- Commas are needed to list fields in the SELECT statement.

- If you use the UNNEST operator after FROM, a comma or JOIN is placed before UNNEST.

- You can't put a comma before FROM.

- A comma between two queries equals a CROSS JOIN, so be careful with it.

- JOIN can be done not only by column or equality but by arbitrary expressions and inequality.

- It's possible to write complex subqueries in any part of the SQL expression (in SELECT, FROM, WHERE, etc.). In practice, it's not yet possible to use expressions like WHERE column_name IN (SELECT ...) as you can in other databases.

Operators

In Standard SQL, operators define the type of data. For example, an array is always written in brackets []. Operators are used for comparison, matching the logical expression (NOT, OR, AND), and in arithmetic calculations.

Functions

Standard SQL supports more features than Legacy: traditional aggregation (sum, number, minimum, maximum); mathematical, string, and statistical functions; and rare formats such as HyperLogLog ++.

In the Standard dialect, there are more functions for working with dates and TIMESTAMP. A complete list of features is provided in Google's documentation. The most commonly used functions are for working with dates, strings, aggregation, and windows.

1. Aggregation functions

COUNT (DISTINCT column_name) counts the number of unique values in a column. For example, say we need to count the number of sessions from mobile devices on March 1, 2019. Since a session number can be repeated on different lines, we want to count only the unique session number values:

#standardSQL

SELECT

COUNT (DISTINCT sessionId) AS sessions

FROM `project_name.dataset_name.owoxbi_sessions_20190301`

WHERE device.deviceCategory = 'mobile'SUM (column_name) — the sum of the values in the column

#standardSQL

SELECT

SUM (hits.transaction.transactionRevenue) AS revenue

FROM `project_name.dataset_name.owoxbi_sessions_20190301`,

UNNEST (hits) AS hits -- unpacking the nested field hits

WHERE device.deviceCategory = 'mobile'MIN (column_name) | MAX (column_name) — the minimum and maximum value in the column. These functions are convenient for checking the spread of data in a table.

2. Window (analytical) functions

Analytical functions consider values not for the entire table, but for a certain window — a set of rows that you're interested in. That is, you can define segments within a table.

For example, you can calculate SUM (revenue) not for all lines but for cities, device categories, and so on. You can turn the analytic functions SUM, COUNT, and AVG as well as other aggregation functions by adding the OVER condition (PARTITION BY column_name) to them.

For example, you need to count the number of sessions by traffic source, channel, campaign, city, and device category. In this case, we can use the following expression:

SELECT

date,

geoNetwork.city,

t.device.deviceCategory,

trafficSource.source,

trafficSource.medium,

trafficSource.campaign,

COUNT(DISTINCT sessionId) OVER(PARTITION BY date, geoNetwork.city, session.device.deviceCategory, trafficSource.source, trafficSource.medium, trafficSource.campaign) AS segmented_sessions

FROM `project_name.dataset_name.owoxbi_sessions_20190301`OVER determines the window for which calculations will be made. PARTITION BY indicates which rows should be grouped for calculation. In some functions, it's necessary to specify the order of grouping with ORDER BY. For a complete list of window functions, see the BigQuery documentation.

3. String functions

These are useful when you need to change text, format the text in a line, or glue the values of columns.

For example, string functions are great if you want to generate a unique session identifier from the Google Analytics 4 export data. Let's consider the most popular string functions.

SUBSTR cuts part of the string. In the request, this function is written as SUBSTR (string_name, 0.4). The first number indicates how many characters to skip from the beginning of the line, and the second number indicates how many digits to cut.

For example, say you have a date column that contains dates in the STRING format. In this case, the dates look like this: 20190103. If you want to extract a year from this line, SUBSTR will help you:

#standardSQL

SELECT

SUBSTR(date,0,4) AS year

FROM `project_name.dataset_name.owoxbi_sessions_20190301`CONCAT (column_name, etc.) glues values. Let's use the date column from the previous example. Suppose you want all dates to be recorded like this: 2019-03-01. To convert dates from their current format to this format, two string functions can be used: first, cut the necessary pieces of the string with SUBSTR, then glue them through the hyphen:

#standardSQL

SELECT

CONCAT(SUBSTR(date,0,4),"-",SUBSTR(date,5,2),"-",SUBSTR(date,7,2)) AS date

FROM `project_name.dataset_name.owoxbi_sessions_20190301`REGEXP_CONTAINS returns the values of columns in which the regular expression occurs:

#standardSQL

SELECT

CASE

WHEN REGEXP_CONTAINS (medium, '^(sending|email|mail)$') THEN 'Email'

WHEN REGEXP_CONTAINS (source, '(mail|email|Mail)') THEN 'Email'

WHEN REGEXP_CONTAINS (medium, '^(cpa)$') THEN 'Affiliate'

ELSE 'Other'

END Channel_groups

FROM `project_name.dataset_name.owoxbi_sessions_20190301`This function can be used in both SELECT and WHERE. For example, in WHERE, you can select specific pages with it:

WHERE REGEXP_CONTAINS(hits.page.pagePath, 'land[123]/|/product-order')

4. Date functions

Often, dates in tables are recorded in STRING format. If you plan to visualize results in Looker Studio, the dates in the table need to be converted to DATE format using the PARSE_DATE function.

PARSE_DATE converts a STRING of the 1900-01-01 format to the DATE format. If the dates in your tables look different (for example, 19000101 or 01_01_1900), you must first convert them to the specified format.

#standardSQL

SELECT

PARSE_DATE('%Y-%m-%d', date) AS date_new

FROM `project_name.dataset_name.owoxbi_sessions_20190301`DATE_DIFF calculates how much time has passed between two dates in days, weeks, months, or years. It's useful if you need to determine the interval between when a user sees advertising and places an order. Here's how the function looks in a request:

#standardSQL

SELECT DATE_DIFF(

PARSE_DATE('%Y%m%d', date1), PARSE_DATE('%Y%m%d', date2), DAY

) days -- convert the date1 and date2 lines to the DATE format; choose units to show the difference (DAY, WEEK, MONTH, etc.)

FROM `project_name.dataset_name.owoxbi_sessions_20190301`If you want to learn more about the listed functions, read BigQuery Google Features — A Detailed Review.

What Are the SQL Queries Tailored for Marketing Reporting?

The Standard SQL dialect allows businesses to extract maximum information from data with deep segmentation, technical audits, marketing KPI analysis, and identification of unfair contractors in CPA networks. Here are examples of business problem reports in which SQL queries on data collected in Google BigQuery will help you.

- ROPO analysis: evaluate the contribution of online campaigns to offline sales. To perform ROPO analysis, you need to combine data on online user behavior with data from your CRM**,** call tracking system and mobile application.

If there’s a key in one and the second base — a common parameter that is unique for each user (for example, User ID) — you can track:

- Which users visited the site before buying goods in the store

- How users behaved on the site

- How long users took to make a purchase decision

- What campaigns had the greatest increase in offline purchases?

- Segment customers by any combination of parameters, from behavior on the site (pages visited, products viewed, number of visits to the site before buying) to loyalty card number and purchased items.

- Find out which CPA partners are working in bad faith and replacing UTM tags.

- Analyze the progress of users through the sales funnel within the sales process.

We’ve prepared a selection of queries in Standard SQL dialect. If you have already collected data from your site from advertising sources and from your CRM system in Google BigQuery, you can use these templates to solve your business problems. Simply replace the project name, dataset, and table in BigQuery with your own. In the collection, you’ll receive 11 SQL queries.

For data collected using standard export from Google Analytics 4 to Google BigQuery:

- User actions in the context of any parameters

- Statistics on key user actions

- Users who viewed specific product pages

- Actions of users who bought a particular product

- Set up the funnel with any necessary steps

- Effectiveness of the internal search site

For data collected in Google BigQuery using OWOX BI:

- Attributed consumption by source and channel

- The Average cost of attracting a visitor by city

- ROAS for gross profit by source and channel

- Number of orders in the CRM by payment method and delivery method

- Average delivery time by city

Gain clarity for better decisions without chaos

No switching between platforms. Get the reports you need to focus on campaign optimization

FAQ

-

What makes Google BigQuery a preferred choice for digital marketing analytics?

Google BigQuery is preferred for digital marketing analytics due to its ability to handle vast datasets with ease, automate data refreshes, and provide flexible ad-hoc data analysis. Its integration with various data sources and centralization of historical data enhance its effectiveness in marketing analytics. -

Are there any challenges in transitioning from Legacy SQL to Standard SQL in BigQuery?

Transitioning from Legacy SQL to Standard SQL can involve challenges like adapting to new syntax and functions. However, Google BigQuery provides options to switch between dialects easily, and the familiarity with traditional SQL eases this transition. -

How does SQL in BigQuery aid in data-driven decision-making?

SQL allows for sophisticated data analysis and visualization, helping marketers to identify trends, patterns, and insights that inform strategic decisions. -

Can Standard SQL in BigQuery handle large datasets efficiently?

Yes, SQL in BigQuery is designed to handle large datasets efficiently, thanks to its serverless infrastructure and powerful processing capabilities. -

Why is StandardSQL important for marketers using BigQuery?

SQL is essential for marketers in BigQuery for efficient data extraction, analysis, and manipulation. It enables them to uncover insights, understand customer behaviors, and make data-driven decisions. -

What is the difference between Legacy and Standard SQL in BigQuery?

Legacy SQL was BigQuery's original query language, unique to Google, while Standard SQL is more in line with the SQL used in other database systems, offering more features like arrays, nested fields, and enhanced functions.